Author: Stavros Farsedakis

Mentor: Dr. Hong Pan

Pine Crest School

Abstract

Everyone’s talking about AI, but almost no one is talking about its hidden cost. We think of AI as an invisible “cloud,” but it runs on a massive network of data centers that consume huge amounts of energy, water, and hardware. The numbers are striking: a single large data center can use as much electricity as a whole city, and training one large AI model can use enough energy to power over 100 U.S. homes for a year. On top of that, these facilities use billions of gallons of water for cooling, a serious problem in a world dealing with droughts. The hardware also becomes outdated quickly, feeding a global pile of e-waste that is growing about five times faster than documented recycling.

This paper examines the hidden side of AI, where companies obscure their environmental impact behind outdated metrics and a lack of transparency. But it’s not all bad news. We also explore how this problem can be addressed through “Green AI” techniques, a proposed disclosure label for AI models, new policies, and a shift to renewable energy. It’s time to make AI not just smart but sustainable.

Introduction

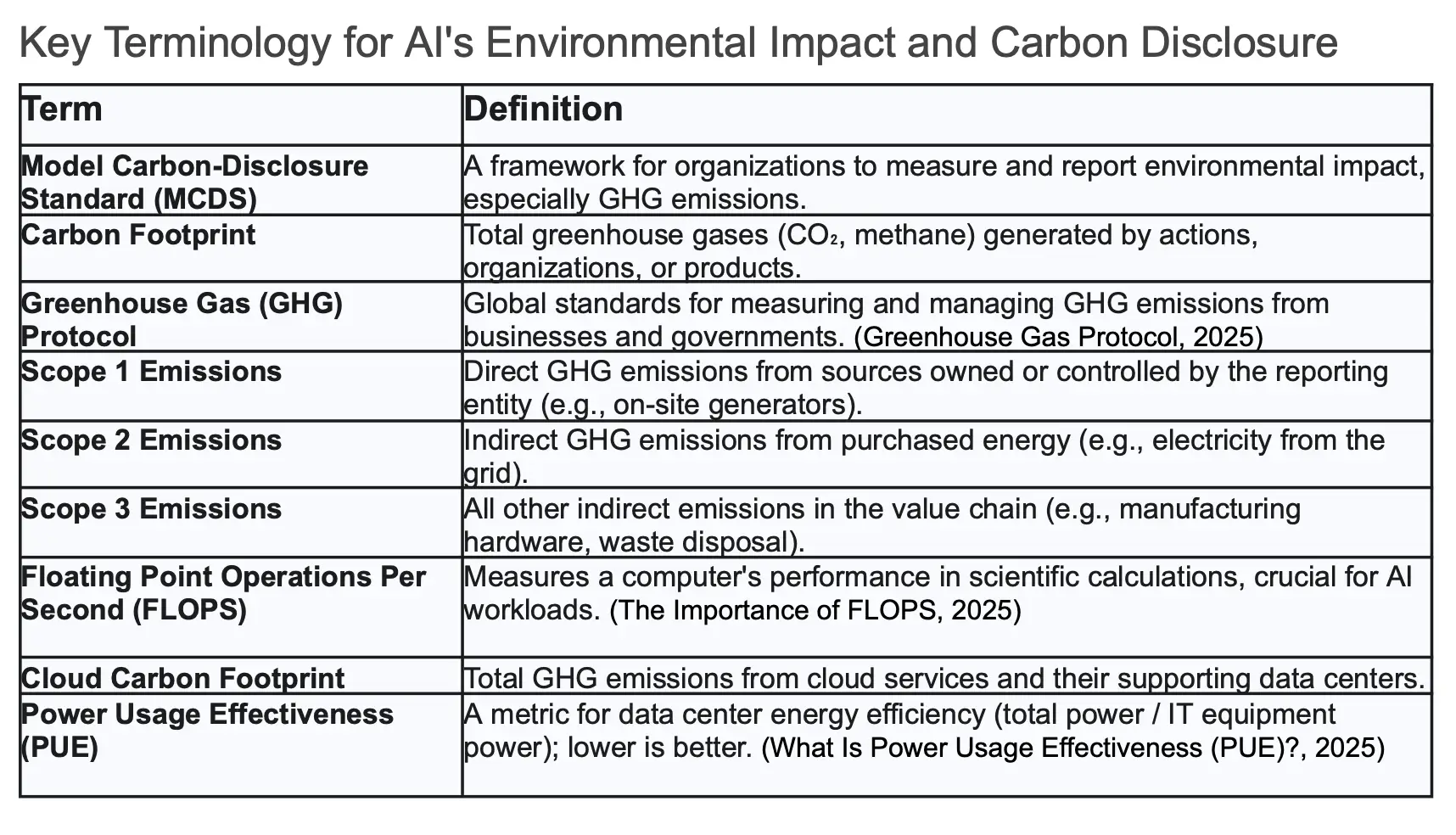

Artificial intelligence has become a powerful technology in our world, but its true environmental cost is rarely made visible. While we interact with AI through the abstraction of cloud computing, the technology runs on a physical network of large data centers that use massive amounts of resources. As AI models have grown, a significant problem has emerged: developers are not required to disclose the energy use or carbon footprint of their systems. This lack of transparency makes it difficult for anyone to understand the full environmental impact. To address this gap, this paper proposes a simple “nutrition label” for AI models, called the Model Carbon-Disclosure Standard (MCDS). The label would report key metrics such as energy consumed and carbon emitted, making environmental costs clear and comparable across models.
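To make the proposed label more concrete, the sketch below models one possible MCDS entry as a Python data class. The field names, the units, and the rule of deriving training emissions from energy multiplied by grid carbon intensity are assumptions chosen for illustration; they are not part of a defined standard, and the example values are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MCDSLabel:
    """Hypothetical MCDS "nutrition label" for one AI model (illustrative fields only)."""

    model_name: str
    training_energy_mwh: float   # electricity used to train the model, in MWh
    grid_kg_co2e_per_kwh: float  # carbon intensity of that electricity, in kg CO2e per kWh
    energy_wh_per_query: float   # average electricity per user query, in Wh

    def __post_init__(self) -> None:
        # Reject negative values so that labels stay comparable.
        for name in ("training_energy_mwh", "grid_kg_co2e_per_kwh", "energy_wh_per_query"):
            if getattr(self, name) < 0:
                raise ValueError(f"{name} must be non-negative")

    @property
    def training_emissions_t(self) -> float:
        """Training emissions in metric tons CO2e.

        MWh x (kg per kWh) equals metric tons, because the two factors of 1,000 cancel.
        """
        return self.training_energy_mwh * self.grid_kg_co2e_per_kwh

    def render(self) -> str:
        """Format the label as plain text so two models can be compared line by line."""
        return "\n".join([
            f"Model:              {self.model_name}",
            f"Training energy:    {self.training_energy_mwh:,.1f} MWh",
            f"Training emissions: {self.training_emissions_t:,.1f} t CO2e",
            f"Energy per query:   {self.energy_wh_per_query:.2f} Wh",
        ])


if __name__ == "__main__":
    # Made-up example values, not measurements of any real model.
    label = MCDSLabel("example-model", training_energy_mwh=1000.0,
                      grid_kg_co2e_per_kwh=0.4, energy_wh_per_query=0.3)
    print(label.render())
```

Keeping the label as a small, fixed set of fields with explicit units is what would make two models directly comparable; a real standard would also need agreed measurement boundaries.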

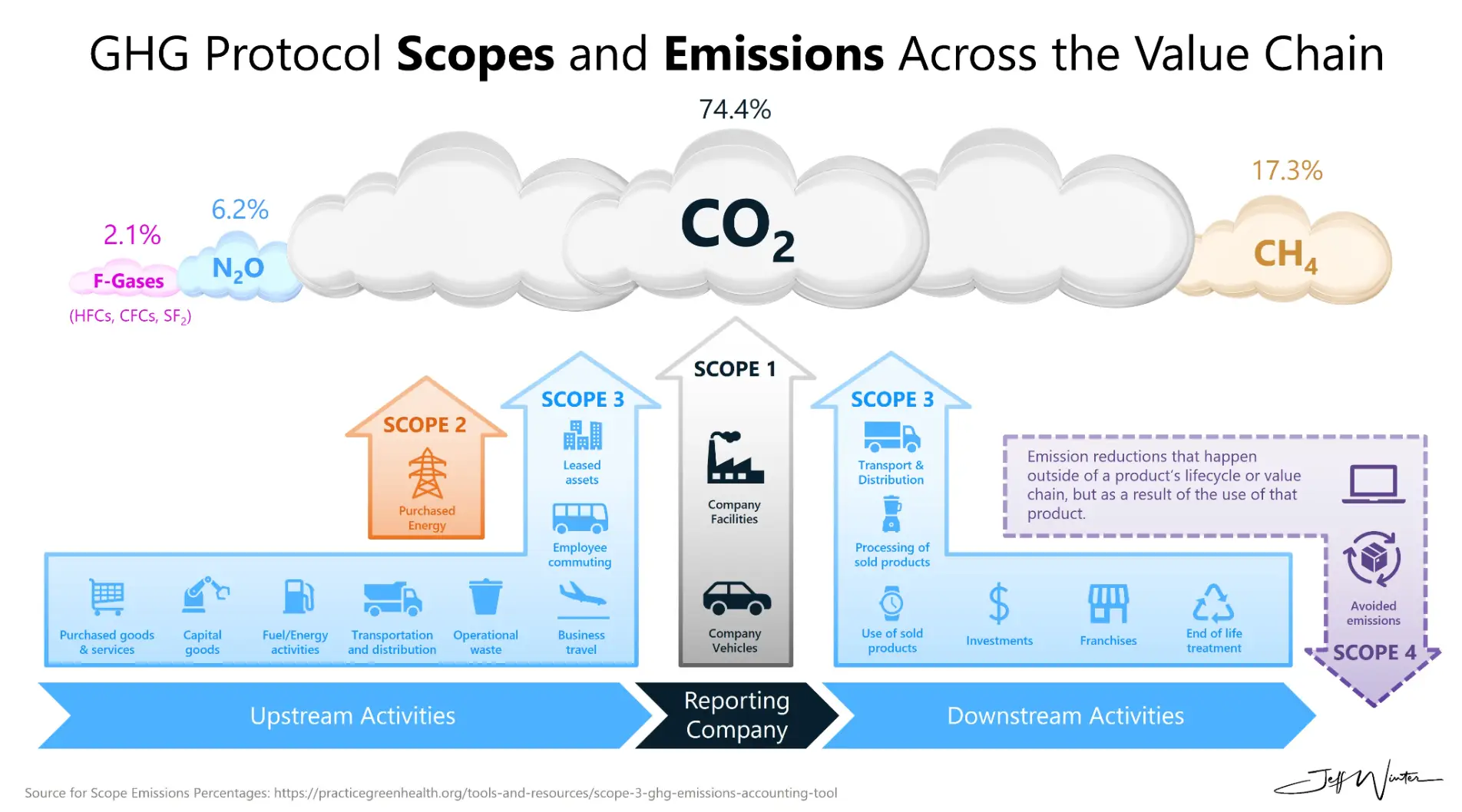

The idea of a disclosure framework is not new. The Greenhouse Gas (GHG) Protocol provides a global standard for measuring emissions, and groups like the Carbon Disclosure Project (CDP) encourage companies and governments to share their environmental impacts with investors and the public. As markets demand more of this transparency, disclosure is becoming increasingly important to a company’s financial and competitive standing.

Concerns about the environmental footprint of computing have evolved over time. In the early days, they focused mostly on the toxic byproducts of manufacturing devices. With the growth of big data and cloud computing, attention turned to the heavy energy and water consumption of data centers, the real buildings that the term “cloud” often hides. Globally, the number of data centers has grown dramatically in just the past decade (“Measuring AI’s Energy/Environmental Footprint to Access Impacts,” 2025).

The emergence of AI has dramatically accelerated these trends. AI tasks are far more energy-intensive than traditional computing. Training large AI models, for instance, requires a tremendous amount of energy, and even a single question to a chatbot like ChatGPT can use far more electricity than a normal Google search. Since this energy demand is so intense and unpredictable, AI is considered an environmental risk whose full impact is difficult to measure. This paper will explore these challenges in detail and outline a path toward a more transparent and sustainable future for AI.

Current Status

Artificial intelligence, often thought of as an invisible “cloud,” is, in reality, built upon a very real and physical foundation of massive data centers. As AI technology becomes more advanced and common in our daily lives, its environmental footprint, in the form of energy, water, and waste, is growing at a rapid pace, creating significant new challenges.

The Energy Appetite of AI and Data Centers

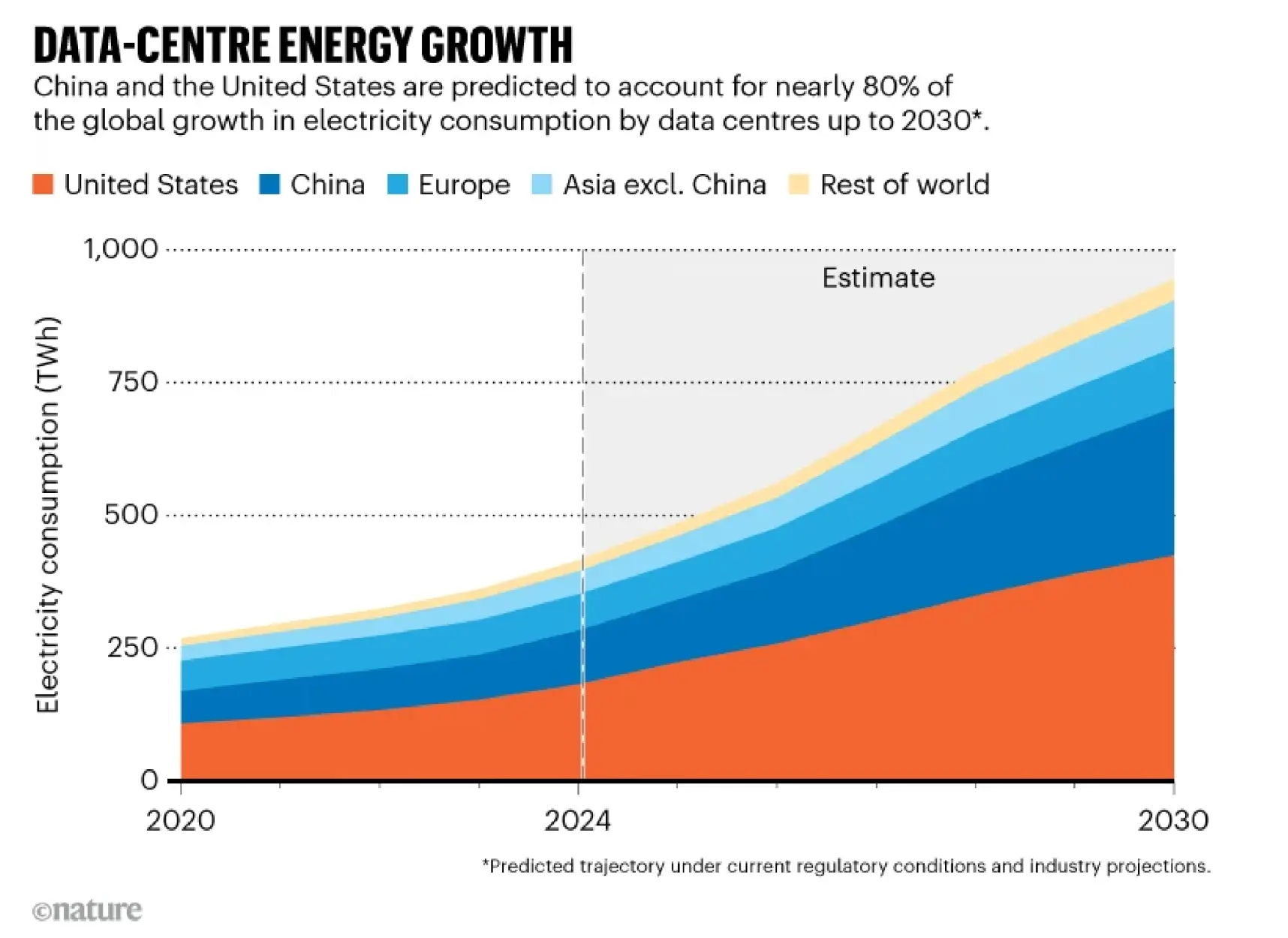

The electricity consumption of data centers, which had been fairly steady for many years, has recently surged because of the AI boom. In 2023, data centers used about 4.4% of all electricity in the United States, a share projected to double or even triple by 2028 and reach up to 12% of the nation’s total electricity demand (Increase in Electricity Demand from Data Centers, 2024). To put that into perspective, by 2030 a single large data center could use as much electricity as an entire city, and global data center electricity use is expected to more than double by the end of the decade (Chen, 2025). This rapid, largely unanticipated growth is straining power grids, and in some cases utilities are keeping old, polluting coal plants running longer to meet demand.
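A quick calculation shows how the cited U.S. figures fit together. Moving from 4.4% to 12% is about a 2.7-fold increase, between the "double" and "triple" cases, and corresponds to the share growing roughly 22% per year. The sketch treats the share as growing at a constant annual rate, which is a simplifying assumption; because total U.S. demand is also changing, growth in the share is not the same as growth in data center consumption.

```python
def growth_check(start_share_pct: float, end_share_pct: float, years: int) -> tuple[float, float]:
    """Return (overall multiple, implied compound annual growth rate) between two shares."""
    multiple = end_share_pct / start_share_pct
    annual_rate = multiple ** (1 / years) - 1  # constant yearly growth that produces `multiple`
    return multiple, annual_rate


if __name__ == "__main__":
    # Figures from the text: 4.4% of U.S. electricity in 2023, up to 12% projected for 2028.
    multiple, rate = growth_check(4.4, 12.0, 2028 - 2023)
    print(f"Share grows {multiple:.2f}x, about {rate:.1%} per year")
    # For comparison: simple doubling and tripling of the 2023 share.
    print(f"Doubled: {4.4 * 2:.1f}%, tripled: {4.4 * 3:.1f}%")
```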

AI tasks use far more energy than traditional computing. Training a large AI model such as GPT-3, for instance, consumed enough energy to power about 120 average U.S. homes for a full year and generated a carbon footprint equal to the yearly emissions of 123 gasoline-powered cars. In everyday use, a single question to a chatbot like ChatGPT can use nearly 10 times the electricity of a standard Google search. For more complex tasks, energy use rises sharply: generating a five-second AI video, for example, can use about as much electricity as keeping a TV on all day.
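Comparisons like "120 homes" and "10 times a search" are simple divisions. The inputs below are assumptions, not figures from this paper's sources: roughly 1,287 MWh for GPT-3 training, about 10.7 MWh per year for an average U.S. household, and about 2.9 Wh per chatbot query versus 0.3 Wh per web search, all widely cited published estimates. With them, the sketch gives about 120 home-years and a ratio of about 9.7, consistent with the text.

```python
def household_years(energy_mwh: float, household_mwh_per_year: float) -> float:
    """How many average homes the given energy could supply for one year."""
    return energy_mwh / household_mwh_per_year


def per_query_ratio(chatbot_wh: float, search_wh: float) -> float:
    """How many times more electricity a chatbot query uses than a web search."""
    return chatbot_wh / search_wh


if __name__ == "__main__":
    # ASSUMED inputs (commonly cited published estimates, not from this paper):
    GPT3_TRAINING_MWH = 1287.0   # estimated GPT-3 training energy
    US_HOME_MWH_PER_YEAR = 10.7  # approximate average U.S. household use
    CHATBOT_WH, SEARCH_WH = 2.9, 0.3

    print(f"{household_years(GPT3_TRAINING_MWH, US_HOME_MWH_PER_YEAR):.0f} home-years")
    print(f"{per_query_ratio(CHATBOT_WH, SEARCH_WH):.1f}x a web search")
```

Because both results are ratios, they shift directly with the assumed inputs; a different household average changes the "homes" figure proportionally.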

Despite these impacts, the industry’s environmental footprint remains opaque because reporting is neither clear nor consistent. Companies often rely on metrics like Power Usage Effectiveness (PUE), which compares a facility’s total energy use with the energy delivered to its computing equipment but says nothing about how efficiently that hardware actually works. A data center can therefore look efficient on paper while still wasting a great deal of energy. A second issue is how companies report emissions under the Greenhouse Gas (GHG) Protocol, the global framework that sorts emissions into Scope 1 (direct), Scope 2 (from purchased electricity), and Scope 3 (from the value chain, such as manufacturing). Because Scope 2 emissions can be reported on a market basis, purchases of renewable energy credits can offset a company’s electricity emissions on paper and make it appear much greener than it is. One analysis of a major company’s 2022 data found that its publicly reported emissions were only 273 metric tons of CO2 equivalent, while its emissions based on the local power grid were over 3.8 million metric tons, a gap of roughly 14,000 times. Without clear standards to hold them accountable, companies have little incentive to focus on energy efficiency.
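Both reporting problems can be shown with small calculations. PUE is total facility energy divided by the energy delivered to IT equipment, so two facilities can share the same PUE while doing very different amounts of useful work. The GHG Protocol's Scope 2 guidance describes two methods: location-based, which uses the average emission factor of the local grid, and market-based, which reflects contractual purchases such as renewable energy certificates. The market-based function below is a deliberate simplification (certificate-covered electricity counts as zero and the rest uses a single residual factor), and the "kWh per job" metric and every number are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / energy delivered to IT equipment."""
    return total_facility_kwh / it_equipment_kwh


def location_based_t(electricity_mwh: float, grid_t_per_mwh: float) -> float:
    """Scope 2 emissions using the average emission factor of the local grid."""
    return electricity_mwh * grid_t_per_mwh


def market_based_t(electricity_mwh: float, rec_mwh: float, residual_t_per_mwh: float) -> float:
    """Simplified market-based Scope 2: electricity covered by certificates counts as zero."""
    uncovered_mwh = max(electricity_mwh - rec_mwh, 0.0)
    return uncovered_mwh * residual_t_per_mwh


if __name__ == "__main__":
    # Two hypothetical facilities with identical PUE but very different useful work.
    total_kwh, it_kwh = 1_200_000.0, 1_000_000.0
    for name, useful_jobs in (("A", 1000), ("B", 500)):
        print(name, f"PUE={pue(total_kwh, it_kwh):.2f}",
              f"kWh per job={total_kwh / useful_jobs:,.0f}")

    # Same electricity, two accounting methods (all numbers made up).
    use_mwh = 10_000.0
    print("location-based:", location_based_t(use_mwh, 0.4), "t")
    print("market-based:  ", market_based_t(use_mwh, 9_990.0, 0.5), "t")
```

Both facilities report a PUE of 1.20, yet B uses twice the energy per job; likewise, the same 10,000 MWh appears as about 4,000 t under the location-based method but only 5 t once certificates cover almost all of it.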

One powerful solution to this challenge is a proactive approach to energy sourcing. The Massachusetts Green High Performance Computing Center in Holyoke is an excellent example. This data center is primarily powered by a nearby hydroelectric station, which creates a direct and reliable source of clean energy from the very beginning, rather than relying on a power grid that is heavily dependent on fossil fuels.

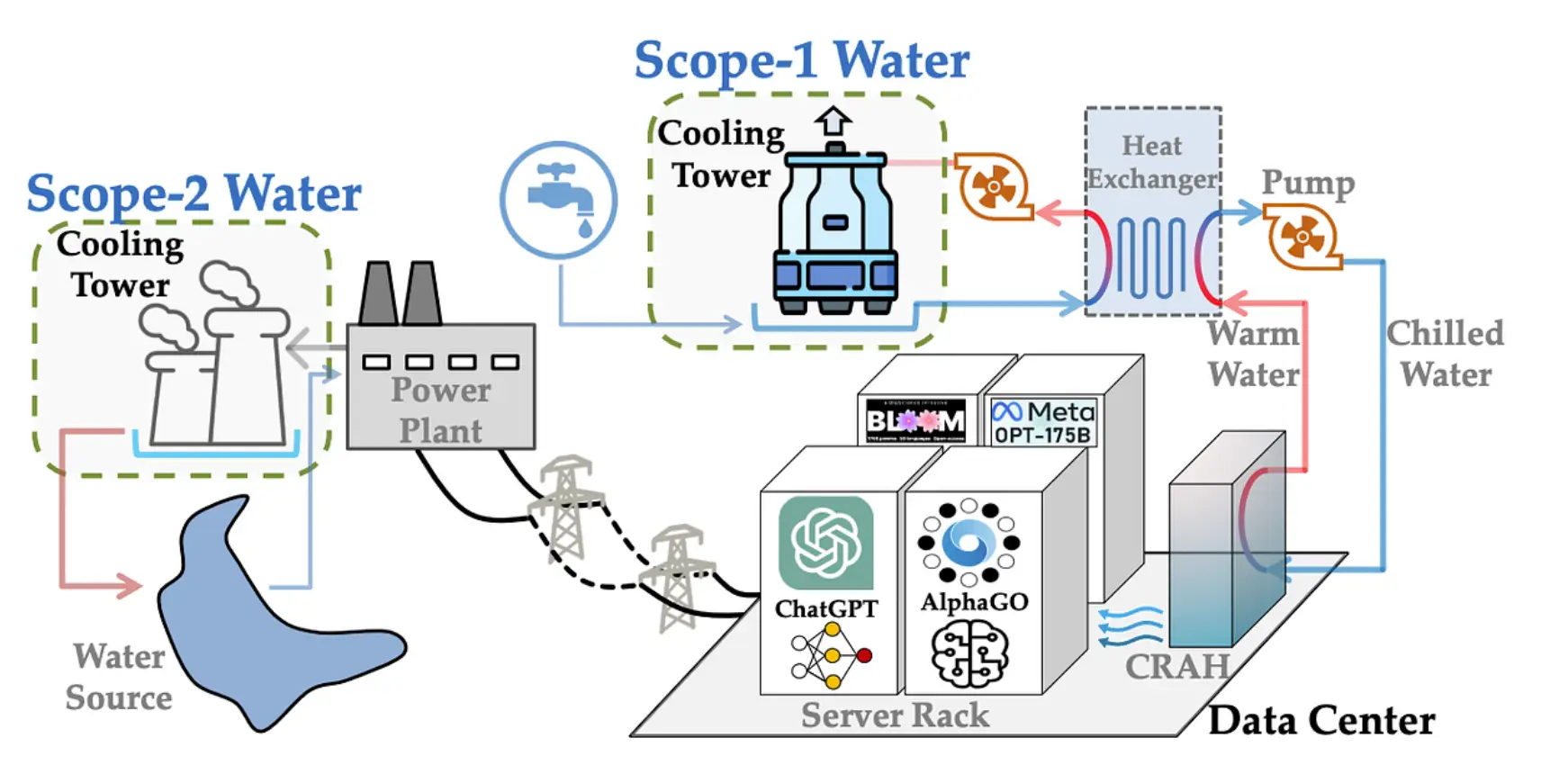

AI’s Thirst for Water

AI’s energy demands also create a huge need for water. The powerful chips in data centers generate enormous heat, and water is used for cooling to keep them from overheating. A single large data center can consume up to 5 million gallons of water a day, enough to supply a town of 10,000 to 50,000 people. At a larger scale, Google’s data centers worldwide consumed about 4.3 billion gallons of water in one year, enough to give every person in the United States about 13 gallons. This consumption is especially serious in water-stressed regions. In Texas, which has been dealing with severe drought, data centers are projected to use nearly 400 billion gallons of water in 2030, almost 6.6% of the state’s total water use. While residents are asked to cut back, these new facilities use millions of gallons daily with little public notice (Texas Data Centers Use 50 Billion Gallons of Water, 2025). As one water policy analyst noted, data centers are often not required to tell communities about their water use, which hides much of their environmental impact.
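The water comparisons above can be checked with per-person arithmetic. The U.S. population of about 335 million is an assumed round figure, not from the paper. The sketch confirms the "about 13 gallons" figure, shows that the town comparison implies 100 to 500 gallons per person per day, and shows that 400 billion gallons being 6.6% of Texas water use implies a statewide total of roughly 6 trillion gallons.

```python
def gallons_per_person(total_gallons: float, people: float) -> float:
    """Spread a volume of water evenly across a population."""
    return total_gallons / people


if __name__ == "__main__":
    US_POPULATION = 335_000_000  # ASSUMED approximate U.S. population

    # Google's ~4.3 billion gallons spread across everyone in the U.S.
    print(f"{gallons_per_person(4.3e9, US_POPULATION):.1f} gallons per person")

    # 5 million gallons per day shared by a town of 10,000 or 50,000 people.
    for town in (10_000, 50_000):
        print(town, f"{gallons_per_person(5e6, town):.0f} gallons per person per day")

    # If 400 billion gallons is ~6.6% of Texas water use, the implied total is:
    print(f"{400e9 / 0.066 / 1e12:.1f} trillion gallons")
```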

Some innovators are tackling this problem head-on. In Finland, a country with a cold climate, one data center has been designed to operate without traditional mechanical cooling. Instead, it relies on cold outdoor air or seawater from the Baltic Sea to cool its servers, which dramatically reduces its energy and water footprint. Even more impressively, waste heat from the servers is captured and sent to a local district heating network to warm nearby homes and businesses. The data center thus reduces its own impact and also helps the community burn less fossil fuel for heating.

The E-waste Challenge

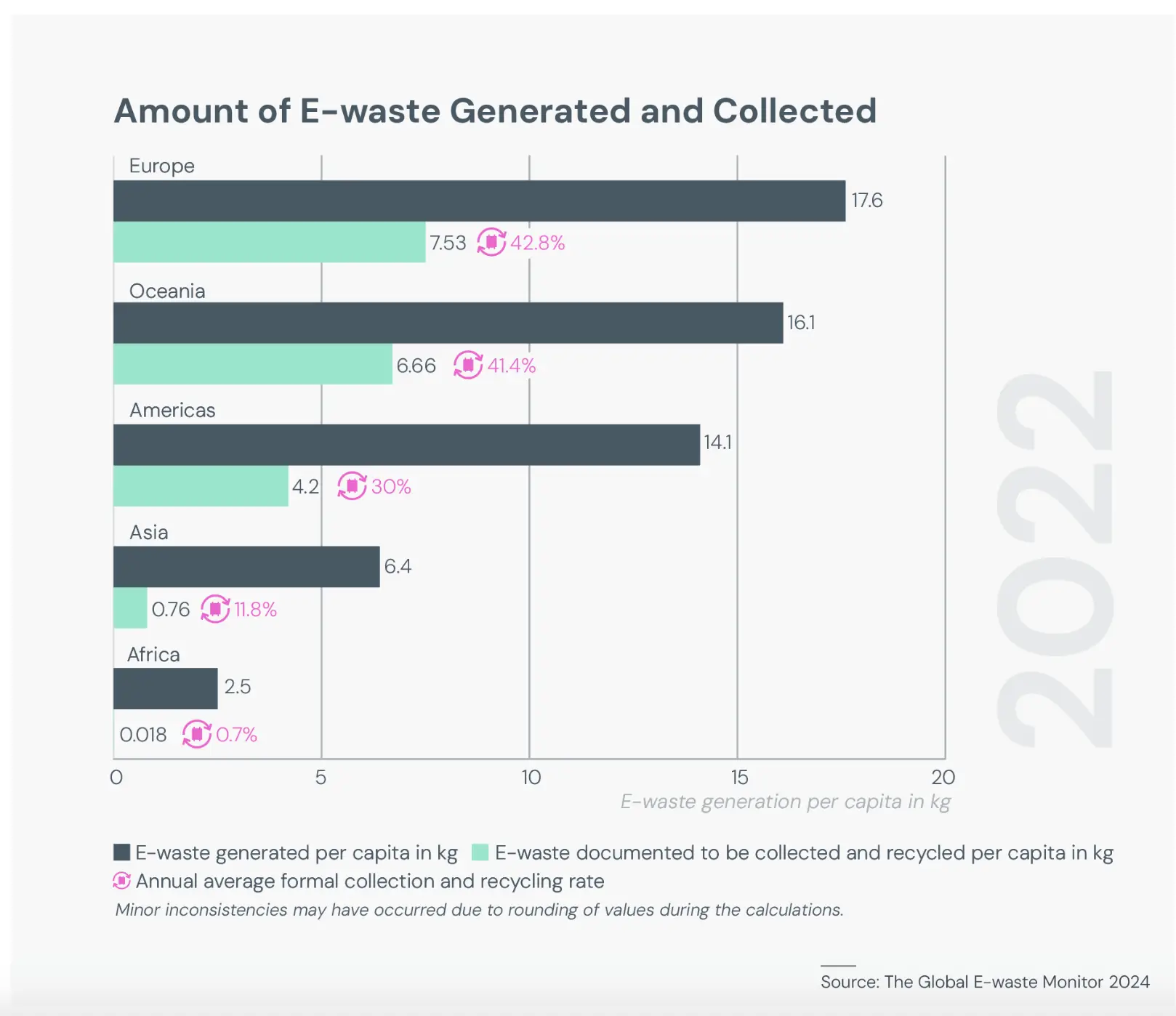

The rapid pace of AI innovation has created a race for faster, more powerful computer hardware. This constant cycle of upgrades and replacements is feeding a global electronic waste (e-waste) problem. Manufacturing this hardware also produces carbon emissions, which count toward a company’s Scope 3 emissions under the GHG Protocol.

According to the U.N., global e-waste reached a record 62 million metric tons in 2022, equal to the weight of more than 150 Empire State Buildings. The problem is getting worse: e-waste generation is rising nearly five times faster than documented e-waste recycling, and the total is projected to reach 82 million metric tons by 2030 (ewastemonitor, 2024). In a high-usage scenario, the spread of large language models alone is expected to generate an extra 2.5 million tons of e-waste annually by 2030. This waste is especially dangerous because it contains harmful materials such as lead and mercury, which can damage human health and the environment if not properly handled.
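The U.N. projection can be expressed as a growth rate. Going from 62 to 82 million metric tons between 2022 and 2030 means about 2.5 million metric tons more each year, or roughly 3.6% compound growth per year. The sketch also puts the high-usage LLM scenario in context as a share of the 2030 total; treating the 2.5 million tons as comparable to the U.N. totals is an assumption for illustration.

```python
def linear_increase_per_year(start: float, end: float, years: int) -> float:
    """Average absolute increase per year between two totals."""
    return (end - start) / years


def compound_rate(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    start_mt, end_mt, years = 62.0, 82.0, 2030 - 2022
    print(f"+{linear_increase_per_year(start_mt, end_mt, years):.1f} Mt per year")
    print(f"{compound_rate(start_mt, end_mt, years):.1%} per year compounded")
    # Share of the projected 2030 total represented by the LLM scenario (2.5 Mt).
    print(f"{2.5 / end_mt:.1%} of projected 2030 e-waste")
```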

Adding to the problem is a significant lack of transparency. Only about a quarter of data center operators track what happens to their retired hardware, and even fewer measure the e-waste they generate. This data gap means that tons of valuable and hazardous equipment often end up in landfills, and there is little accountability or incentive for companies to improve their practices.

Discussion

While the environmental challenges of AI are significant, a new movement is underway to build a more sustainable future for this technology. This effort involves a combination of smarter technology, better operational strategies, and new rules to guide the industry.

Innovations in “Green AI”

The movement toward “Green AI” starts with making the technology itself more efficient. A key part of this is model optimization, where techniques like pruning (removing unnecessary parts of a model) and knowledge distillation (transferring learning from a large model to a smaller one) dramatically reduce the energy needed for AI workloads. For example, researchers have developed tools that can predict a model’s accuracy early in its training, which can save up to 80% of the computing power that would have otherwise been used on a less effective model.
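The two optimization techniques named above can be sketched in a few lines of plain Python. Magnitude pruning, shown here, is one common form of pruning: it zeroes the weights with the smallest absolute values. The distillation loss uses the common soft-target formulation, comparing teacher and student outputs after softening them with a temperature. Both functions are minimal illustrations on plain lists, not a production training setup; real frameworks apply the same ideas to large tensors.

```python
import math


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices sorted from smallest to largest magnitude; the first k are pruned.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores to probabilities; higher temperature gives softer outputs."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL divergence from the softened teacher to the softened student, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's softened predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    print(magnitude_prune([0.5, -0.1, 0.03, -0.8], sparsity=0.5))
    print(distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0]))
```

Pruning saves energy only when the zeros are actually skipped (through sparse kernels or by removing whole structures), and distillation saves energy at inference time because the smaller student is what gets deployed.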

Developers are also creating new, energy-efficient hardware. Beyond traditional GPUs, new types of chips, such as neuromorphic and optical processors, are being designed to run AI tasks with far less power. Additionally, a method called “power capping” can be used to limit the electricity sent to processors, which can cut energy use by about 20% with no loss in performance.
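Power capping saves energy because energy is power multiplied by time: lowering the power limit reduces total energy as long as the job does not slow down proportionally. The numbers below (a 300 W processor, an 80% cap, a 3% slowdown) are hypothetical, chosen only to show the trade-off, and the model assumes the chip draws exactly the capped power throughout. On NVIDIA GPUs, administrators commonly set such a limit with `nvidia-smi -pl <watts>`.

```python
def energy_kwh(avg_power_w: float, hours: float) -> float:
    """Energy = average power x time, converted from watt-hours to kilowatt-hours."""
    return avg_power_w * hours / 1000


def capped_savings(base_power_w: float, base_hours: float,
                   cap_fraction: float, slowdown_fraction: float) -> float:
    """Fraction of energy saved when power is capped but the job runs longer.

    Simplifying assumption: the processor draws exactly the capped power for the whole run.
    """
    base = energy_kwh(base_power_w, base_hours)
    capped = energy_kwh(base_power_w * cap_fraction, base_hours * (1 + slowdown_fraction))
    return 1 - capped / base


if __name__ == "__main__":
    # Hypothetical job: a 300 W processor running for 100 hours,
    # capped at 80% of its power with a 3% longer runtime.
    saved = capped_savings(300.0, 100.0, cap_fraction=0.8, slowdown_fraction=0.03)
    print(f"Energy saved: {saved:.1%}")
```

Under these assumptions the saving is 1 - 0.8 x 1.03, or about 17.6%; capping stops paying off only if the slowdown grows as large as the power reduction.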

Smarter operational strategies are also key. This includes scheduling large computing tasks to run at night when energy demand on the grid is low, or distributing workloads across different time zones to use power when renewable energy like wind and solar is most available. It also means using simpler AI models when they are sufficient for a task, such as a model that runs locally on a user’s device instead of one in a massive data center. (AI Has High Data Center Energy Costs — but There Are Solutions, 2025)
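Carbon-aware scheduling can be sketched as a search over a carbon-intensity forecast: for a job of fixed length, pick the start hour whose window has the lowest total intensity, or pick the region whose grid is currently cleanest. The forecast values, power draw, and region names below are hypothetical, and the sketch assumes the job draws constant power for whole hours.

```python
def best_start_hour(forecast_g_per_kwh: list[float], duration_h: int) -> int:
    """Return the start hour whose window of `duration_h` hours has the lowest total intensity."""
    if not 0 < duration_h <= len(forecast_g_per_kwh):
        raise ValueError("duration must fit inside the forecast")
    best_start, best_total = 0, float("inf")
    for start in range(len(forecast_g_per_kwh) - duration_h + 1):
        total = sum(forecast_g_per_kwh[start:start + duration_h])
        if total < best_total:
            best_start, best_total = start, total
    return best_start


def job_emissions_kg(power_kw: float, forecast_g_per_kwh: list[float],
                     start: int, duration_h: int) -> float:
    """Emissions of a job drawing constant power for whole hours starting at `start`."""
    # Each hour uses power_kw kWh; multiply by that hour's intensity (g/kWh), then convert g to kg.
    return sum(power_kw * g for g in forecast_g_per_kwh[start:start + duration_h]) / 1000


def greenest_region(intensity_by_region: dict[str, float]) -> str:
    """Pick the region whose grid currently has the lowest carbon intensity."""
    return min(intensity_by_region, key=intensity_by_region.__getitem__)


if __name__ == "__main__":
    # Hypothetical hourly forecast (g CO2e per kWh) and hypothetical region names.
    forecast = [450, 420, 300, 220, 210, 260, 380, 470]
    start = best_start_hour(forecast, duration_h=3)
    print("start at hour", start)
    print("run now:", job_emissions_kg(100, forecast, 0, 3), "kg")
    print("run later:", job_emissions_kg(100, forecast, start, 3), "kg")
    print(greenest_region({"region-a": 400, "region-b": 120, "region-c": 250}))
```

Shifting this hypothetical 100 kW, three-hour job from hour 0 to hour 3 cuts its emissions from 117 kg to 69 kg without using any less electricity.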

The Role of Renewable Energy and Advanced Cooling

To power the vast data centers that form the backbone of AI, a global shift to clean energy is crucial. Experts predict that by 2030, about half of the electricity used by data centers will come from renewable sources. (Energy Supply for AI, 2025) AI itself can even assist in this transition by forecasting how much renewable energy will be produced at any given time, allowing for better energy management.

Cooling accounts for a large share of a data center’s energy and water consumption, so new solutions are emerging here as well. Advanced systems like liquid cooling remove heat far more effectively than air, because a liquid such as water can carry thousands of times more heat per unit volume, allowing more powerful hardware in a smaller space while using less energy. Another strategy is placing data centers in naturally cold climates, such as Finland, to use outside air or cold seawater for “free cooling.”
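The advantage of liquid cooling follows from volumetric heat capacity (density times specific heat). Using approximate room-temperature property values for water and air, the ratio is about 3,500. The sketch also applies the heat balance Q = ρ·c·V̇·ΔT to estimate the coolant flow needed to carry away a hypothetical 100 kW rack load with a 10 K temperature rise.

```python
# Approximate property values near room temperature.
WATER_DENSITY = 1000.0  # kg/m^3
WATER_CP = 4186.0       # J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3 (about 20 C at sea level)
AIR_CP = 1005.0         # J/(kg*K)


def volumetric_heat_capacity(density: float, cp: float) -> float:
    """Heat stored per cubic meter per kelvin of temperature rise, in J/(m^3*K)."""
    return density * cp


def flow_needed_m3_per_s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Volume flow that carries heat_w watts with a coolant temperature rise of delta_t_k."""
    return heat_w / (density * cp * delta_t_k)


if __name__ == "__main__":
    ratio = (volumetric_heat_capacity(WATER_DENSITY, WATER_CP)
             / volumetric_heat_capacity(AIR_DENSITY, AIR_CP))
    print(f"water vs. air per unit volume: {ratio:,.0f}x")

    rack_w, delta_t = 100_000.0, 10.0  # hypothetical 100 kW rack, 10 K temperature rise
    water = flow_needed_m3_per_s(rack_w, WATER_DENSITY, WATER_CP, delta_t)
    air = flow_needed_m3_per_s(rack_w, AIR_DENSITY, AIR_CP, delta_t)
    print(f"water: {water * 1000:.1f} L/s, air: {air:.1f} m^3/s")
```

For the same load, a few liters of water per second replace several cubic meters of air per second, which is why liquid cooling needs far less fan power and floor space.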

Policy and Standardization Efforts

For these solutions to have a global impact, they must be backed by clear rules and standards. Over 190 countries have adopted UNESCO’s Recommendation on the Ethics of Artificial Intelligence, which includes environmental considerations. Both the European Union and the United States have introduced legislation aimed at managing AI’s environmental footprint. A U.S. Executive Order, for instance, directs the Department of Energy to create reporting requirements for data centers that cover a technology’s full lifecycle, from manufacturing to disposal.

These efforts aim to create new, transparent metrics for the industry. One proposal is the “AI Energy Score,” a simple star-based rating that shows how energy-efficient an AI model is at a specific task. The International Organization for Standardization (ISO) is also preparing standards for “sustainable AI” that will cover energy, water, and materials. The goal of these policies is to require developers and companies to measure and publicly share their environmental impacts and to integrate these metrics into existing sustainability reporting frameworks such as the Greenhouse Gas (GHG) Protocol.
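The exact AI Energy Score methodology is not described here, so the sketch below only illustrates the general idea of a relative star rating. Models doing the same task are ranked by measured energy per 1,000 queries, and each successive fifth of the ranking receives one star fewer. The quintile rule, the model names, and the measurements are all assumptions.

```python
def star_ratings(energy_wh_per_1k: dict[str, float]) -> dict[str, int]:
    """Assign 1-5 stars by quintile of energy use among models doing the same task.

    The most efficient fifth of models gets 5 stars and the least efficient fifth gets 1.
    """
    if not energy_wh_per_1k:
        return {}
    ranked = sorted(energy_wh_per_1k, key=energy_wh_per_1k.__getitem__)  # lowest energy first
    n = len(ranked)
    return {name: 5 - (rank * 5 // n) for rank, name in enumerate(ranked)}


if __name__ == "__main__":
    # Hypothetical models and measurements (Wh per 1,000 queries) for one task.
    measured = {"model-a": 12.0, "model-b": 95.0, "model-c": 40.0,
                "model-d": 60.0, "model-e": 25.0}
    for name, stars in sorted(star_ratings(measured).items()):
        print(name, "*" * stars)
```

Rating within a task keeps comparisons fair, since an image generator and a text classifier are not expected to use similar amounts of energy.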

Conclusion

The rapid growth of AI is driving a significant surge in demand for energy, water, and hardware, yet our ability to measure and manage this impact is often hindered by a lack of transparency. The industry has frequently relied on outdated metrics or misleading reporting, which makes it hard to hold companies accountable for their actual environmental footprint. As a result, companies have little motivation to make their models more energy-efficient, because no clear standards require them to do so. AI affects many areas, including energy use, water supplies, and e-waste, and these impacts grow as AI models run continuously and hardware is upgraded quickly.

The path forward requires an integrated approach that combines new technology with clear policy. We can make AI more sustainable by using smarter model designs, more efficient hardware, and innovative cooling methods. At the same time, policies that require companies to provide clear and honest information about AI’s environmental impact are essential to level the playing field and hold companies accountable. This combined effort is vital to ensure that the AI revolution is not only powerful and transformative but also sustainable for our planet and future generations.

References

AI has high data center energy costs—But there are solutions. (2025, January 7). https://mitsloan.mit.edu/ideas-made-to-matter/ai-has-high-data-center-energy-costs-there-are-solutions

Chen, S. (2025). Data centres will use twice as much energy by 2030. Nature. https://doi.org/10.1038/d41586-025-01113-z

Electronic Waste Rising Five Times Faster than Documented E-waste Recycling. (2024). https://unitar.org/about/news-stories/press/global-e-waste-monitor-2024-electronic-waste-rising-five-times-faster-documented-e-waste-recycling

Energy supply for AI. (2025). IEA. https://www.iea.org/reports/energy-and-ai/energy-supply-for-ai

ewastemonitor. (2024, March 20). The Global E-waste Monitor. E-Waste Monitor. https://ewastemonitor.info/the-global-e-waste-monitor-2024/

GHG Protocol Scopes and Emissions Across the Value Chain. (2024, February 6). Jeff Winter. https://www.jeffwinterinsights.com/insights/scope-emissions-overview

Greenhouse Gas Protocol. (2025). https://ghgprotocol.org/

How much water does AI consume? (2023, November 30). https://oecd.ai/en/wonk/how-much-water-does-ai-consume

Increase in Electricity Demand from Data Centers. (2024, December 20). Energy.Gov. https://www.energy.gov/articles/doe-releases-new-report-evaluating-increase-electricity-demand-data-centers

Measuring AI’s Energy/Environmental Footprint to Access Impacts. (2025). Federation of American Scientists. https://fas.org/publication/measuring-and-standardizing-ais-energy-footprint/

Texas data centers use 50 billion gallons of water. (2025). Newsweek. https://www.newsweek.com/texas-data-center-water-artificial-intelligence-2107500

The Importance of FLOPS. (2025). https://www.lenovo.com/us/en/glossary/flops/

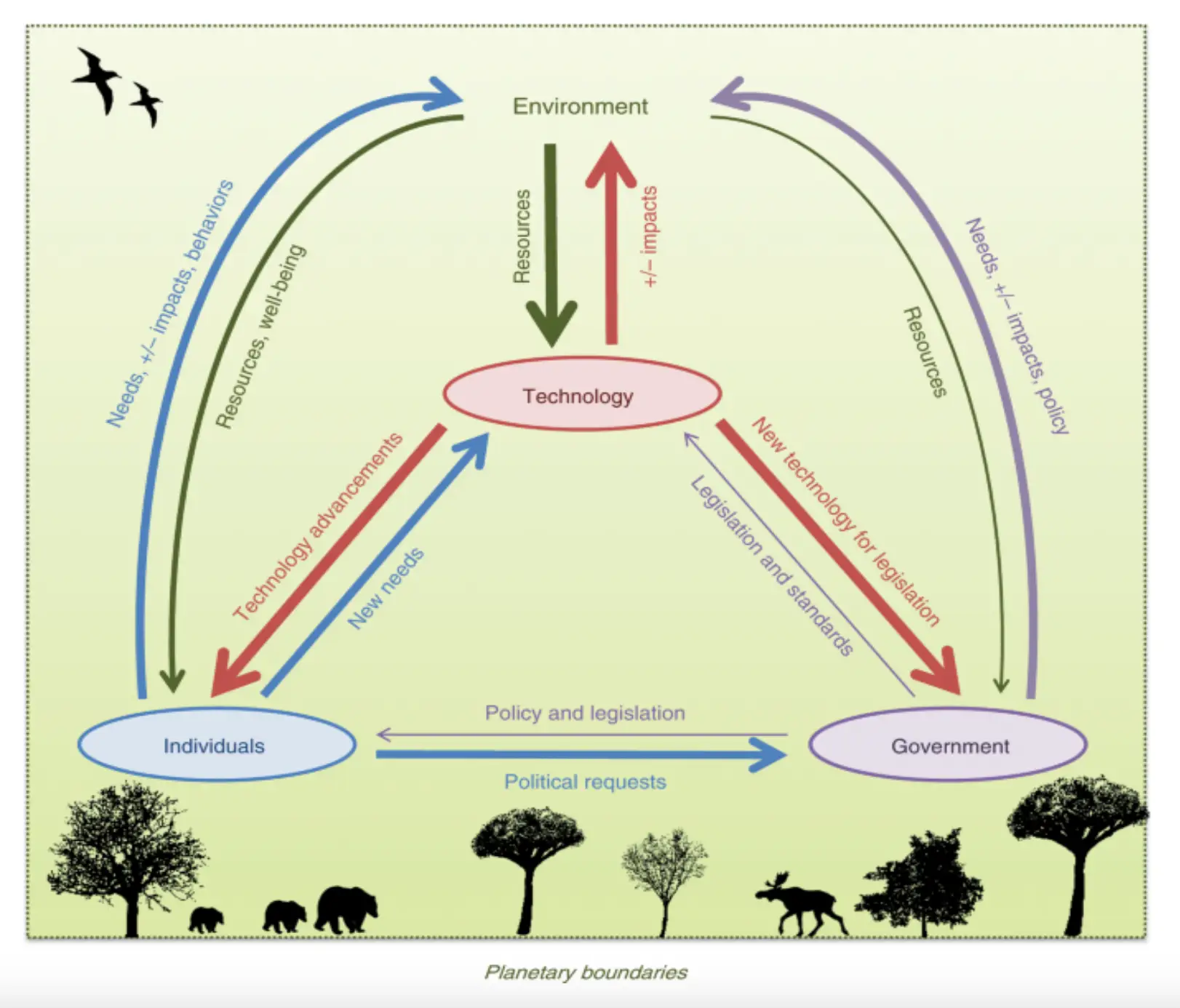

Vinuesa, R., Azizpour, H., Leite, I., Balaam, M., Dignum, V., Domisch, S., Felländer, A., Langhans, S. D., Tegmark, M., & Fuso Nerini, F. (2020). The role of artificial intelligence in achieving the Sustainable Development Goals. Nature Communications, 11(1), 233. https://doi.org/10.1038/s41467-019-14108-y

What is Power Usage Effectiveness? (2025). https://www.digitalrealty.com/resources/articles/what-is-power-usage-effectiveness

About the author

Stavros Farsedakis

Stavros’ academic interests center on computer science and artificial intelligence, especially exploring how technology impacts the environment. Outside the classroom, he enjoys coding projects and researching ways to make AI more sustainable and efficient.