Author: Vedanth Ramji

Mentor: Dr. Vincent Boudreau

APL Global School

Abstract

Advances in deep learning and computer vision have produced algorithms and techniques that can help us understand the content of images with greater accuracy, efficiency and precision than was previously attainable. Innovations in microscopy have also produced large microscope image data sets that require analysis, and here computer vision techniques have played a key role in revolutionising the field of microscopy and live-cell imaging.

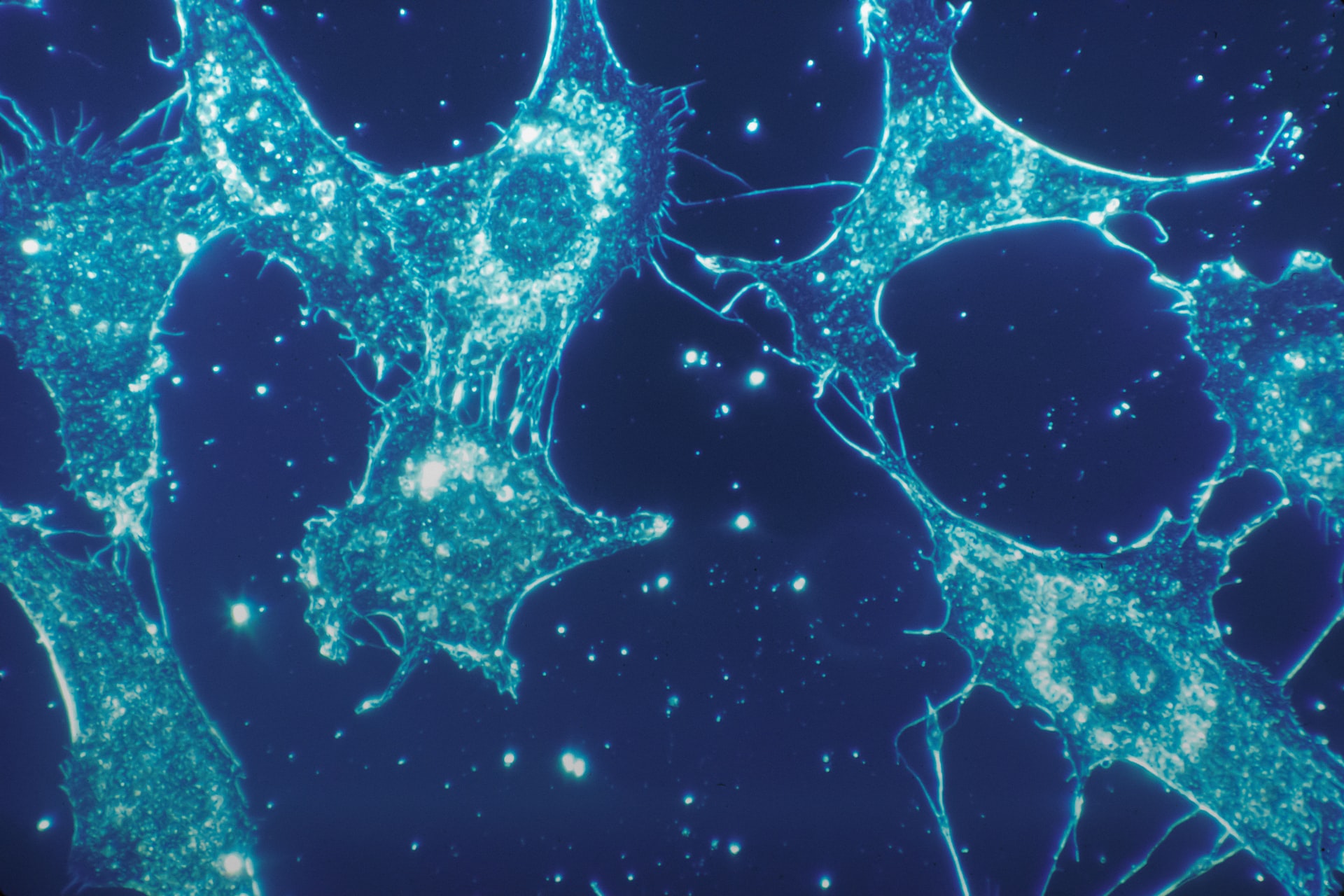

Live-cell imaging and image segmentation have played a crucial role in understanding biological problems such as transcription regulation in bacterial and eukaryotic cells (Van Valen et al., 2016). Image segmentation is an important component in most live-cell imaging experiments, as it allows us to identify unique parts of an image which, for instance, can be useful to analyse the behaviour of individual cells or to identify and differentiate between cells in close proximity. Computer vision techniques, such as deep convolutional neural networks (a supervised machine learning model), enhance image segmentation by reducing the manual curation of images, making it easier for labs to share solutions and significantly increasing segmentation accuracy.

In this paper, we will discuss how computer vision techniques are used to tackle problems in different aspects of live-cell imaging. We will also highlight how deep convolutional neural networks are used in image segmentation.

Introduction

Artificial intelligence has made data processing faster, more precise and more efficient, and it has proven to be a beneficial tool in many different fields. This is especially true in microscopy, particularly live-cell imaging. Live-cell imaging is a process by which living cells are imaged over time using light microscopy to understand biological systems in action. Computer vision, a subset of artificial intelligence that enables computers to extract meaningful information from images and videos, can be applied to microscope images or movies from live-cell imaging experiments to derive information about living systems.

A critical part of live-cell imaging data analysis is image segmentation. Image segmentation refers to partitioning an image into distinct and meaningful segments. Thresholding, Voronoi algorithms and the watershed transform are commonly used techniques for image segmentation (Moen et al., 2019). However, they share three principal challenges: curation time, segmentation accuracy and solution sharing. Because different research groups use highly specific combinations of these methods for their unique segmentation problems, it is difficult to share findings and solutions across labs. Many of these methods also require considerable manual curation, and even then the segmentation may be inaccurate. Deep convolutional neural networks address all three of these issues.
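To make the classical approach concrete, the sketch below (in Python with NumPy, an assumption since the paper itself contains no code) thresholds an image at a fixed intensity and then labels each 4-connected foreground region as one object. The function names `threshold` and `label_components`, the threshold level and the toy image are hypothetical illustrations, not a method taken from the cited work.

```python
from collections import deque

import numpy as np


def threshold(image: np.ndarray, level: float) -> np.ndarray:
    """Boolean foreground mask: True where the pixel intensity exceeds `level`."""
    return image > level


def label_components(mask: np.ndarray) -> tuple[np.ndarray, int]:
    """Give each 4-connected foreground region its own label 1..n (0 = background)."""
    labels = np.zeros(mask.shape, dtype=int)
    rows, cols = mask.shape
    n = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r, c] and labels[r, c] == 0:
                n += 1  # found an unlabelled foreground pixel: start a new object
                labels[r, c] = n
                queue = deque([(r, c)])
                while queue:  # breadth-first flood fill of the whole region
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny, nx] and labels[ny, nx] == 0):
                            labels[ny, nx] = n
                            queue.append((ny, nx))
    return labels, n


# Toy image: two bright objects on a dark background.
image = np.array([
    [0, 9, 9, 0, 0],
    [0, 9, 9, 0, 8],
    [0, 0, 0, 0, 8],
])
labels, count = label_components(threshold(image, level=5))
print(count)  # 2
```

Because touching cells share foreground pixels, they fall into a single connected region and are counted as one object. This is one reason such pipelines often need an extra splitting step (such as a watershed transform) or manual curation.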

Two other important tasks in live-cell image analysis are image classification and object tracking. Image classification is the task of assigning a meaningful label to an image. An example of image classification is using fluorescence to identify whether a protein is being expressed in the cytosol or the nucleus. Because many biological image classifiers share their architectures with commercial image classifiers, the challenge in image classification usually lies in procuring well-annotated biological datasets (Moen et al., 2019).

Object tracking is the task of following objects through a time-lapse movie. An example of object tracking is following single cells in a live-cell imaging movie as they exhibit a phototropic response. Object tracking is often challenging: cells must be present, identified and differentiated in every frame of the movie, while phototoxicity and photobleaching limit the frame rate and clarity of the movie. However, successful solutions have made object tracking useful for understanding bacterial cell growth and cell motility (Moen et al., 2019; Kimmel, Chang, Brack & Marshall, 2018).
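One simple way to link cells between two consecutive frames is to match their centroids by distance. The sketch below assumes each frame has already been segmented into cell centroids (one (x, y) row per cell) and greedily accepts the closest pairs first. The function `link_frames` and its `max_dist` cut-off are hypothetical, and the greedy rule is a simplification chosen for brevity, not the method used in the cited studies.

```python
import numpy as np


def link_frames(prev: np.ndarray, curr: np.ndarray, max_dist: float) -> dict[int, int]:
    """Greedily link cell centroids between two consecutive frames.

    `prev` (n x 2) and `curr` (m x 2) hold one centroid per row. Returns
    {index in prev: index in curr}. Closest pairs are accepted first, each
    cell is linked at most once, and pairs farther apart than `max_dist`
    stay unlinked (e.g. a cell that left the field of view or was missed).
    """
    # Pairwise Euclidean distances between every old and new centroid, shape (n, m).
    dists = np.linalg.norm(prev[:, None, :] - curr[None, :, :], axis=-1)
    candidates = sorted(
        (dists[i, j], i, j) for i in range(len(prev)) for j in range(len(curr))
    )
    links: dict[int, int] = {}
    taken: set[int] = set()
    for d, i, j in candidates:
        if d > max_dist:
            break  # remaining candidates are even farther apart
        if i not in links and j not in taken:
            links[i] = j
            taken.add(j)
    return links


prev = np.array([[10.0, 10.0], [50.0, 50.0]])
curr = np.array([[52.0, 49.0], [11.0, 12.0], [90.0, 5.0]])
print(link_frames(prev, curr, max_dist=5.0))  # {0: 1, 1: 0}; curr[2] is a new cell
```

Greedy matching can make poor choices when cells are crowded; more robust trackers typically treat frame-to-frame linking as a global assignment problem instead.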

Deep convolutional neural networks, or conv-nets, are supervised machine learning models that can be used to solve large-scale image segmentation problems. Supervised machine learning models are trained on well-defined, labelled data sets and can then be applied to new data (Supervised Learning, 2020). Conv-nets have been shown to be highly useful for image segmentation and can be applied to many different problems, from cell type prediction to quantifying localization-based kinase reporters in mammalian cells (Van Valen et al., 2016).

Discussion

Augmented Microscopy

Another major application of computer vision in live-cell imaging is augmented microscopy. Augmented microscopy refers to extracting information that is latent in biological images. An example of augmented microscopy is identifying the locations of cell nuclei and other large structures from bright-field microscope images. Augmented microscopy is not limited to such predictions, however: it can also be used to improve image resolution and minimise phototoxicity in real time (Moen et al., 2019; see also Sullivan & Lundberg, 2018).

Mathematical Construct of Conv-nets

Conv-nets are composed of two main components: dimensionality reduction and classification (Van Valen et al., 2016). Dimensionality reduction constructs a lower-dimensional representation of an input image using three key operations. First, denoting the input image as $I$, we convolve it with a set of filters $\{f_1, \dots, f_n\}$ to obtain the filtered images $\{I * f_1, \dots, I * f_n\}$. Second, a transfer function $t(x)$ is applied to each filtered image to produce a set of feature maps $\{t(I * f_1), \dots, t(I * f_n)\}$. For example, the rectified linear unit, defined as $\mathrm{relu}(x) = \max(0, x)$, can be used as the transfer function. The third operation is optional: it scales the feature maps down to a smaller spatial scale by replacing each section of a feature map (for example, each 2×2 block of pixels) with the largest pixel value in that section (Convolutional Implementation of the Sliding Window Algorithm, n.d.). This is known as max pooling. The final output of dimensionality reduction (assuming max pooling was performed) is a set of reduced feature maps.
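The three operations can be sketched directly in NumPy. This is a minimal illustration under stated assumptions: "valid" convolution with stride 1 and no padding, relu as the transfer function $t(x)$, and non-overlapping 2×2 max pooling. The function names are hypothetical, and the filters in the usage example are hand-picked, whereas a conv-net learns its filters from training data.

```python
import numpy as np


def convolve2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D convolution I * f (stride 1, no padding)."""
    k = np.flip(kernel)  # true convolution flips the filter in both axes
    kh, kw = k.shape
    out = np.empty((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * k)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Transfer function t(x) = max(0, x), applied element-wise."""
    return np.maximum(0, x)


def max_pool(fmap: np.ndarray, size: int = 2) -> np.ndarray:
    """Replace each non-overlapping size x size block with its largest value.
    Rows/columns that do not fill a whole block are dropped."""
    h = fmap.shape[0] // size * size
    w = fmap.shape[1] // size * size
    blocks = fmap[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


def reduce_dimensions(image: np.ndarray, filters: list[np.ndarray]) -> list[np.ndarray]:
    """I -> {I * f_i} -> {t(I * f_i)} -> max-pooled (reduced) feature maps."""
    return [max_pool(relu(convolve2d(image, f))) for f in filters]


image = np.arange(16, dtype=float).reshape(4, 4)
maps = reduce_dimensions(image, [np.array([[1.0]]), np.array([[-1.0]])])
print(maps[0].tolist())       # [[5.0, 7.0], [13.0, 15.0]]
print(np.all(maps[1] == 0))   # True: relu removes the all-negative responses
```

Most deep-learning libraries actually compute cross-correlation (the filter is not flipped). Because the filters are learned, this distinction does not change what a conv-net can represent.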

The second component of a conv-net, classification, uses a neural network that takes the reduced feature maps and assigns them a class label. It combines matrix multiplication with a transfer function to construct a nonlinear mapping from the feature maps to the class labels.
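A classifier of the kind described above can be sketched as two matrix multiplications with a relu in between. The final softmax, which turns scores into class probabilities, is an assumption not mentioned in the text, as are the layer sizes and random weights in the usage example; in a real conv-net the weights are learned during training. The three outputs could stand for the three pixel classes used for segmentation (cell interior, cell boundary, non-cell).

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Turn raw class scores into probabilities that sum to 1."""
    e = np.exp(z - z.max())  # subtracting the max avoids overflow
    return e / e.sum()


def classify(feature_maps, W1, b1, W2, b2) -> np.ndarray:
    """Nonlinear mapping from reduced feature maps to class probabilities:
    matrix multiplication -> relu -> matrix multiplication -> softmax."""
    x = np.concatenate([f.ravel() for f in feature_maps])  # flatten all maps into one vector
    hidden = np.maximum(0, W1 @ x + b1)                     # hidden layer with relu
    return softmax(W2 @ hidden + b2)                        # one probability per class


rng = np.random.default_rng(0)
feature_maps = [rng.random((2, 2)) for _ in range(3)]  # e.g. three 2x2 reduced maps
W1, b1 = rng.normal(size=(8, 12)), np.zeros(8)           # 12 = 3 maps x 4 values each
W2, b2 = rng.normal(size=(3, 8)), np.zeros(3)            # 3 output classes
probs = classify(feature_maps, W1, b1, W2, b2)
print(probs)  # three non-negative values that sum to 1
```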

Realizing Image Segmentation as Image Classification

For live-cell imaging, we may manually annotate each pixel of a microscope image as cell interior, cell boundary or non-cell. By sampling a small region around each pixel and labelling that region with the class of its central pixel, we can create a training data set. The task of image segmentation is thereby reduced to obtaining a classifier that can distinguish between the three types of pixels, converting image segmentation into image classification. The trained classifier can then be applied to other data sets.
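The construction of the training set can be sketched as follows. This is a minimal illustration assuming square patches with a hypothetical `half_width`, reflection padding at the image border, and hypothetical integer codes for the three classes; the toy image and annotation are invented for the example.

```python
import numpy as np

# Hypothetical integer codes for the three manually annotated pixel classes.
NON_CELL, BOUNDARY, INTERIOR = 0, 1, 2


def make_training_set(image: np.ndarray, annotation: np.ndarray, half_width: int):
    """Pair a (2*half_width + 1)-pixel square patch around every pixel with
    that pixel's manual class. The image is padded by reflection so that
    pixels on the border also get full-size patches."""
    padded = np.pad(image, half_width, mode="reflect")
    size = 2 * half_width + 1
    patches, labels = [], []
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            # Pixel (r, c) sits at (r + half_width, c + half_width) in `padded`.
            patches.append(padded[r:r + size, c:c + size])
            labels.append(annotation[r, c])
    return np.stack(patches), np.array(labels)


image = np.arange(20, dtype=float).reshape(4, 5)
annotation = np.full((4, 5), NON_CELL)
annotation[1:3, 1:4] = BOUNDARY
annotation[2, 2] = INTERIOR
X, y = make_training_set(image, annotation, half_width=1)
print(X.shape, y.shape)  # (20, 3, 3) (20,)
```

Any image classifier can then be trained on the pairs `(X, y)`. In practice one might subsample the patches, since non-cell pixels often greatly outnumber boundary pixels.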

Work from Van Valen and colleagues (2016) shows that conv-nets can precisely segment mammalian and bacterial cells when properly trained. They showed that conv-nets can reliably segment the following cell types and lines: E. coli (bacterial cells), MCF10A (human breast cells), HeLa-S3, RAW 264.7 (monocytes) and BMDMs (bone marrow-derived macrophages). Conv-nets can therefore be used on a wide variety of cells, which makes them a convenient solution for segmenting microscope images.

Using Conv-nets to Analyse Bacterial Growth

Single-cell bacterial growth curves have played a crucial role in our understanding of concepts such as metabolic co-dependence in biofilms. The data for these growth curves have traditionally been obtained by imaging microcolonies growing on a medium such as agar, or by measuring bulk populations of cells in a Coulter counter. It is challenging to separate and identify individual cells within a microcolony because the growing cells are packed closely together and, more importantly, because of the limited resolution of traditional methods. As more single-cell information is collected, the data sets grow more complex, and image segmentation becomes essential for following large numbers of single cells over many frames of a time-lapse movie.

Conv-nets can segment these images effectively. This allows us to analyse time-lapse movies of growing cells and track the growth of each cell by measuring the increase in the area it occupies in the image. These growth curves can then be used to compute instantaneous growth rates for single cells. Van Valen et al. (2016) also used them to create a spatial heat map colored according to the growth rates. Conv-nets therefore allow the quantitative measurement of single-cell dynamics at high resolution. Spatial heat maps can also provide deeper insights into how individual cells grow, such as the relationship between a cell's position and its growth rate.
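Assuming each cell's area (in pixels) has been measured in every frame, a common definition of the instantaneous growth rate is $\frac{1}{A}\frac{dA}{dt} = \frac{d \ln A}{dt}$. The sketch below estimates it with finite differences of the log-area and then paints per-cell rates into a segmentation label image to form a spatial heat map. The function names, the frame times and the exact exponential test data are illustrative assumptions; Van Valen et al. (2016) may have computed growth rates differently.

```python
import numpy as np


def instantaneous_growth_rate(times: np.ndarray, areas: np.ndarray) -> np.ndarray:
    """Estimate (1/A) dA/dt = d(ln A)/dt at each time point from one cell's
    area trajectory, using finite differences of the log-area
    (central differences in the interior, one-sided at the ends)."""
    return np.gradient(np.log(areas), times)


def growth_rate_map(labels: np.ndarray, rate_per_cell: dict[int, float]) -> np.ndarray:
    """Spatial heat map: paint every pixel of each segmented cell (label > 0)
    with that cell's growth rate; background pixels are NaN."""
    heat = np.full(labels.shape, np.nan)
    for cell_id, rate in rate_per_cell.items():
        heat[labels == cell_id] = rate
    return heat


times = np.arange(0.0, 60.0, 10.0)     # minutes (hypothetical frame times)
areas = 100.0 * np.exp(0.02 * times)   # pixels; exact exponential growth for illustration
print(instantaneous_growth_rate(times, areas))  # about 0.02 per minute at every time point
```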

Conv-nets and Predicting Cell Types

Semantic segmentation is the process by which each pixel in an image is labelled with a corresponding class (Jordan, 2018). It predicts what an image contains while segmenting the image itself. Researchers have shown that conv-nets can perform semantic segmentation if they are modified to recognize differences between the intracellular structures of two different cell types.

For example, NIH-3T3 and MCF10A cells show different morphologies under phase contrast. Work from Van Valen and colleagues (2016) demonstrated that increasing the number of classes the conv-net detects from three to four enables it to recognize the differences between the interiors of these two cell types. A training data set was created from images of NIH-3T3 and MCF10A cells, each stained with the nuclear marker Hoechst 33342. A segmentation mask can then be created to differentiate between the two cell lines, and the conv-net can be applied to other datasets. Previous experiments using the same method have shown that conv-nets reach an accuracy of 95% when differentiating between NIH-3T3 and MCF10A cells.
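Turning a four-class prediction into a cell-type call can be sketched as a per-pixel argmax followed by a per-cell majority vote. The source does not say how the four classes are arranged, so the ordering below (background, boundary, NIH-3T3 interior, MCF10A interior) is an assumption, as is the availability of a separate instance segmentation (`cells`). The function names are hypothetical, and majority voting is an illustrative choice, not necessarily how the 95% figure was computed.

```python
import numpy as np

# Hypothetical ordering of the four output classes; the source does not specify it.
CLASSES = ("background", "boundary", "NIH-3T3 interior", "MCF10A interior")


def semantic_mask(probs: np.ndarray) -> np.ndarray:
    """probs has shape (4, H, W); return the most probable class index per pixel."""
    return probs.argmax(axis=0)


def call_cell_types(mask: np.ndarray, cells: np.ndarray) -> dict[int, str]:
    """Assign each segmented cell (label > 0 in `cells`) the interior class that
    covers most of its pixels; boundary/background pixels are ignored and
    ties (arbitrarily) go to NIH-3T3."""
    calls = {}
    for cell_id in np.unique(cells):
        if cell_id == 0:
            continue
        pixels = mask[cells == cell_id]
        n_3t3 = np.count_nonzero(pixels == 2)
        n_mcf = np.count_nonzero(pixels == 3)
        calls[int(cell_id)] = CLASSES[2] if n_3t3 >= n_mcf else CLASSES[3]
    return calls


target = np.array([[2, 2, 3], [2, 3, 3]])
probs = np.eye(4)[target].transpose(2, 0, 1)  # one-hot "predictions", shape (4, 2, 3)
cells = np.array([[1, 1, 2], [1, 2, 2]])      # instance labels from segmentation
print(call_cell_types(semantic_mask(probs), cells))
# {1: 'NIH-3T3 interior', 2: 'MCF10A interior'}
```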

This capability could have a wide range of uses, such as aiding diagnosis and furthering our understanding of cellular dynamics in tissues.

Conclusion

Computer vision has proven to be a crucial tool for analysing microscope images and it will certainly be a very important tool for further studies. More efforts to create larger curated datasets of biological images would greatly improve existing computer vision and deep learning tools for live-cell imaging.

Conv-nets are already able to segment a wide variety of cells, and with time they will be trained to segment more. This offers researchers a convenient way to quickly segment different types of cells. Conv-nets have also been shown to be precise, and they allow labs to share their work with one another more easily, because a single conv-net approach generalises across segmentation problems that previously each required a highly specific method. Conv-nets and other computer vision tools are also becoming more accessible thanks to deep learning libraries such as Keras and TensorFlow, so applying them should become easier over time. However, as the complexity of microscopy datasets grows, conv-nets may become less effective. Techniques to optimise conv-nets, and other methods such as data-frugal deep learning, could help address this (Landram, 2022).

Computer vision will certainly be one of the main driving forces of new microscopy discoveries and will have a transformative impact on the field.

References

- Van Valen, D. A., Kudo, T., Lane, K. M., Macklin, D. N., Quach, N. T., DeFelice, M. M., et al. (2016). Deep learning automates the quantitative analysis of individual cells in live-cell imaging experiments. PLOS Computational Biology, 12(11), e1005177. https://doi.org/10.1371/journal.pcbi.1005177

- Moen, E., Bannon, D., Kudo, T., et al. (2019). Deep learning for cellular image analysis. Nature Methods, 16, 1233–1246. https://doi.org/10.1038/s41592-019-0403-1

- Supervised Learning. (2020, August 19). IBM Cloud Learn Hub. Retrieved August 7, 2022, from https://www.ibm.com/cloud/learn/supervised-learning

- Convolutional implementation of the sliding window algorithm. (n.d.). Medium. Retrieved August 7, 2022, from https://medium.com/ai-quest/convolutional-implementation-of-the-sliding-window-algorithm-db93a49f99a0

- Landram, K. (2022, January 6). Data-frugal deep learning optimizes microstructure imaging. Carnegie Mellon University. Retrieved August 7, 2022, from http://www.cmu.edu/news/stories/archives/2022/january/deep-learning.html

- Jordan, J. (2018, May 21). An overview of semantic image segmentation. Jeremy Jordan. Retrieved August 7, 2022, from https://www.jeremyjordan.me/semantic-segmentation/

- Sullivan, D. P., & Lundberg, E. (2018). Seeing More: A Future of Augmented Microscopy. Cell, 173(3), 546–548. https://doi.org/10.1016/j.cell.2018.04.003

- Kimmel, J., Chang, A., Brack, A., & Marshall, W. (2018). Inferring cell state by quantitative motility analysis reveals a dynamic state system and broken detailed balance. PLOS Computational Biology, 14(1), e1005927. https://doi.org/10.1371/journal.pcbi.1005927

About the author

Vedanth Ramji

Vedanth is currently a sophomore at APL Global School, Thoraipakkam, Tamil Nadu, India. He is passionate about science and technology and wants to work at the intersection of the natural sciences, math and computer science to explore possible solutions to present-day challenges. Programming is of great interest to Vedanth, and he enjoys creating interdisciplinary coding projects.