Author: Kedaar Rentachintala

BASIS Independent Silicon Valley

Abstract

Autism is one of the most prominent developmental disorders in the world today, affecting communication, the reading of social cues, learning, and other everyday tasks many people take for granted. A lack of emotional awareness often makes it harder to build relationships, succeed in jobs and job interviews, and comprehend lessons (Ohl et al. 2017). Although researchers have conducted many experiments and analyses regarding facial emotions, few have delved deeply into body language and the associated cues that form the basis of social and emotional awareness. To address this gap, we used the iMiGUE dataset, a collection of over 350 post-match tennis interviews annotated with player emotional states and micro gestures, the minuscule actions players performed over a span of frames. After extracting an image for each labeled video frame and training a ResNet50 architecture on those images, we found that our model obtained favorable results for the surprise and sadness-correlated images. At the same time, it produced less accurate results for happy, fearful, uncomfortable, and focused images. To improve these results and make our product a universally effective tool, we plan on adding more training data and evaluating other model architectures.

1. Introduction

Society has consistently maintained a stigma towards people with disabilities. However, for those on the more severe end of the autism spectrum, that stigma tends to be higher, primarily because autistic students and adults have poor social awareness and decision-making. A core component of autism is decreased social awareness and aversion to standard communication measures like eye contact and speaking face to face. Those social impediments have immense consequences throughout life. Children have difficult experiences making friends and building lasting relationships, while older students have trouble understanding lecture material and asking for help when needed. Adults with autism spectrum disorder (ASD) experience high unemployment and underemployment rates compared to adults with other disabilities or no disabilities (Ohl et al. 2017).

Therapy and general medical aid are expensive even for families with access to them, with the most cost-effective form of cognitive behavioral therapy ranging from $7,300 to $9,330 per year (Crow et al. 2009). Furthermore, in the current treatment space, costly therapists hold sessions for only a small subset of the autistic population. Meanwhile, underprivileged households rarely have access to accredited facilities with licensed therapists. The cost, the travel time to and from a therapist’s office, and the parental commitment in and out of the home associated with traditional behavioral therapy are tremendous and avoidable.

In order to close this socioeconomic gap and allow those with autism and other social awareness-impeding conditions to achieve their goals, we developed a machine learning-based tool with a clear goal in mind: take in various common body language micro gestures (crossing hands, fidgeting) and identify the emotions (happy, sad, mad, disgusted) to which those gestures correspond. We chose body language as an emotion prediction mechanism because little research exists regarding emotion detection through gestures rather than the face. We also decided to focus on younger children, given that younger students have an easier time overcoming their autistic tendencies and becoming more socially aware, the premise of what is commonly referred to as early intervention (Landa, 2018). The general approach to this project was to implement a ResNet50 architecture and train it with images obtained from post-match tennis interviews. We adapted an existing Kaggle FER-2013 emotion detection model (Shakir, 2022) to suit our needs. We obtained our initial data of YouTube video links and frame durations from the iMiGUE dataset, as described by Liu et al. (2021). Our model obtained favorable results for the surprise and sadness-correlated images, while it produced less accurate results for happy, fearful, uncomfortable, and focused images.

To improve the accuracy of our ResNet50 model, we will train our model with more data from various settings and subjects and use different model frameworks like VGG-16. New settings would allow our project to become more commercially usable, given that new environments for training images would be more representative of a household or user’s location.

2. Background and Significance

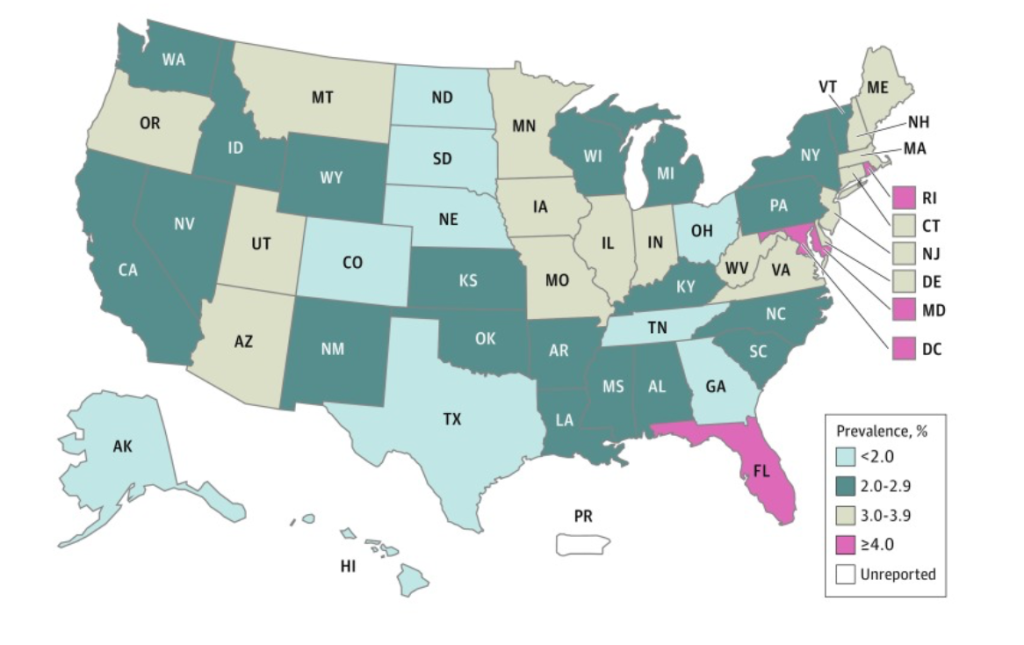

Autism spectrum disorder (ASD) is a complex brain condition caused by many factors ranging from the environment to genetics. The Centers for Disease Control and Prevention (CDC) stated that 1 in 68 children are born with autism, which is more common in males by a four-to-one ratio. Because the condition has no diagnostic criteria, behavioral therapists and doctors examine patient actions and general behavioral trends to identify a potential cause (Amaral 2017). Characterized by poor social interaction and repetitive behavior, ASD has a complex and intriguing genetic makeup, with a convoluted familial inheritance pattern and nearly 1000 genes contributing to its rise (Ramaswami et al., 2018). As a neurodevelopmental disorder often referred to as a pervasive developmental disorder (PDD), ASD can also involve restricted and stereotyped patterns of behaviors or interests (Faras et al. 2010). Using recent genomic techniques like microarray and next-generation sequencing (NGS), researchers have analyzed the genes in question to identify variance and clarify potential causes. Furthermore, many institutions have conducted exome sequencing analyses and general genome sequencing to identify mutations and create genomic datasets (Choi et al., 2021). Recently, researchers discovered over 36 de novo variants in the genetic makeup of individuals with autism, using Ingenuity Pathway Analysis software to construct networks, identify those autism-related genes, and find relationships among them, especially among six genetic networks (Kim et al. 2020).

Although researchers are still looking into official environmental causes, some researchers have found correlations between autism and parental age, assisted reproductive technology, nutrition, maternal infections and diseases, toxicants and general environmental chemicals, and medications (Gialloretti et al. 2019). German measles and similar diseases are related to the rise of ASD. However, influenza and other widely infectious diseases do not correlate. Furthermore, drugs used during the first and second trimesters of pregnancy, specifically serotonin reuptake inhibitors for depression treatment, are directly linked to autism. Researchers have investigated automobile pollution and cigarette smoke, among other environmental chemicals. However, more research must be conducted before an official conclusion can be made (Amaral 2017). As big data and molecular biology develop, scientists are continuously coming up with new ways to understand the genetic makeup of autistic patients to make better predictions about the origins of autism and its subsequent development (Gialloretti et al., 2019).

Autism may be chronic, but some treatments can weaken its impact. The most effective therapy method is intensive behavioral intervention that improves the functioning of the child in question. These therapy sessions focus on language practice, social responsiveness, imitation, and etiquette. Examples of these therapy routines are the ABA (Applied Behavioral Analysis) and TEACCH (Treatment and Education of Autistic and Related Communication Handicapped Children) approaches (Faras et al. 2010). Furthermore, the UCLA Lovaas model and Early Start Denver Model (ESDM) have been shown to improve children’s cognitive performance, language, and socially adaptive behavior. The drugs risperidone and aripiprazole also improve behaviors like distress, aggression, self-injury, and hyperactivity but carry more harms than benefits. Intervention appears beneficial for children under 2, but more studies must occur before that conclusion can be considered established (Warren et al., 2011).

2.1 Definitions

2.1.1 Data Augmentation – Data Augmentation is a critical tool for developing comprehensive deep learning classification-oriented models, especially when there is little data (Aryan and Unver, 2018). As its name suggests, data augmentation creates various copies of existing data by rotating, shifting, flipping, and zooming images to specified requirements. These copies form a more extensive dataset and help create a more robust model.
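The transformations named above can be sketched in a few lines. The following is a minimal illustration only, assuming a grayscale image stored as nested Python lists; the function names (`flip_horizontal`, `shift_right`, `rotate_90`, `augment`) are hypothetical, zooming is omitted for brevity, and this is not the augmentation script used in the project.

```python
import random

# A grayscale image as rows of pixel intensities (assumption for this sketch).
Image = list[list[int]]


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def shift_right(img: Image, pixels: int, fill: int = 0) -> Image:
    """Shift every row right by `pixels`, padding the left edge with `fill`."""
    if pixels <= 0:
        return [row[:] for row in img]
    return [
        [fill] * min(pixels, len(row)) + row[: max(len(row) - pixels, 0)]
        for row in img
    ]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def augment(img: Image, copies: int, seed: int = 0) -> list[Image]:
    """Create `copies` variants, each produced by one randomly chosen transform."""
    rng = random.Random(seed)
    transforms = [flip_horizontal, rotate_90, lambda im: shift_right(im, 1)]
    return [rng.choice(transforms)(img) for _ in range(copies)]


sample = [[1, 2], [3, 4]]
variants = augment(sample, copies=5)
```

Each variant keeps the label of the source image, which is how augmentation enlarges a small labeled dataset without new annotation work.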

2.1.2 Gamify – Gamification refers to designing an application or tool that incorporates elements of gameplay. Examples of such elements include competition, a point system, and universal rules or regulations.

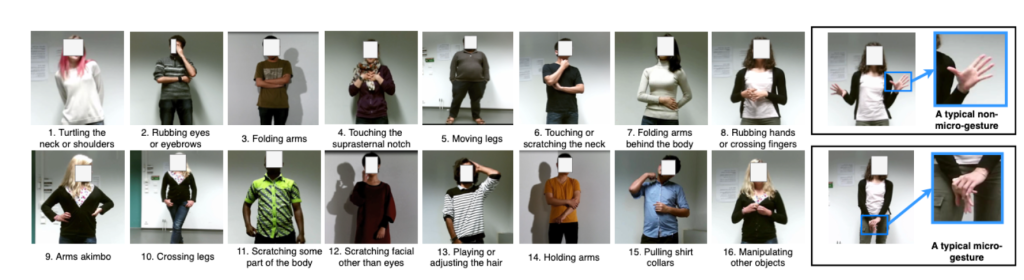

2.1.3 Micro Gesture – Per Liu et al. (2021), a micro gesture is a gesture that is unknowingly or subconsciously emitted. Rather than an intentional wave, symbolizing greeting or departure, a micro gesture would be covering the face in sadness or crossing the arms to portray a feeling of anger. Viewers can gather hidden emotions from the subjects that are revealed through these micro gestures, allowing that subject to give off genuine feelings and appear vulnerable.

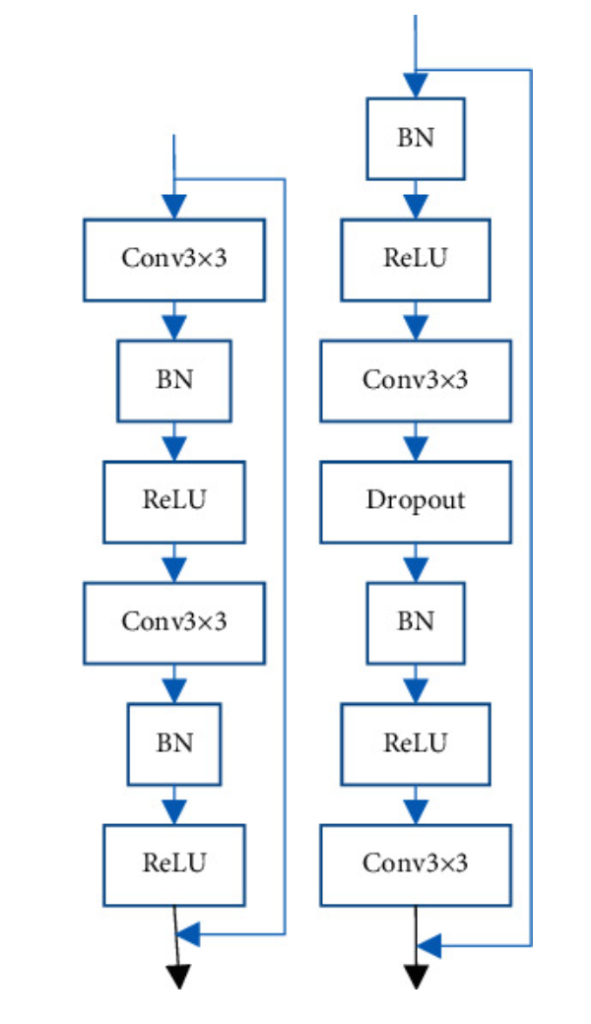

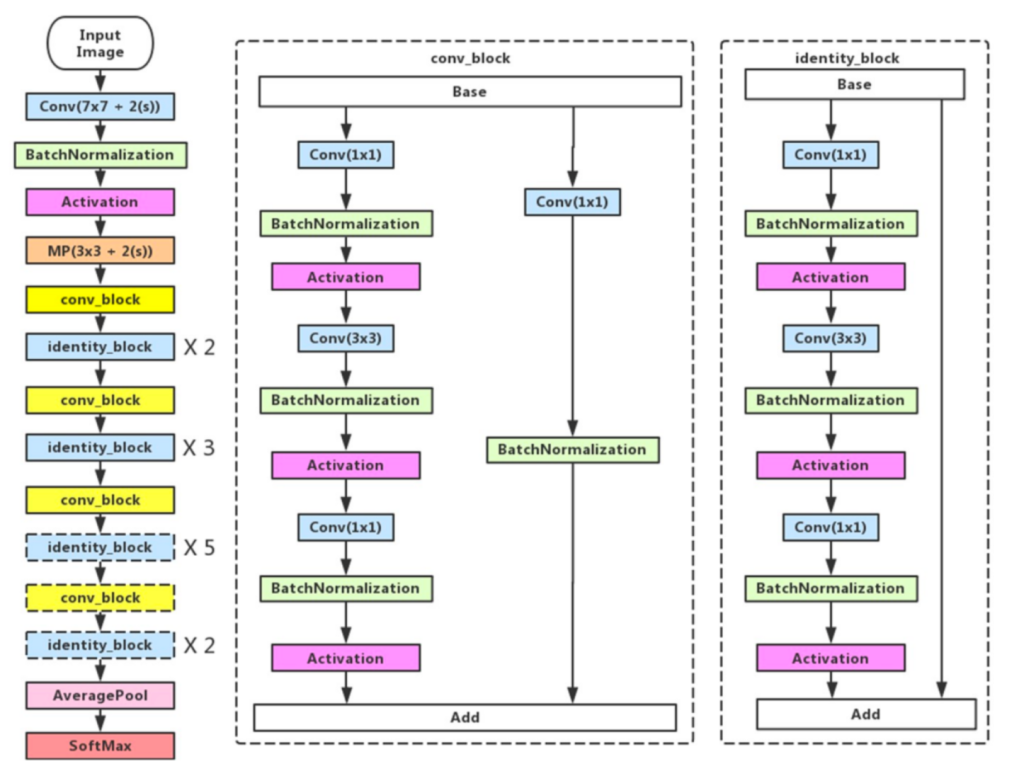

2.1.4 ResNet50 Architecture – As stated by Liu et al. (2021), deep neural networks can fail to achieve their full potential due to vanishing gradient and saddle point problems. ResNet50 addresses those issues with residual (shortcut) connections that let the network train efficiently at greater depth. As Wen et al. (2019) state, a ResCNN adds shortcuts that skip several blocks of convolutional layers to overcome vanishing or exploding gradients. Zaeemzadeh et al. (2021) show that networks of nearly 1,000 layers increased accuracy using a ResNet architecture.
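The shortcut idea can be shown with a toy example. This is a minimal sketch, assuming plain Python lists in place of tensors and arbitrary stand-in functions in place of convolutional layers; `residual_block`, `halve`, and `zero` are hypothetical names, not part of ResNet50 or any library.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def residual_block(x: Vector, transform: Callable[[Vector], Vector]) -> list[float]:
    """Return transform(x) + x, the shortcut form y = F(x) + x."""
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("the shortcut needs input and output of the same size")
    return [f + xi for f, xi in zip(fx, x)]


def halve(x: Vector) -> list[float]:
    """Stand-in for a learned layer stack F."""
    return [0.5 * v for v in x]


def zero(x: Vector) -> list[float]:
    """A layer stack that has learned nothing useful."""
    return [0.0 for _ in x]


# With F(x) = 0 the block passes its input through unchanged.
passthrough = residual_block([1.0, 2.0], zero)
combined = residual_block([2.0, 4.0], halve)
```

Because the input is added back to the output, a block whose layers contribute nothing still acts as an identity mapping, which is one intuition for why stacking more residual blocks does not have to degrade the network.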

2.2 Relevant Project Resources

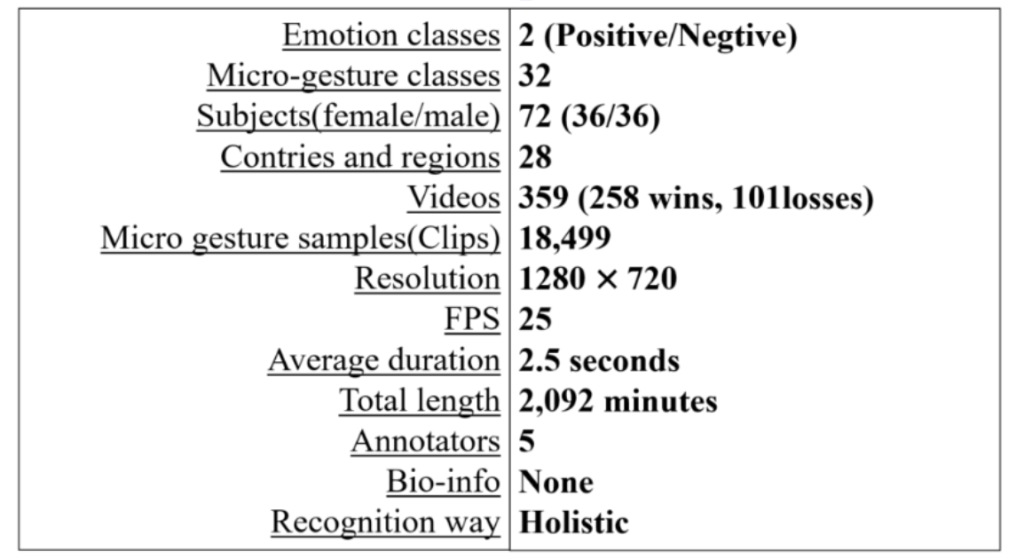

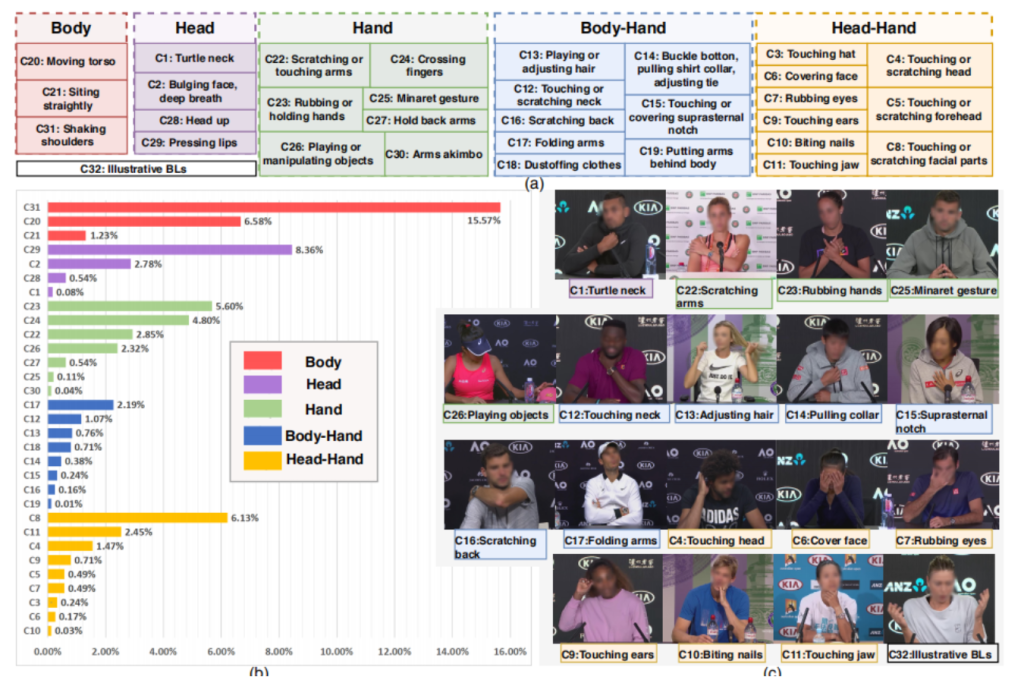

2.2.1 iMiGUE Dataset – Introduced by Liu et al. (2021), the iMiGUE dataset is an identity-free dataset that focuses its work on micro gestures. iMiGUE is unique because it focuses on nonverbal cues without facial emotions while existing emotion detection focuses on the face. The dataset contains over 350 YouTube tennis post-match links and various start and end frame stats labeled by a particular class. For instance, the dataset would label frames 250 to 300 as certain behaviors. We then classified those behaviors into the certain emotions they best represented. For instance, we consider crossing arms an angry pose, while covering the face would be sad.

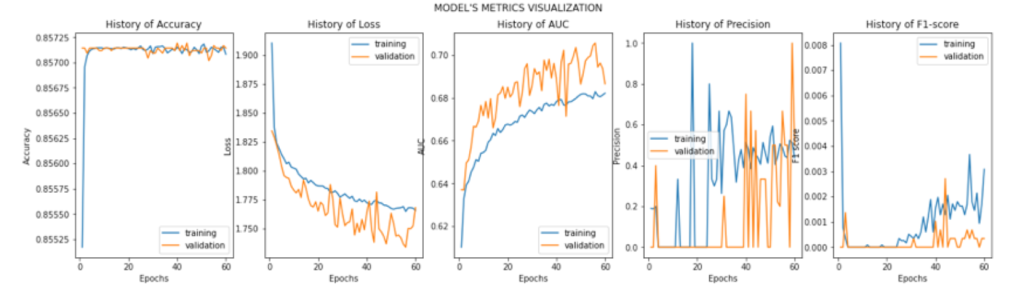

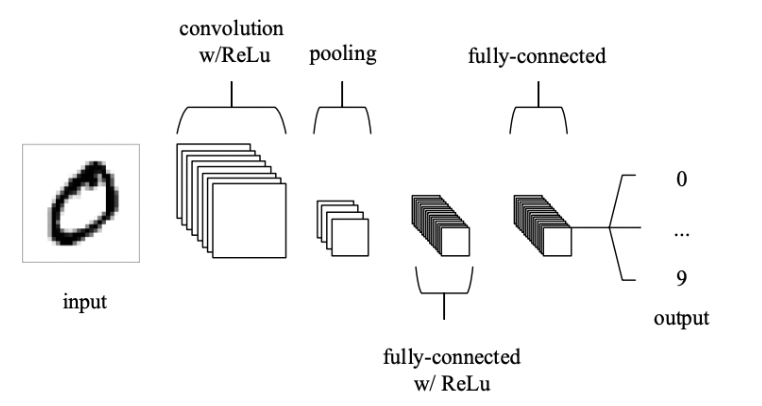

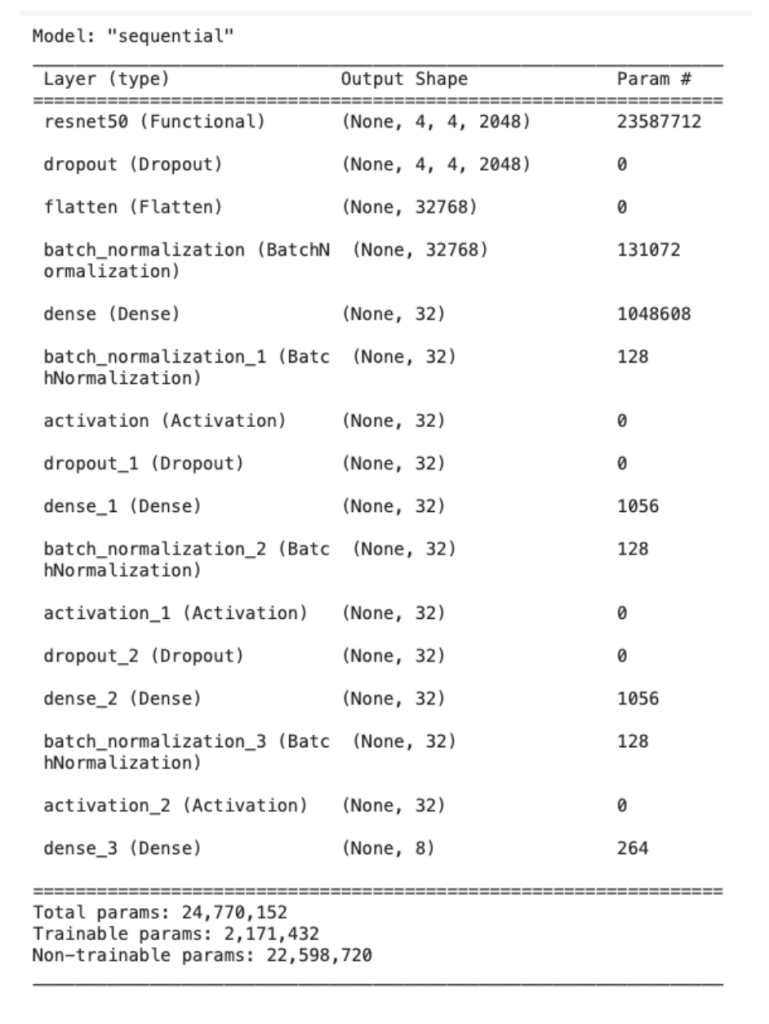

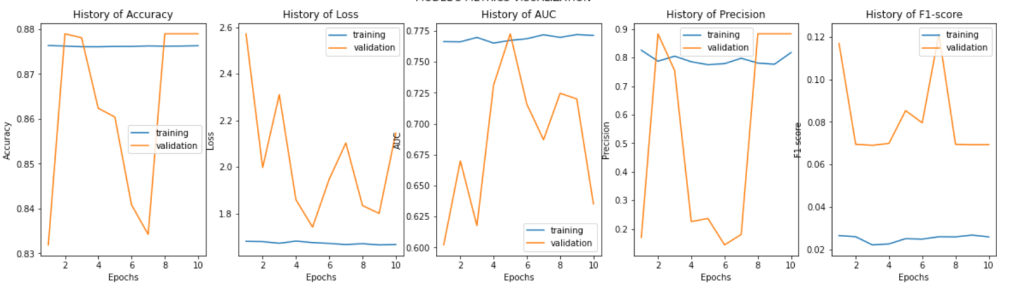

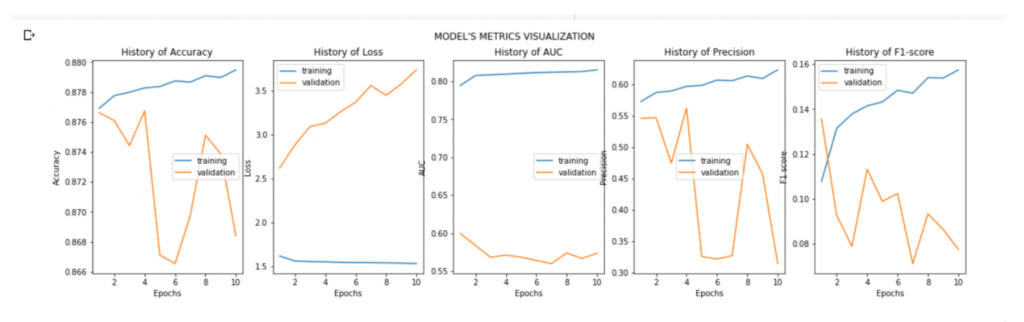

2.2.2 Kaggle FER-2013 Model – The model we implemented was originally developed for a Kaggle dataset named FER-2013. The FER-2013 dataset contains seven emotions (angry, disgusted, fearful, happy, neutral, sad, surprised), with each image saved as a 48×48 pixel grayscale item. The dataset contains over 35,685 images. Our model was adapted from the detection solution of Shakir (2022). The pipeline loaded the data, applied data augmentation to the training and validation sets, froze the last four layers, created the model, compiled and trained it, and displayed the output, basing its results on accuracy, precision, recall, AUC, and F1 score. The output is displayed below.
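To make the relationship between several of these metrics concrete, here is a minimal sketch that computes accuracy and per-class precision, recall, and F1 score from label lists. The function names and the example labels are hypothetical and chosen only for illustration; this is not the evaluation code from the Kaggle model, and AUC is left out because it needs predicted probabilities rather than hard labels.

```python
from typing import Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall_f1(
    y_true: Sequence[str], y_pred: Sequence[str], positive: str
) -> tuple[float, float, float]:
    """Precision, recall, and F1 for one class treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical labels, not results from this study.
truth = ["sad", "sad", "angry", "happy"]
predicted = ["sad", "angry", "angry", "sad"]
overall = accuracy(truth, predicted)
sad_scores = precision_recall_f1(truth, predicted, positive="sad")
```

Reporting the per-class scores alongside accuracy matters for a multi-class emotion task, since a model can reach high accuracy while performing poorly on the less frequent classes.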

3. Related Work

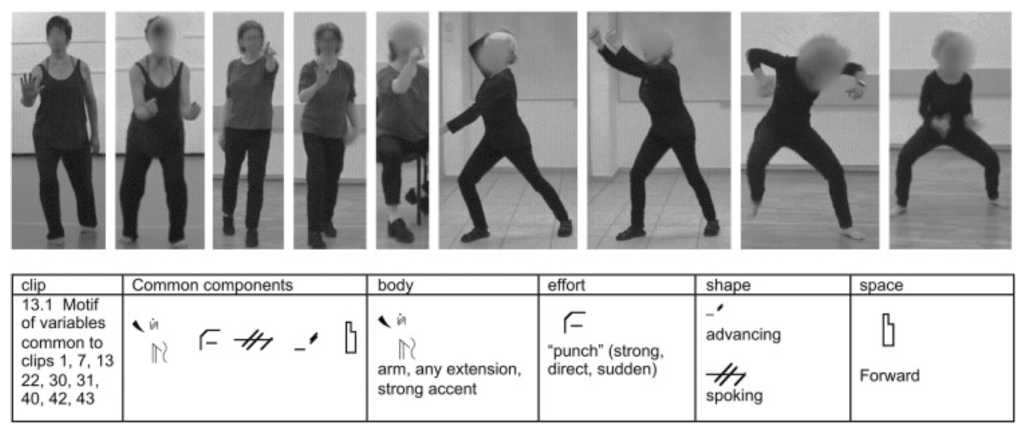

Researchers have begun to focus on body language recognition by creating custom datasets and implementing tools like Laban Movement Analysis to identify specific gestures. Laban Movement Analysis (LMA), as summarized by Tsachor et al. (2019), is an internationally used system for observing movement. LMA identifies various movement variables often used for statistical analysis and general therapeutics. Tsachor et al. analyzed dance/movement therapy (DMT) and its effects on psychological wellbeing, a niche but relevant example of the analysis technique in a real-world scenario. Dance is one of the many applications of body language detection, but understanding dance emotion creates a new experience for viewers replicated in other industries and hobbies.

Accordingly, therapy relies on emotion, but facial features are not the only parts of the body that show emotion. In order to get an accurate, holistic view of any human emotion, AI must analyze spontaneous gestures, also known as micro gestures. Chen et al. (2018) prove this through their project, implementing spontaneous gestures and deep learning to analyze stress levels. As of mid-2022, there are limited resources regarding the study of gestures and subsequent identification by AI/ML models. This study uses a Spontaneous Micro Gesture (SMG) dataset of nearly 4,000 labeled gestures. Chen et al. (2018) found that many individuals use micro gestures to add a new layer to their expression that further emphasizes their feelings.

The group proposes a framework to encode gestures to a Bayesian network and help predict emotion states. Their results show that most participants naturally perform micro gestures to relieve their mental fatigue. Additionally, over 40 participants were interviewed extensively and put through a story-telling game with two emotional states. In order to contrast these story-telling game participants with ordinary individuals, the group also conducted a short test with ordinary people and trained orators. As of its publication, it was the first gesture dataset and demonstrated immense progress as a gesture prediction tool, especially for hidden emotions.

Adding onto the findings of Chen et al. (2018), Automated Recognition of Bodily Expression of Emotion (ARBEE) identifies the effectiveness of Laban Movement Analysis (LMA) in identifying bodily expressions. It also compares other methods and studies and analyzes two kinds of input data: body skeletons and raw images. After analyzing the data, the system intertwines emotional detection and body language and forms a holistic model of what the character in question is feeling. The study also cites a variety of applications, ranging from personal assistants to social and police robots, all cases that require an immense understanding of the human form and human feelings. The ARBEE technology is a pioneering mechanism through which modern tools relying on social and emotional recognition can operate (Luo et al., 2020).

Schindler et al. (2008) tackle the lack of study of body gestures. Most people perceive emotion through a smile, frown, or other facial features rather than gestures or body movements. This group created a computational model of the visual cortex, a visual hierarchy of neural detectors that can discriminate seven emotional states from static views of body poses. The researchers also evaluated the model on human test subjects.

Similarly, Aristidou et al. (2015) found that with the increased availability of motion databases and advancements in analyzing that motion, utilizing data has been more accessible than ever before. Their paper analyzes Russell’s circumplex model and Laban Movement Analysis (LMA) to extract LMA components and index and classify dance movements and the related emotions those dancers convey. The results of their experiments show that, with LMA extracting and indexing various movements and classifying them by emotion, researchers can learn how and why people express emotion, all while working in parallel with other motion tracking applications and classification tools.

Liu et al. (2021) build on this emotion classification work through the iMiGUE dataset, a micro gesture dataset for emotion analysis. This dataset is unique because it focuses entirely on nonverbal features rather than the face, the more common focus of emotion analysis. Each video in the dataset has specified video frames during which a subject expresses a particular emotion and performs a specific action with their hands and head. Additionally, to limit imbalance in the dataset samples, the project uses unsupervised learning to capture and investigate the micro gestures, enhancing the accessibility of emotion AI. By identifying the location of those gestures and classifying each into one of 32 classes of emotion and action, the researchers could create a holistic perspective of body language during interviews and press conferences of significant athletes.

Analyzing all the components of a successful video and how viewers react to a creator may be used to identify how viral a video might become (Biel et al., 2022). Numerous researchers have picked an industry or a situation in which someone shows emotion nonverbally, but Biel et al. (2022) take their analysis to a different dimension: that of social media. Their project examines social media, particularly vlogging, to identify the personality and general characteristics of vloggers, relying mainly on nonverbal cues and audiovisual analysis. The tool will significantly impact consumers because the personality impressions are crowdsourced and do not rely on a computer model. By analyzing these cues, the model can make educated predictions regarding future content and viewer reactions.

Dael et al. (2012) take a more general approach. Instead of tackling the face and its movements, the group tackles body posture by implementing the Body Action and Posture (BAP) coding system to examine the types and frequency of various body movements. The project asked ten actors to recreate 12 emotions and investigated whether these patterns support emotion theory, bidimensional theory, and componential appraisal theory. The study revealed that distinct body movement patterns occur when portraying various emotions, leading to a clear-cut path for further research and a potential AI model. The study also found that although a few emotions were expressed by just one pattern, the vast majority of emotions were represented in various forms, allowing for a wide variety of emotion differentiation and potential research in the minutiae of expression, primarily action readiness and eagerness. Although much additional work is required in the field, using the frameworks listed in this group’s research will allow for a more calculated approach to experimentation and hypothesis testing.

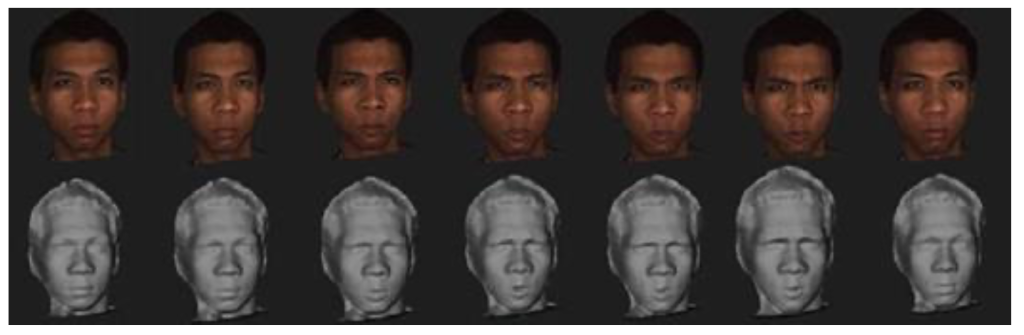

Yin et al. (2008) take emotion detection to the next level through in-depth analysis in multimodal sensing. With most facial emotion analysis taking place with a static, 2D photograph, research leaves out many details from the classification process. In order to improve analysis results and produce a holistic view of the head, multimodal sensing and the BU-3DFE dataset produced from comprehensive data scans can be implemented, pioneering a wholly revamped study of emotion and expression in humans. The approach showed an 83% correct recognition rate in classifying six expressions (happiness, disgust, fear, anger, surprise, sadness). To reduce bias, the database also contains over 100 subjects ranging from 18 to 70 years old and from various backgrounds (White, Black, East-Asian, Middle-East Asian, Indian, Hispanic).

On emotion detection, Benitez-Quiroz et al. (2016) propose a new algorithm to analyze and annotate millions of facial expressions. In order to accurately identify the face and the emotions it displays, there must be annotated databases. However, those databases take far too long to create manually. Their project, titled EmotioNet, identifies facial expressions by implementing a unique computer vision system that annotates a database of over 1 million images from the internet. Furthermore, the program downloads millions of images with emotional keywords and annotates them accordingly. This project will be instrumental in any analysis of facial emotions in the future because of its sheer scale and accuracy. By annotating images, users of their dataset can get a good view of the face and train models more accurately than ever before.

Along with EmotioNet, ImageNet is a massive image database that researchers can use for object detection and image classification on an enormous scale. Krizhevsky et al. (2012) trained a convolutional neural network to classify 1.3 million images in the LSVRC-2010 ImageNet training set into 1000 classes. They reported top-1 and top-5 error rates of 39.7% and 18.9%, better than other models published at the time. The network had 60 million parameters and 500,000 neurons, with five convolutional layers, some of which were followed by max-pooling layers, and two globally connected layers with a final 1000-way softmax. They used non-saturating neurons and an efficient GPU implementation of the networks. To reduce overfitting, they used a regularization method. The whole system proved highly effective at classification and is one of many deep learning models with immense success at image classification, the results of which can extend to facial emotion and body language emotion.

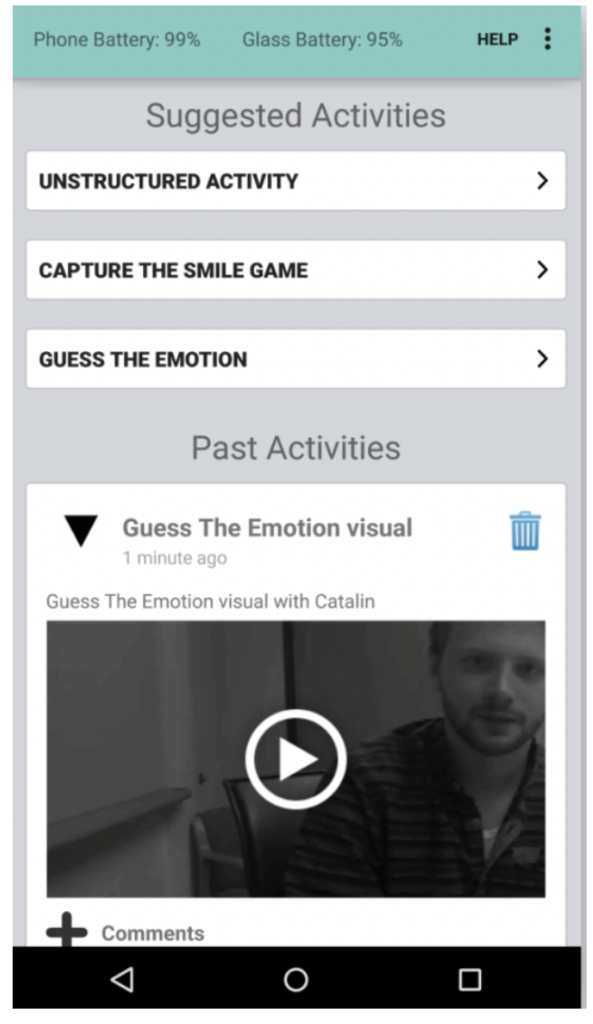

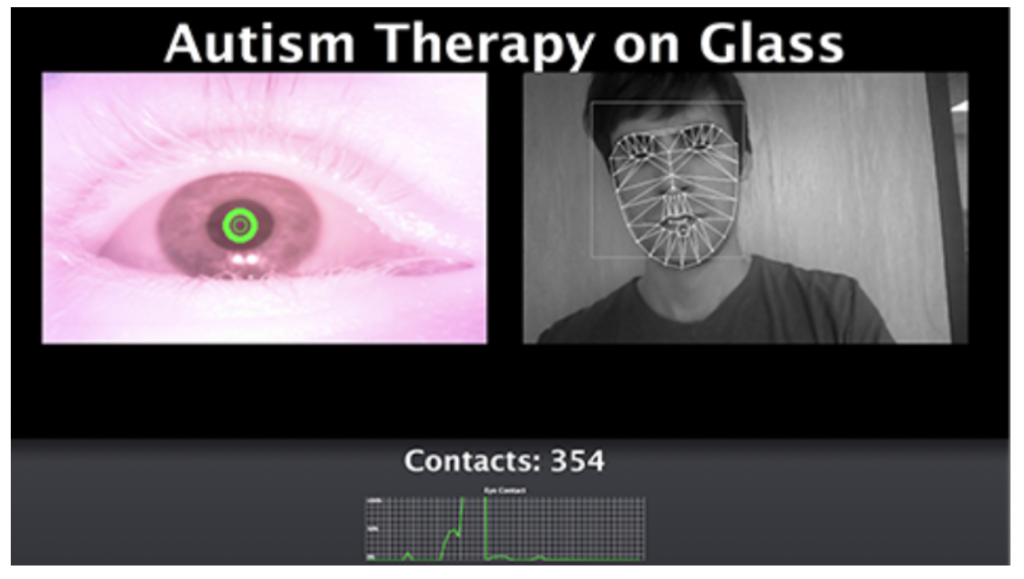

Many researchers have written papers detailing their deep learning endeavors in body language prediction and the creation of vast datasets. However, Stanford University recently funded an Autism Glass project to see the effect of all this facial emotion analysis in the real world. Various researchers developed a mobile application that gamifies the process of improving emotion recognition and social awareness in autistic children. The project used Google Glass, a hardware tool that uses computer vision and other algorithms to create immersive user experiences. Parents would switch on the camera and point at various people, all displaying different emotions. The child would then guess which emotion was being shown. If they were right, they received a prize and verbal praise. If they did not get the answer right, they kept trying until they did. Parents considered the project an immense success, leading the researchers toward more studies, development, and public outreach. Although more research is needed before researchers launch a full-scale release in the mainstream market, initial success has led to a large market and target audience for the tool.

4. Methods and Analysis

We must understand the vanishing gradient problem to understand our use of a Residual Neural Network or ResNet instead of a classic deep learning network. As stated by Liu et al. (2021), deep neural networks achieve poor results or even training failure due to vanishing gradient and saddle point problems. Jha et al. (2021) elaborate on these issues by pointing out that advanced deep learning techniques were limited in their use because large datasets were relatively rare. However, with the increasing availability of these datasets, many people decided to implement deeper neural networks to boost their model performance. To their dismay, the performance worsened due to the vanishing gradient problem. As more layers join the system, gradients of the loss function approach zero, making the model increasingly difficult to train (Wang, 2019). We implemented a residual neural network to achieve our deep learning goals and maximize our accuracy. As Wen et al. (2019) state, a residual neural network or ResCNN solves the problems posed by general deep learning. Classic deep learning models face the vanishing gradient problem paired with backpropagation, but ResCNN skips several blocks of convolutional layers and implements shortcuts to overcome exploding gradients. Zaeemzadeh et al. (2021) discuss what makes a ResNet model so accurate. Networks of more than 1,000 layers saw significant gains in their overall accuracy through residual neural networks.

Furthermore, ResNet/ResCNN leads to the preservation of the norm of the gradient and stable backpropagation, optimizing accuracy. They analyze ResNet through skip connections and create new theoretical results on the advantages of those connections in a modern ResNet system. Although more research is continuously revising the highest-performing Residual Neural Networks, ResNet has opened a door for deep learning to become accurate and feasible for even the most extensive datasets.
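The vanishing gradient argument above can be illustrated with a toy calculation. This is a deliberately simplified sketch that treats each layer as a scalar function with a small local derivative; real networks involve Jacobian matrices rather than scalars, and the value 0.01 and the depth of 50 are arbitrary assumptions chosen only to make the effect visible.

```python
import math
from typing import Iterable


def plain_gradient(local_derivs: Iterable[float]) -> float:
    """Chain rule through stacked layers: the product of the local derivatives."""
    return math.prod(local_derivs)


def residual_gradient(local_derivs: Iterable[float]) -> float:
    """Each residual block computes x + F(x), so its derivative is 1 + F'(x)."""
    return math.prod(1.0 + d for d in local_derivs)


# Hypothetical network: 50 layers, each with local derivative 0.01.
derivs = [0.01] * 50
plain = plain_gradient(derivs)        # shrinks toward zero with depth
residual = residual_gradient(derivs)  # stays on the order of 1
```

In the plain stack the gradient reaching the first layer is a product of fifty small numbers and is effectively zero, while the shortcut contributes a constant term of 1 at every block, so the signal survives the trip back through the network.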

Because of the ResNet/ResCNN’s efficacy and efficiency, researchers have already implemented it in various endeavors. McAllister et al. (2018) combined supervised machine learning and deep residual neural networks to log food intake and maintain sustainable, healthy lifestyles for the obese, a population more likely to endure chronic conditions like diabetes, heart disease, sleep apnea, and cancer. Their research concluded that deep CNNs were remarkably accurate and that the Food-101 dataset they had created would work in a wide variety of food image classification tasks. Panahi et al. (2022) took inspiration from a current event and implemented their version of a residual neural network on chest x-rays to identify COVID-19 patients. They substantiated a claim that machine learning models paired with radiography imagery can reliably predict lung-related diseases, from pneumonia to COVID-19.

With the benefits of ResNet in mind, we began exploring suitable images to train our model. The iMiGUE dataset developed by Liu et al. (2021) best suited our needs. iMiGUE, an acronym for “identity-free video dataset for micro gesture understanding and emotion analysis,” focuses on nonverbal gestures prompted by unintentional behaviors rather than gestures given purposefully for effective communication cues. Accurate emotional detection algorithms must pick up these unintentional actions to effectively gauge a person’s inner feelings and holistically identify emotions. Liu et al. (2021) provide more context to understand these two types of gestures better. Existing studies and datasets focus on illustrative gestures (like waving hands to symbolize leaving or greeting) rather than gestures like covering the head to indicate disappointment or sadness.

Additionally, similar studies ended up inadvertently causing participants in the studies to suppress their emotions, particularly negative ones, during their interactions with researchers, as many opted not to express genuine emotion because of existing social norms or a lack of comfort with the study. Furthermore, existing data provides a general overview of behaviors rather than an in-depth analysis of those behaviors to identify hidden emotions. iMiGUE provides revolutionary new data to combat existing data’s flaws.

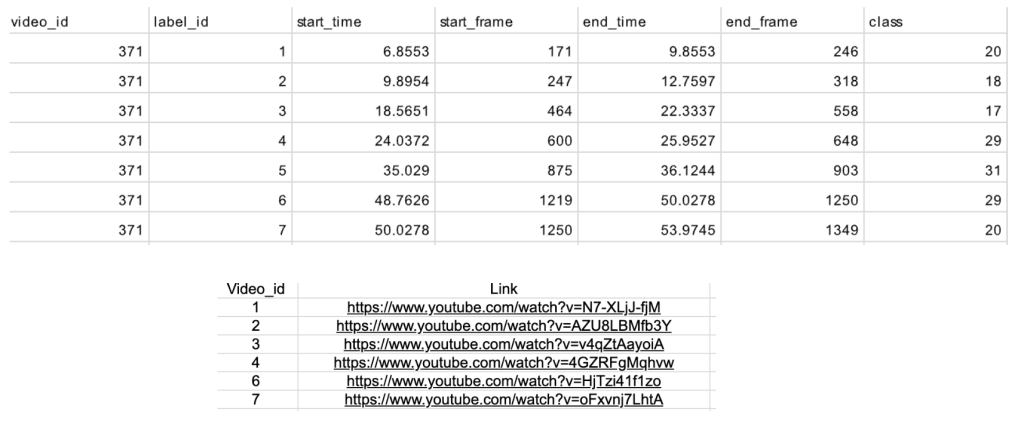

The figure above shows that the iMiGUE dataset analyzes over 350 tennis post-match interviews and labels them according to 32 classes. Each class represents a different action that can correspond to one of a list of emotions: angry, fear, focused, happy, neutral, sad, surprised, and uncomfortable. See the figure below for an example.

The figure above shows that a tennis player has crossed her hands, a clear symbol of class 17. We then use that information to classify the image as an example of anger, primarily because a critical characteristic of being upset or angry is a pair of visibly crossed hands.

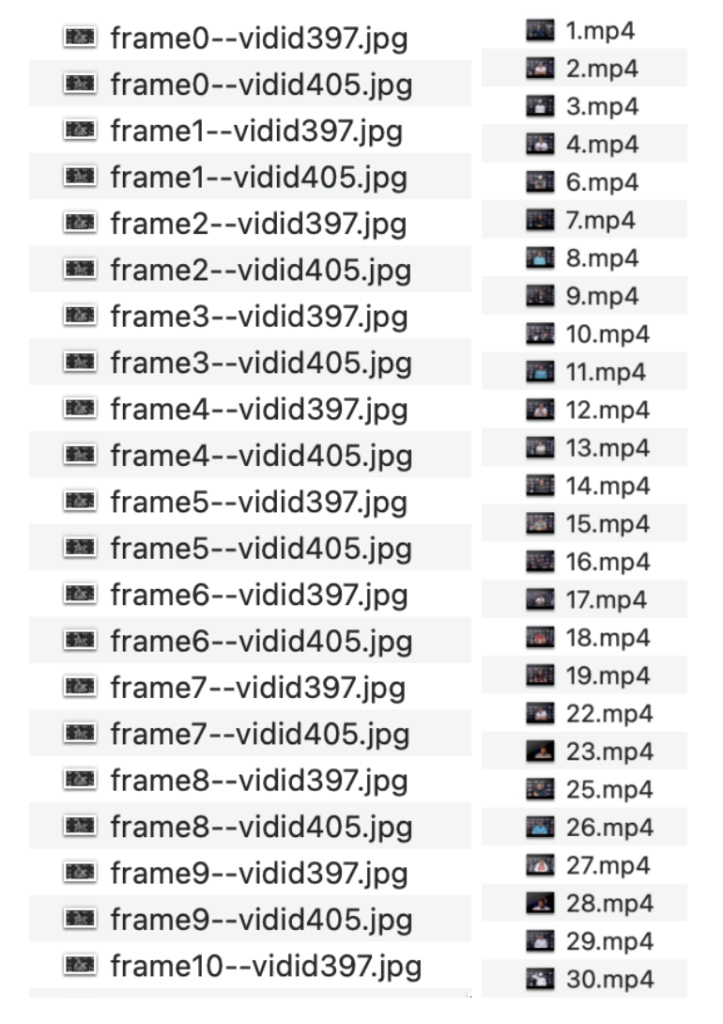

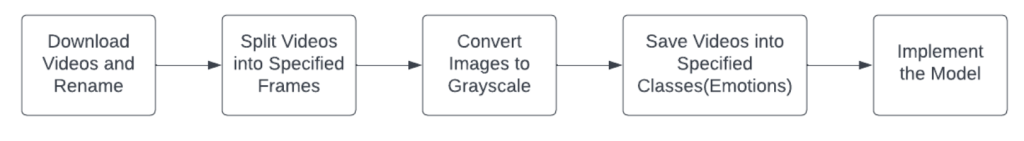

However, the iMiGUE dataset is not an image dataset. Instead, it relies on video classification and analysis from YouTube post-match links, meaning that in order to fit the dataset for our image classification needs, we had to create a custom script to decode each frame of each video and use iMiGUE’s in-built frame labels to name each frame according to what class it symbolized. We implemented PyTube to download each video and immediately renamed it to its video id as stated by iMiGUE’s dataset. We then looked through iMiGUE’s frame database sheet and read each video id column, start frame, and end frame. The frames in between the start and end frames were the frames we would write to an emotion’s folder. We iterated through each set of frames and designated classes to create the 48,000 converted grayscale images we used to fit our model. The figures below show sample iMiGUE data.

Our program labels each image through a particular naming convention. Each file name includes the frame number and video ID, and the algorithm sorts the images into the emotion folders (happy, sad, angry, focused, uncomfortable, neutral, fear, and surprise). See a snapshot of the data below.
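The labeling step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the script used in the project: the `Annotation` fields, the inclusive treatment of the end frame, the `frames` root folder, the file-name pattern, and the video ID `0001` are all hypothetical, and the video download and frame decoding are left out. The mapping of class 17 (crossed hands) to anger follows the example given earlier in this section.

```python
from pathlib import PurePosixPath
from typing import Iterable, Iterator, NamedTuple


class Annotation(NamedTuple):
    """One row of the frame sheet (field names are assumptions)."""
    video_id: str
    start_frame: int
    end_frame: int
    class_id: int


def planned_outputs(
    rows: Iterable[Annotation],
    class_to_emotion: dict[int, str],
    root: str = "frames",
) -> Iterator[tuple[int, PurePosixPath]]:
    """Yield (frame number, destination path) for every labeled frame."""
    for row in rows:
        emotion = class_to_emotion.get(row.class_id)
        if emotion is None:
            continue  # skip gesture classes with no assigned emotion
        # Assumption: the end frame is included in the labeled range.
        for frame in range(row.start_frame, row.end_frame + 1):
            name = f"{row.video_id}_{frame:06d}.png"
            yield frame, PurePosixPath(root) / emotion / name


rows = [Annotation(video_id="0001", start_frame=250, end_frame=252, class_id=17)]
outputs = list(planned_outputs(rows, {17: "angry"}))
```

Separating the planning of file names from the actual frame decoding keeps the labeling logic easy to check against the annotation sheet before any video is processed.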

With the data identified, we began the implementation of our model.

1. We first generated new image data through data augmentation. We ran the augmentation script on the training and validation data (splitting the data into 80% training and 20% validation). Each image was fed as a 100 by 100 NumPy array.

2. We then imported the model from TensorFlow. A detailed overview of our weights exists below.

3. After building the model, we compiled it using the Adam optimizer, a gradient-based optimization method based on adaptive estimates of lower-order moments per Kingma et al. (2014).
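For readers unfamiliar with Adam, the update rule from Kingma et al. (2014) can be written for a single scalar parameter as below. This is an illustrative sketch only: the default hyperparameters are the ones suggested in that paper, not necessarily the values used in this project, and the quadratic objective is a hypothetical stand-in for a real loss function.

```python
import math


def adam_step(
    param: float,
    grad: float,
    m: float,
    v: float,
    t: int,
    lr: float = 0.001,
    beta1: float = 0.9,
    beta2: float = 0.999,
    eps: float = 1e-8,
) -> tuple[float, float, float]:
    """Apply one Adam update and return the new (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction, t starts at 1
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# Toy objective f(x) = x^2 with gradient 2x (hypothetical, for illustration).
x, m, v = 1.0, 0.0, 0.0
for step in range(1, 2001):
    x, m, v = adam_step(x, 2 * x, m, v, step, lr=0.01)
```

Dividing by the square root of the second-moment estimate scales each step by the recent gradient magnitude, which is why the first update moves the parameter by roughly the learning rate regardless of how large the raw gradient is.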

5. Analysis and Results

We evaluated the model using accuracy, precision, recall, AUC, and F1 score over 10 epochs (as more epochs led to only minor improvement). After multiple trials, it appeared clear the model was overfitting, as validation loss increased while validation accuracy decreased. The model appeared to classify training data fairly accurately while struggling to identify emotions and correctly predict labels for the validation data. A particular flaw was apparent from the beginning of the model-building and data collection. Even though the 32 classes effectively identified various behaviors a particular player exhibited during an interview, mapping each class to a single emotion could not be entirely accurate. The above example described crossing hands as a symbol of anger or unhappiness. However, it could also mean fear or even discomfort if the situation was unfavorable. Assigning an emotion to a behavior will not lead to entirely accurate predictions but rather an educated guess. A case study is examined below.
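The overfitting signal described above, validation loss rising after an initial decline, can be checked programmatically. The sketch below shows one common way to do so, a patience-based early-stopping test; it is offered as an illustration rather than as part of the method used in this study, and the loss values and function names are hypothetical.

```python
from typing import Sequence


def best_epoch(val_losses: Sequence[float]) -> int:
    """Index of the epoch with the lowest validation loss."""
    return min(range(len(val_losses)), key=val_losses.__getitem__)


def should_stop(val_losses: Sequence[float], patience: int = 3) -> bool:
    """True when the last `patience` epochs all failed to beat the earlier best."""
    if len(val_losses) <= patience:
        return False  # not enough history to judge yet
    best_before = min(val_losses[:-patience])
    return all(loss >= best_before for loss in val_losses[-patience:])


# Hypothetical validation losses, one value per epoch.
history = [1.20, 0.95, 0.90, 0.97, 1.05, 1.10]
stop_now = should_stop(history, patience=3)
keep_epoch = best_epoch(history)
```

With a check like this, training can halt at the point where the model stops generalizing and the weights from the best epoch can be kept, instead of running a fixed number of epochs.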

The two preceding images are virtually identical. They were taken on different days at different zoom levels but capture the inherent flaw of this experiment: the lack of context. A tennis player interlocks her fingers and stares straight into a reporter’s eyes with no emotion. However, one image is classified as “fearful” while the algorithm classifies the other as “angry.” Body language is harder to identify and draw conclusions from when compared to facial emotions. A happy face is characterized by a smile or similar action, while a happy person is represented in various ways by body language. These ways often overlap with other emotions, leading to further confusion. This inability to distinguish such images from one another decreased the accuracy of the results, but the model still reached a peak validation accuracy of 87.7% and 87.8% for 10,000 and 49,000 images, respectively.

Furthermore, happy, fearful, uncomfortable, and focused images were more challenging to classify than surprise and sadness because they contained overlapping emotional gestures like interlocking fingers or crossed hands. In contrast, surprise and sadness often had clear signs like covering the face with hands. More data based on real-world scenarios will make this model run more effectively in scenarios besides a still frame, all while preventing overfitting and increasing the likelihood of commercial use.

6. Future Work

So far, this project offers limited insight into analyzing complex real-world scenarios, given that we only trained our model with still images from an unchanging setting. Tennis players giving post-match interviews often sit at a podium rather than moving around or appearing in a natural setting. We will incorporate more data sources from various scenes and subjects to solve those issues and improve this project. The more data our model is trained on, the more situations its predictions can cover. Additionally, our data had the flaw of not matching emotions to gestures accurately. Many behaviors were associated with numerous emotions and required context to reach an effective conclusion. Therefore, combining facial emotions and body language can help provide a holistic view of a person’s emotional state, helping highly neurodivergent individuals learn how to identify those hidden states accurately and not learn incorrect correlations between a micro gesture and the emotion that the gesture may be exhibiting.

Furthermore, ResNet50 is certainly not the only model we can implement for our classification needs. VGG-16, InceptionV3, and EfficientNet each have distinct strengths that may allow them to outperform ResNet50. However, more experimentation is needed before we can reach a clear conclusion. On the topic of model architectures, we must also discuss video classification. The iMiGUE dataset is a video dataset, so our approach required extensive slicing into individual frames and tedious labeling. Instead of splitting each video into frames, we can experiment with analyzing the videos themselves through video classification. Video classification may make emotion recognition more accurate because it provides context and the developments leading up to a potential micro gesture, including potential facial signals and emotional expressions.
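A common first step toward video classification is to group consecutive frames into short, overlapping clips so that a model sees the motion leading up to a gesture rather than a single still. The following is a minimal sketch of that preprocessing step only; the function names, the clip length of 16 frames, and the stride of 8 frames are illustrative assumptions rather than settings from this study.

```python
from collections import Counter


def clip_windows(num_frames, clip_length=16, stride=8):
    """Return lists of frame indices, one list per overlapping clip."""
    if clip_length <= 0 or stride <= 0:
        raise ValueError("clip_length and stride must be positive")
    # The last valid start is the one whose clip ends on the final frame;
    # videos shorter than clip_length yield no clips.
    last_start = num_frames - clip_length
    return [
        list(range(start, start + clip_length))
        for start in range(0, last_start + 1, stride)
    ]


def majority_label(frame_labels):
    """Give a clip the most common label among its frames."""
    if not frame_labels:
        raise ValueError("a clip needs at least one frame label")
    return Counter(frame_labels).most_common(1)[0][0]
```

Existing per-frame labels can be reused by passing the labels of each clip's frames to `majority_label`, which avoids relabeling the dataset from scratch.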

After more testing and analysis, we plan to make this product a commercial tool by developing a web application. Like the Autism Glass Project, we plan on making this product a gamified application that allows highly neurodivergent individuals, particularly children, to guess which emotions their parents are expressing. By making the experience a fun and exciting activity, children are more likely to play the game and learn the emotions at hand, enhancing their understanding of social cues and better preparing them for communication in the real world. Gamification has proven effective in real-world settings, particularly in medical endeavors. Sardi et al. (2017) write that by applying game mechanisms to non-game contexts, individuals are more likely to experience cognitive and motivational benefits. Although more research is required to establish gamification as a technique with long-lasting benefits, it has shown considerable promise.

7. Conclusion

Although researchers are making technological breakthroughs in autism, there is a clear need for a tool that can enhance the emotional development of younger individuals. There has been some progress in body language sensing and movement analysis, as shown by Schindler et al. (2008) and others, but not enough research has linked emotions to micro gestures. Many autistic individuals have difficulty recognizing emotions and analyzing social cues, which suggests that a tool that takes people’s movements and teaches what emotions those movements indicate could be life-changing for users. Autistic children who learn emotion detection early may perform better in job interviews, relationship building, school, and numerous other situations previously hindered by autism’s characteristic symptoms (Morgan et al. 2014). Furthermore, this tool could be instrumental in treating autism in underserved communities. Rather than paying for extensive therapy that can cost tens of thousands of dollars, autistic children can improve their social skills in the comfort of their families and homes (Horlin et al. 2014).

We wanted our tool to be convenient for autistic users, so we focused on identifying common emotions in a casual, seated setting. Identifying emotions relies heavily on facial signals and body language. Thus, we adopted a publicly available ResNet50 model and trained it on over 49,000 images, each a frame drawn from over 490 post-match interviews. Our algorithm sorted each image into an associated folder, with each folder named after an emotion. To make our project feasible and practical, we picked eight common emotions: happy, angry, sad, surprised, focused, uncomfortable, neutral, and fearful. Our experiments showed that happy, fearful, uncomfortable, and focused images were more challenging to classify than surprise and sadness because their expressions overlap. Interlocking hands, for instance, may show fear, focus, or anger. Hence, the lack of data with distinct behavioral expressions for each emotion affected our results and lowered our accuracy. To address this accuracy issue, we are training our model on more data from different vantage points and settings, all while ensuring that the behaviors expressed by the subjects in the data are distinguishable.
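The folder-per-emotion layout described above can be produced with a short script. The following is a minimal sketch and not the code used in this study: the function name `sort_frames_by_emotion` and its arguments are hypothetical, and the labels are assumed to arrive as a dictionary mapping each frame's file name to one of the eight emotions.

```python
import shutil
from pathlib import Path

# The eight emotion classes named in the text.
EMOTIONS = (
    "happy", "angry", "sad", "surprised",
    "focused", "uncomfortable", "neutral", "fearful",
)


def sort_frames_by_emotion(frame_labels, source_dir, output_dir):
    """Copy each frame into output_dir/<emotion>/ and return per-emotion counts."""
    source_dir = Path(source_dir)
    output_dir = Path(output_dir)
    counts = {}
    for filename, emotion in frame_labels.items():
        if emotion not in EMOTIONS:
            raise ValueError(f"unknown emotion label: {emotion!r}")
        target_dir = output_dir / emotion
        # Create the emotion folder the first time it is needed.
        target_dir.mkdir(parents=True, exist_ok=True)
        # Copy rather than move so the original frames stay untouched.
        shutil.copy2(source_dir / filename, target_dir / filename)
        counts[emotion] = counts.get(emotion, 0) + 1
    return counts
```

A directory tree with one subfolder per class is the layout that common image loaders, such as torchvision's `ImageFolder`, read directly, and the returned counts make class imbalance visible before training.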

The ultimate goal of our project, given resources, time, and additional knowledge, would be to build a production-ready application that implements a game experience for autistic children. Each child guesses emotions and earns points based on how accurately they guess, winning prizes along the way. The prizes, paired with the fun of guessing the emotions of a family member or friend, will motivate the children to keep building their knowledge base. Ultimately, our project aims to cultivate a positive community in which computer vision can effectively recognize emotions and improve the short-term and long-term lives of autistic children, opening doors for them that were previously difficult to reach. By detecting emotions and teaching autistic children about social intelligence and the importance of communication, our project aims to help those children reach their fullest potential.

References

Ohl, A., Grice Sheff, M., Small, S., Nguyen, J., Paskor, K., & Zanjirian, A. (2017). Predictors of employment status among adults with Autism Spectrum Disorder. Work (Reading, Mass.), 56(2), 345–355. https://doi.org/10.3233/WOR-172492

Crow, S. J., Mitchell, J. E., Crosby, R. D., Swanson, S. A., Wonderlich, S., & Lancanster, K. (2009). The cost effectiveness of cognitive behavioral therapy for bulimia nervosa delivered via telemedicine versus face-to-face. Behaviour research and therapy, 47(6), 451–453. https://doi.org/10.1016/j.brat.2009.02.006

Landa R. J. (2018). Efficacy of early interventions for infants and young children with, and at risk for, autism spectrum disorders. International review of psychiatry (Abingdon, England), 30(1), 25–39. https://doi.org/10.1080/09540261.2018.1432574

Sambare, M. (2020). FER-2013, Version 1. Retrieved September 1, 2022 from https://www.kaggle.com/datasets/msambare/fer2013

Shakir, Y. (2021). Emotion Recognition with ResNet50, Version 1, Retrieved September 1, 2022 from https://www.kaggle.com/code/yasserhessein/emotion-recognition-with-resnet50

Amaral D. G. (2017). Examining the Causes of Autism. Cerebrum : the Dana forum on brain science, 2017, cer-01-17.

Ramaswami, G., & Geschwind, D. H. (2018). Genetics of autism spectrum disorder. Handbook of clinical neurology, 147, 321–329. https://doi.org/10.1016/B978-0-444-63233-3.00021-X

Faras, H., Al Ateeqi, N., & Tidmarsh, L. (2010). Autism spectrum disorders. Annals of Saudi medicine, 30(4), 295–300. https://doi.org/10.4103/0256-4947.65261

Choi, L., & An, J. Y. (2021). Genetic architecture of autism spectrum disorder: Lessons from large-scale genomic studies. Neuroscience and biobehavioral reviews, 128, 244–257. https://doi.org/10.1016/j.neubiorev.2021.06.028

Kim, N., Kim, K. H., Lim, W. J., Kim, J., Kim, S. A., & Yoo, H. J. (2020). Whole Exome Sequencing Identifies Novel De Novo Variants Interacting with Six Gene Networks in Autism Spectrum Disorder. Genes, 12(1), 1. https://doi.org/10.3390/genes12010001

Emberti Gialloreti, L., Mazzone, L., Benvenuto, A., Fasano, A., Alcon, A. G., Kraneveld, A., Moavero, R., Raz, R., Riccio, M. P., Siracusano, M., Zachor, D. A., Marini, M., & Curatolo, P. (2019). Risk and Protective Environmental Factors Associated with Autism Spectrum Disorder: Evidence-Based Principles and Recommendations. Journal of clinical medicine, 8(2), 217. https://doi.org/10.3390/jcm8020217

Xu, G., Strathearn, L., Liu, B., O’Brien, M., Kopelman, T. G., Zhu, J., Snetselaar, L. G., & Bao, W. (2019). Prevalence and Treatment Patterns of Autism Spectrum Disorder in the United States, 2016. JAMA pediatrics, 173(2), 153–159. https://doi.org/10.1001/jamapediatrics.2018.4208

Warren, Z., Veenstra-VanderWeele, J., Stone, W., Bruzek, J. L., Nahmias, A. S., Foss-Feig, J. H., Jerome, R. N., Krishnaswami, S., Sathe, N. A., Glasser, A. M., Surawicz, T., & McPheeters, M. L. (2011). Therapies for Children With Autism Spectrum Disorders. Agency for Healthcare Research and Quality (US).

Liu, M., Chen, L., Du, X., Jin, L., & Shang, M. (2021). Activated Gradients for Deep Neural Networks. IEEE transactions on neural networks and learning systems, PP, 10.1109/TNNLS.2021.3106044. Advance online publication. https://doi.org/10.1109/TNNLS.2021.3106044

Wen, L., Dong, Y., & Gao, L. (2019). A new ensemble residual convolutional neural network for remaining useful life estimation. Mathematical biosciences and engineering : MBE, 16(2), 862–880. https://doi.org/10.3934/mbe.2019040

Zaeemzadeh, A., Rahnavard, N., & Shah, M. (2021). Norm-Preservation: Why Residual Networks Can Become Extremely Deep?. IEEE transactions on pattern analysis and machine intelligence, 43(11), 3980–3990. https://doi.org/10.1109/TPAMI.2020.2990339

Wang, H., Li, K., & Xu, C. (2022). A New Generation of ResNet Model Based on Artificial Intelligence and Few Data Driven and Its Construction in Image Recognition Model. Computational intelligence and neuroscience, 2022, 5976155. https://doi.org/10.1155/2022/5976155

Tsachor, R. P., & Shafir, T. (2019). How Shall I Count the Ways? A Method for Quantifying the Qualitative Aspects of Unscripted Movement With Laban Movement Analysis. Frontiers in psychology, 10, 572. https://doi.org/10.3389/fpsyg.2019.00572

H. Chen, X. Liu, X. Li, H. Shi and G. Zhao, “Analyze Spontaneous Gestures for Emotional Stress State Recognition: A Micro-gesture Dataset and Analysis with Deep Learning,” 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), 2019, pp. 1-8, doi: 10.1109/FG.2019.8756513.

Luo, Y., Ye, J., Adams, R. B., Jr, Li, J., Newman, M. G., & Wang, J. Z. (2020). ARBEE: Towards Automated Recognition of Bodily Expression of Emotion in the Wild. International journal of computer vision, 128(1), 1–25. https://doi.org/10.1007/s11263-019-01215-y

Schindler, K., Van Gool, L., & de Gelder, B. (2008). Recognizing emotions expressed by body pose: a biologically inspired neural model. Neural networks : the official journal of the International Neural Network Society, 21(9), 1238–1246. https://doi.org/10.1016/j.neunet.2008.05.003

Aristidou, Andreas & Charalambous, Panayiotis & Chrysanthou, Yiorgos. (2015). Emotion Analysis and Classification: Understanding the Performers’ Emotions Using the LMA Entities. Computer Graphics Forum. 34. 10.1111/cgf.12598.

J. -I. Biel and D. Gatica-Perez, “The YouTube Lens: Crowdsourced Personality Impressions and Audiovisual Analysis of Vlogs,” in IEEE Transactions on Multimedia, vol. 15, no. 1, pp. 41-55, Jan. 2013, doi: 10.1109/TMM.2012.2225032.

Dael, N., Mortillaro, M., & Scherer, K. R. (2012). Emotion expression in body action and posture. Emotion (Washington, D.C.), 12(5), 1085–1101. https://doi.org/10.1037/a0025737

Yin, L., Wei, X., Sun, Y., Wang, J., & Rosato, M. J. (2006). A 3D Facial Expression Database For Facial Behavior Research. 7th International Conference on Automatic Face and Gesture Recognition, 10–12 April 2006, pp. 211–216.

Jha, D., Gupta, V., Ward, L., Yang, Z., Wolverton, C., Foster, I., Liao, W. K., Choudhary, A., & Agrawal, A. (2021). Enabling deeper learning on big data for materials informatics applications. Scientific reports, 11(1), 4244. https://doi.org/10.1038/s41598-021-83193-1

McAllister, P., Zheng, H., Bond, R., & Moorhead, A. (2018). Combining deep residual neural network features with supervised machine learning algorithms to classify diverse food image datasets. Computers in biology and medicine, 95, 217–233. https://doi.org/10.1016/j.compbiomed.2018.02.008

Panahi, A., Askari Moghadam, R., Akrami, M., & Madani, K. (2022). Deep Residual Neural Network for COVID-19 Detection from Chest X-ray Images. SN computer science, 3(2), 169. https://doi.org/10.1007/s42979-022-01067-3

Sardi, L., Idri, A., & Fernández-Alemán, J. L. (2017). A systematic review of gamification in e-Health. Journal of biomedical informatics, 71, 31–48. https://doi.org/10.1016/j.jbi.2017.05.011

Morgan, L., Leatzow, A., Clark, S., & Siller, M. (2014). Interview skills for adults with autism spectrum disorder: a pilot randomized controlled trial. Journal of autism and developmental disorders, 44(9), 2290–2300. https://doi.org/10.1007/s10803-014-2100-3

Horlin, C., Falkmer, M., Parsons, R., Albrecht, M. A., & Falkmer, T. (2014). The cost of autism spectrum disorders. PloS one, 9(9), e106552. https://doi.org/10.1371/journal.pone.0106552

Ji, Qingge & Huang, Jie & He, Wenjie & Sun, Yankui. (2019). Optimized Deep Convolutional Neural Networks for Identification of Macular Diseases from Optical Coherence Tomography Images. Algorithms. 12. 51. 10.3390/a12030051.

O’Shea, K., & Nash, R. (2015). An Introduction to Convolutional Neural Networks. https://arxiv.org/pdf/1511.08458.pdf

Liu, X., Shi, H., Chen, H., Yu, Z., Li, X., & Zhao, G. (n.d.). iMiGUE: An Identity-free Video Dataset for Micro-Gesture Understanding and Emotion Analysis. Retrieved September 8, 2022, from https://openaccess.thecvf.com/content/CVPR2021/papers/Liu_iMiGUE_An_Identity-Free_Video_Dataset_for_Micro-Gesture_Understanding_and_Emotion_CVPR_2021_paper.pdf

Kingma, D., & Lei Ba, J. (2017). Adam: A Method for Stochastic Optimization. https://arxiv.org/pdf/1412.6980.pdf

The Wall Lab | Autism Therapy on Glass. (2019). Stanford.edu. https://wall-lab.stanford.edu/projects/autism-therapy-on-glass/

Fabian Benitez-Quiroz, C., Srinivasan, R., & Martinez, A. M. (2016). EmotioNet: An Accurate, Real-Time Algorithm for the Automatic Annotation of a Million Facial Expressions in the Wild. www.cv-foundation.org. https://www.cv-foundation.org/openaccess/content_cvpr_2016/html/Benitez-Quiroz_EmotioNet_An_Accurate_CVPR_2016_paper.html

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems, 25. https://proceedings.neurips.cc/paper/2012/hash/c399862d3b9d6b76c8436e924a68c45b-Abstract.html

Chi-Feng Wang. (2019, January 8). The Vanishing Gradient Problem. Medium; Towards Data Science. https://towardsdatascience.com/the-vanishing-gradient-problem-69bf08b15484

Stanford University. (n.d.). Autism Glass Project. Autismglass.stanford.edu. Retrieved September 8, 2022, from https://autismglass.stanford.edu/

Ayan, E., & Ünver, H. M. (2018, April). Data augmentation importance for classification of skin lesions via deep learning. In 2018 Electric Electronics, Computer Science, Biomedical Engineerings’ Meeting (EBBT) (pp. 1-4). IEEE.

About the author

Kedaar Rentachintala

Kedaar is a senior at BASIS Independent Silicon Valley in San José, CA. He is interested in machine learning, data science, and artificial intelligence applications for those on the autism spectrum to improve their motor function, social awareness, and emotional intelligence.