Author: Connor Luke Kao

Mentor: Dr. Hong Pan

Mountain View High School

Abstract

Artificial intelligence (AI) has rapidly advanced into domains once considered uniquely human, raising urgent questions about the nature and limits of machine creativity. This paper reviews current evidence by analyzing AI performance on established creativity measures such as the Alternative Uses Task (AUT) and the Torrance Tests of Creative Thinking (TTCT). Results show that models like GPT-4 rival or even surpass average human scores in fluency and originality, suggesting strong competence in combinational and exploratory creativity. However, AI still falls short in transformational creativity—the radical, paradigm-shifting insights that redefine domains—due to its lack of curiosity, anomaly detection, emotional grounding, and embodied experience. While AI can expand the idea space and act as a powerful creative collaborator, concerns remain about algorithmic homogenization and the potential downgrading of human creative confidence. Drawing on perspectives from psychology, philosophy, law, and human–AI collaboration research, this paper argues that AI’s role is best understood as that of an amplifier and assistant rather than an autonomous inventor. Ultimately, the boundary between remix and revelation highlights both the impressive potential and the enduring limitations of artificial creativity, with implications for intellectual property law, cultural production, and the future of human imagination.

Key Terms Table

- Novelty: Degree to which an idea or output differs from known examples or reference sets; can be measured statistically (semantic/image distance) or subjectively

- Transformational Creativity: Creativity that alters the defining rules, constraints, or conceptual spaces of a domain

- Combinational Creativity: Creativity achieved by combining previously unrelated ideas or concepts

- Algorithmic flattening: Hypothesized narrowing of creative diversity due to model training biases and averaging tendencies, reducing variance in outputs

- Torrance Tests of Creative Thinking (TTCT): Standardized test assessing divergent thinking and other creative abilities through structured tasks, scored for fluency, originality, elaboration, and flexibility.

Introduction

Throughout the entire history of the human race, creativity has been considered an exclusively human trait — a blend of imagination, intuition, and lived experience that machines cannot replicate. Now with recent advances like GPT-5, AI is expanding into domains once thought to be uniquely human, prompting renewed urgency in asking what creativity truly means. From generating images to crafting poems to producing research papers, today’s AI capabilities are raising the question of whether these AI models represent true creativity or are just recombining old human work.

First, this review defines creativity using established frameworks before evaluating AI against those definitions. Tests such as the Alternative Uses Task (AUT) and Torrance Tests of Creative Thinking (TTCT) are then used to examine where AI’s abilities align with human creativity and where they diverge. Finally, this review considers the future implications for law, collaboration, and authorship, including AI’s impact on human creative practice.

Defining Creativity

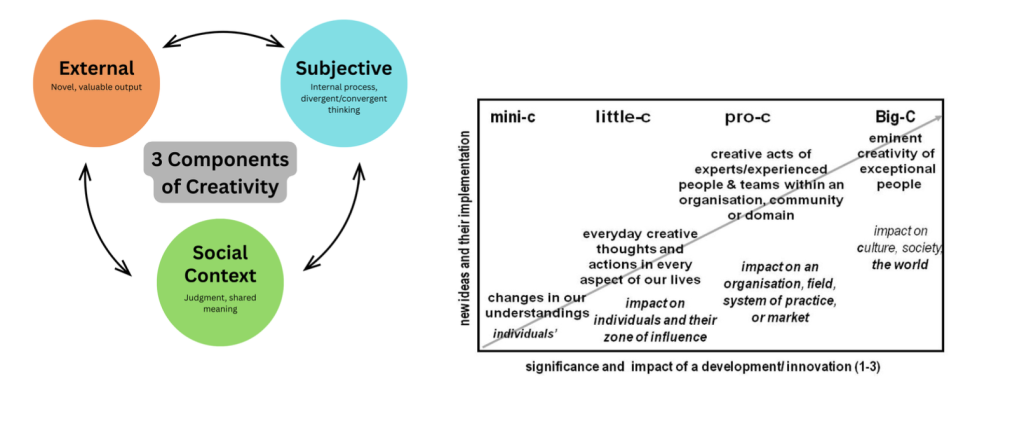

Figure 1: Frameworks of Creativity

(a) Three Components of Creativity. Creativity has external, subjective, and social dimensions: the external dimension focuses on whether an output is novel and useful, the subjective on the mental processes of insight and refinement, and the social on how communities judge value. Together, these show that creativity is not just a product but also a process shaped by context (Mammen et al., 2024). (b) The Four C’s Model. Creativity ranges in scale: mini-c (personal learning), little-c (everyday expression), Pro-c (professional innovation), and Big-C (paradigm-shifting breakthroughs). Most human and AI creativity falls in the first two levels, while Big-C remains rare (Kaufman & Beghetto, 2009). (c) Boden’s Three Types. Creativity can be combinational (rearranging ideas), exploratory (pushing within existing rules), or transformational (changing the rules entirely). AI excels at the first two, but transformational creativity is still largely human-driven (Boden, 2014). Together, these frameworks map the landscape of creativity across processes, products, and scales, providing a foundation for evaluating AI alongside humans.

Creativity as Product

At the simplest level, creativity shows up in the things people make, like a new TikTok trend or clever science project. For something to count as creative, it usually needs two components: it should be novel (new) and it should be valuable in some way (Zhou & Lee, 2024). As psychologist Anna Abraham explains, it’s not enough to just be different. A creative combination like matcha and rice might be “new,” but unless it works — tastes good, solves a problem, or moves people — it doesn’t feel creative. Margaret Boden, one of the most influential philosophers of creativity, breaks novelty into two types: P-creativity, new just to you, like inventing a new way to decorate your room, and H-creativity, new to human history, like when Einstein introduced relativity (Boden, 2014). Both matter, and both give us a way to ask: when AI generates an artwork or idea, is it doing something new just for us, or something genuinely groundbreaking?

Creativity as Process

However, creativity is more than just the final product; it’s also about the process of getting there. Psychologists J.P. Guilford and later E. Paul Torrance described this process as a balance between divergent thinking (brainstorming wild, out-of-the-box ideas, like all the weird uses you could imagine for a paperclip) and convergent thinking (narrowing them down to the ones that actually make sense) (Ding & Li, 2025). Humans also bring in qualities AI lacks: things like curiosity, emotion, and those sudden “aha!” moments when a solution clicks. To visualize this, picture a group planning a vacation. Divergent thinking is brainstorming destinations, throwing out wide-ranging, free-flowing ideas. Convergent thinking is choosing the destination: the group considers practical constraints like budget, time off, and personal preferences to narrow the list down to the single best option.

Creativity as Scale

Finally, creativity comes in different sizes and levels. James Kaufman and Ronald Beghetto’s Four Cs model lays this out nicely. There’s mini-c, which covers personal insights; little-c, which is everyday social creativity, like making up a joke in a conversation; pro-c, expert-level creativity in a career, like a coder designing a new algorithm; and finally big-c, the kind of genius that changes history, like that of Steve Jobs or Picasso (Kaufman & Beghetto, 2009). This framework will be key later on, as we start to see where AI’s creative limits fall on this spectrum through various tests. Boden adds another helpful layer to this idea of creativity on a scale. She adds that some creativity is combinational (remixing old ideas), some is exploratory (pushing boundaries within a system), and some is transformational (changing the rules altogether) (Boden, 2014). For humans, creativity can stretch across all these levels. For AI, the story is more complicated. It’s already powerful at combinational and sometimes pro-c creativity, but whether it will ever reach big-c, transformational breakthroughs is still very much an open question.

Where and How AI’s Creativity Excels

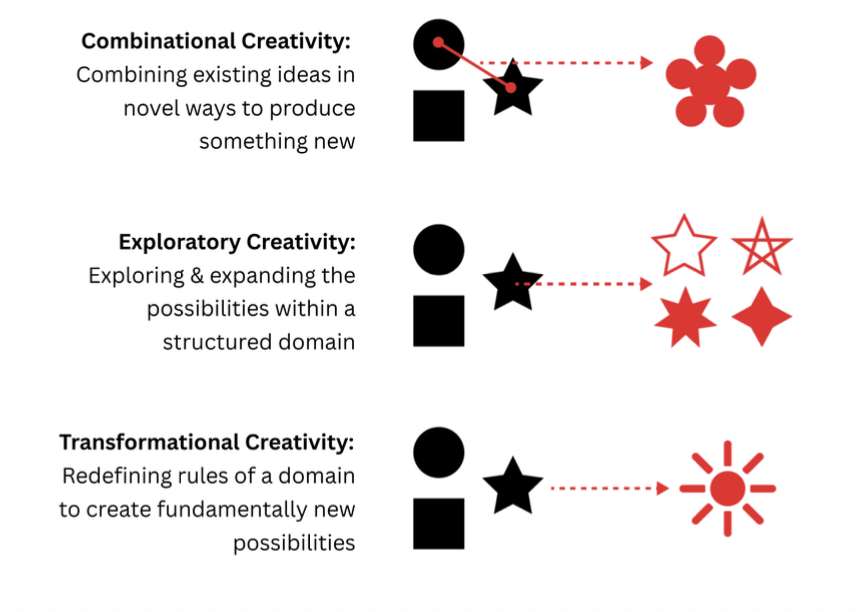

Figure 2: Standardized Tests of Creativity

(a) The Alternative Uses Task (AUT) measures divergent thinking by asking participants to propose as many uses as possible for a common object (e.g., a brick or a paperclip). Scoring emphasizes fluency, originality, flexibility, and elaboration. (b) The Torrance Tests of Creative Thinking (TTCT) use both verbal and visual prompts to assess originality, elaboration, abstractness, and resistance to premature closure. These tools remain the most widely used benchmarks for comparing human and AI creativity (Haase & Hanel, 2023).

Alternative Uses Task (AUT):

One of the simplest and most famous creativity tests is the Alternative Uses Task (AUT), first popularized by J.P. Guilford in the 1960s. The challenge is straightforward: take an everyday object, like a brick or a paperclip, and come up with as many unusual uses for it as possible. Humans usually shine here because our brains make odd connections: a brick can be a paperweight, a stepstool, or even a doorstop. What’s shocking is that today’s AI models are catching up. Where older AIs struggled to go beyond direct and obvious uses like “a knife for cutting food”, newer systems like GPT-4 produce long, creative lists that rival the best human responses. This is a significant leap from GPT-3, a model almost any human could beat on this task. In fact, GPT-4’s top outputs on the AUT now score better than those of about 90% of human participants. The pace is striking: GPT-4 was released only a few years after GPT-3, and AI’s progress does not seem likely to slow down anytime soon (Haase & Hanel, 2023).

Torrance Tests of Creative Thinking (TTCT):

A more comprehensive test, the Torrance Tests of Creative Thinking (TTCT), has also been used to measure AI’s creativity. Designed by psychologist E. Paul Torrance, the TTCT measures different aspects of divergent thinking, like fluency (how many ideas you generate), flexibility (how different the ideas are), originality (how rare they are), and elaboration (how detailed they get). These tests have been used for decades to spot gifted kids and track creative growth over time. What’s surprising is that GPT-4 doesn’t just keep up. In some cases, it actually outperforms the human average (Haase & Hanel, 2023). For example, when scored on fluency and originality, its responses are judged as more creative than those of many human test-takers. We can take from these findings that AI is at least starting to “look” creative, whether or not it truly is. It’s important to note that tests like the AUT and TTCT are designed only to test mini-c and little-c levels of creativity, since pro-c and big-c creativity require years of domain experience. In other words, you can’t measure pro- or big-c-level creativity with a quick brainstorming task; it emerges only after long and specific training in a certain field.
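To make the four scoring dimensions concrete, here is a minimal sketch of how a list of responses might be scored. It is an illustration only, not the official TTCT or AUT scoring procedure: the function name, the hand-coded categories, the 5% rarity cutoff, and the word-count proxy for elaboration are all assumptions made for this example.

```python
def score_divergent_thinking(responses, category_of, pool_counts, pool_size,
                             rare_share=0.05):
    """Score one participant's list of alternative uses (illustrative only).

    category_of: hypothetical hand-coded mapping from response to category.
    pool_counts: how many of the pool_size participants gave each response.
    rare_share:  assumed cutoff below which a response counts as original.
    """
    # Normalize text and drop duplicates while keeping the original order.
    unique = list(dict.fromkeys(r.strip().lower() for r in responses))

    fluency = len(unique)  # how many distinct ideas
    # How many different kinds of ideas (distinct categories).
    flexibility = len({category_of.get(r, "other") for r in unique})
    # How many ideas were given by at most rare_share of the pool.
    originality = sum(
        1 for r in unique if pool_counts.get(r, 0) / pool_size <= rare_share
    )
    # Crude proxy for detail: average number of words per idea.
    elaboration = sum(len(r.split()) for r in unique) / fluency if fluency else 0.0

    return {
        "fluency": fluency,
        "flexibility": flexibility,
        "originality": originality,
        "elaboration": elaboration,
    }


# Made-up responses for the object "brick".
example = score_divergent_thinking(
    responses=["doorstop", "paperweight", "Doorstop ", "grind into pigment for paint"],
    category_of={
        "doorstop": "holding things in place",
        "paperweight": "holding things in place",
        "grind into pigment for paint": "art material",
    },
    pool_counts={"doorstop": 40, "paperweight": 30, "grind into pigment for paint": 1},
    pool_size=100,
)
print(example)
```

In this toy example the repeated "doorstop" is counted once, the two "holding" uses fall into a single category, and only the pigment idea is rare enough to count as original. Real scoring typically relies on trained human raters or validated norms rather than simple counts.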

What These Results Really Mean: Remix Masters, Not Rule-Breakers

So, does passing these tests mean AI is truly creative? Here’s where it gets tricky. Under Boden’s framework for the three kinds of creativity (combinational, exploratory, and transformational), we can conclude that AI is undeniably strong in the first two. It can remix patterns from its training data into fresh combinations faster than any human, which explains why it shines on tests like the AUT and TTCT (Boden, 2014). One study even shows that AI tends to expand the overall “idea space”: while its average novelty may be lower than a human’s, the maximum novelty, reflecting those rare outlier ideas, often rises when AI is in the mix (Zhou & Lee, 2024). In other words, AI sometimes surprises us with ideas no individual human would have considered, simply because it can churn through countless recombinations. Overall, these strengths are real and impressive, but they also hint at the limits we’ll uncover in the next section.
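The difference between average and maximum novelty can be shown with a small sketch. Everything here is an assumption for illustration: the word-overlap distance is a crude stand-in for the semantic or image distances used in actual research, and the novelty scores are made up rather than taken from Zhou & Lee (2024).

```python
from statistics import mean


def jaccard_distance(a, b):
    """Word-overlap distance between two ideas (0 = same words, 1 = no overlap)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 0.0
    return 1 - len(words_a & words_b) / len(words_a | words_b)


def novelty(idea, reference_set):
    """Novelty as the distance to the closest known example."""
    return min(jaccard_distance(idea, ref) for ref in reference_set)


def idea_space_summary(novelty_scores):
    """Average novelty versus the most novel outlier in a pool of ideas."""
    return {"mean": mean(novelty_scores), "max": max(novelty_scores)}


# Made-up novelty scores for two hypothetical pools of ideas.
human_only = [0.42, 0.55, 0.48, 0.60, 0.51]
with_ai = [0.30, 0.35, 0.28, 0.33, 0.91]

print(idea_space_summary(human_only))
print(idea_space_summary(with_ai))
print(novelty("grind brick into pigment", ["use brick as doorstop"]))
```

In this invented data the pool with AI has a lower average but a higher maximum, because one outlier idea sits far from everything else. That is the pattern the "idea space" finding describes: the typical idea can become less novel even while the most novel idea becomes more so.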

It’s also important to note that these tests were run on AI models accessible to the general public, like ChatGPT. Such models are filtered and restricted for safety reasons, so the results show only that the restricted models that were tested did not reach these creative thresholds, not that artificial intelligence in general cannot. Less restricted models might perform differently, though this remains speculation.

Where AI Creativity Falls Short

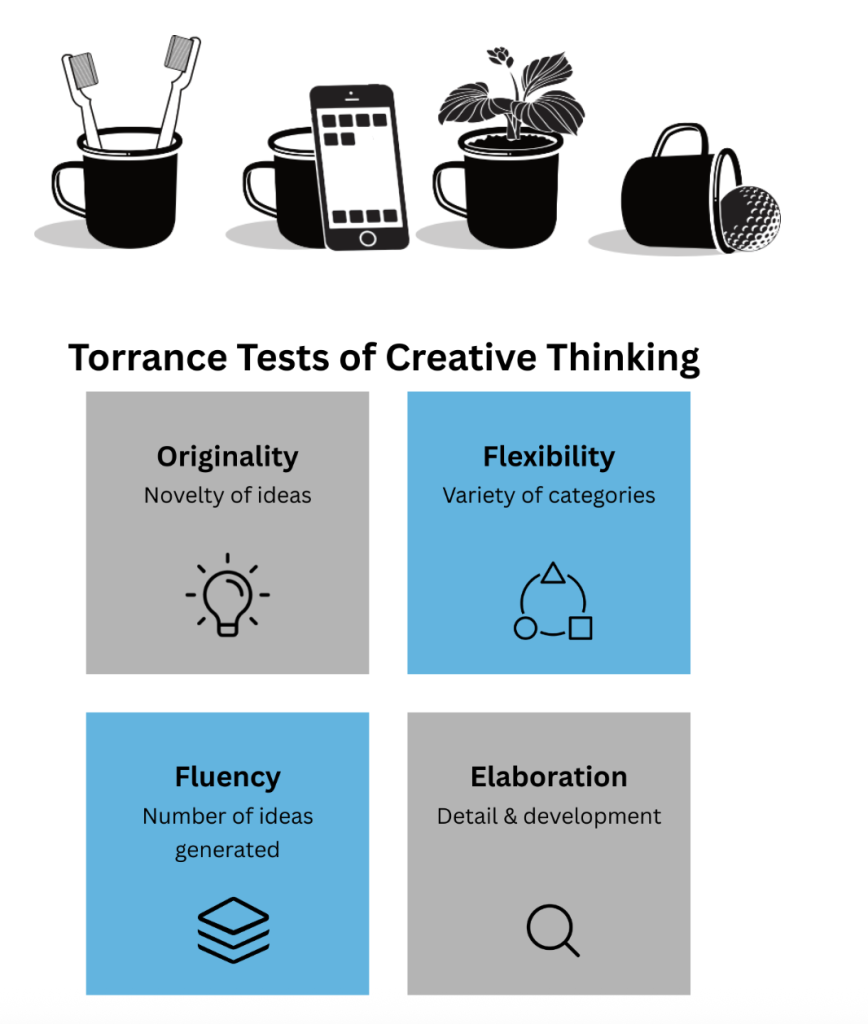

No Curiosity, No “Aha” Moments

One of the clearest limits of AI creativity is that it has no genuine curiosity or capacity for sudden epiphany. Humans often spark new ideas by noticing anomalies, that weird result in an experiment, the unexpected twist in a conversation, and asking, what if? Psychologists call this the “aha moment,” when something clicks in a way that reshapes your thinking. AI doesn’t do this. Studies show it tends to treat every output as expected and rarely adjusts when evidence contradicts its assumptions (Ding & Li, 2025). Simply, it’s stubborn. Humans notice when the math doesn’t add up or when an experiment produces something strange, and that sparks new theories. AI, by contrast, often doubles down on its first guess; confident, but wrong. If Einstein had been like today’s AI, he would have ignored the oddities in Newton’s physics and kept grinding out the same equations. Without curiosity or the instinct to pivot from anomalies, AI struggles to produce the kind of breakthroughs that drive transformational creativity.

Consider how AI is actually being used in climate science. Google’s NeuralGCM can process 40 years of weather data and match existing climate models for accuracy, but it still struggles with the extremely unexpected. As one NASA climate scientist notes, climate models face the challenge of “simulating conditions more extreme than any previously observed” (The Economist, 2024). Handling such unprecedented cases is exactly the kind of anomaly detection that drives scientific breakthroughs. A model like NeuralGCM is bounded by its known parameters: it may excel within that system, but it will struggle when reality doesn’t match its training data.

Figure 3: This flow chart contrasts AI’s process (pretrained knowledge → pattern matching → limited hypothesis set → no anomaly detection) with human scientific reasoning (observation → anomaly detection → hypothesis → experiment/revision). It illustrates why AI lacks “aha moments” and curiosity.

No Emotional Evaluation or Embodied Knowledge

Creativity is also tied to our emotions and our bodies. A painting feels powerful not just because of how it looks, but because it stirs something in us, emotions like awe, joy, or even discomfort. Humor works the same way: a joke lands because it makes your chest tighten with laughter. As scholars point out, AI lacks both emotional evaluation and embodied knowledge (Slack, 2023). It can generate jokes or images that look like art or humor, but it doesn’t know why they matter, why they’re moving, or why they’re funny. Why does this matter for AI’s creative abilities? Without a body to experience the world, the shiver of cold water, the ache of heartbreak, the thrill of scoring a goal, it misses a core ingredient of human creativity. This absence makes AI outputs impressive on the surface but hollow underneath. AI lacks a soul, a voice, that is vital to the human creative experience. This is more a philosophical argument than a technical one, as it holds that an entity lacks creative abilities because it lacks the emotional capabilities to create.

Homogenization and the Downgrading of Human Creativity

Finally, there are real concerns about how AI affects our own creativity. Research suggests that with overuse of and overreliance on AI, people are beginning to lose their curiosity and confidence in their own ideas. Teenagers, for instance, have reported that their writing feels less original after leaning heavily on AI prompts. We can attribute this to a concept known as algorithmic flattening: the process by which algorithms reduce a complex, multidimensional entity or concept into a single, simplistic metric or ranking, often losing critical context and nuance along the way. As a result, creative outputs start to look and sound the same, narrowing our imaginative and creative angles (Mammen et al., 2024; Haase & Hanel, 2023). Instead of opening up new directions, overreliance on AI risks nudging both individuals and society toward sameness and conformity. This tendency makes sense for AI models. They are trained to produce the most probable answer given their training data. What computer would go against the odds? In contrast, humans often make decisions that defy probability or logic, and it is precisely these imperfect, unexpected leaps that, ironically, give humans their sense of individuality and creativity.
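A toy sketch can show why always choosing the most probable answer pushes outputs toward sameness. This is not how any particular AI system is implemented: the four candidate ideas and their scores are invented, and real language models choose among tens of thousands of word fragments, usually with some randomness controlled by a setting commonly called temperature.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Spread of a probability distribution (higher = more varied choices)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def pick(options, scores, temperature, rng):
    """Temperature 0 means always take the single most probable option."""
    if temperature == 0:
        best = max(range(len(scores)), key=lambda i: scores[i])
        return options[best]
    return rng.choices(options, weights=softmax(scores, temperature), k=1)[0]


# Invented candidate uses for a brick, with invented model scores.
options = ["doorstop", "paperweight", "garden border", "bookend"]
scores = [3.0, 1.0, 0.5, 0.2]

rng = random.Random(0)
always_likeliest = {pick(options, scores, 0, rng) for _ in range(50)}
sampled = {pick(options, scores, 2.0, rng) for _ in range(50)}

print(always_likeliest)  # only ever the top-scoring idea
print(sampled)           # usually several different ideas
print(entropy(softmax(scores, 0.5)), entropy(softmax(scores, 2.0)))
```

Fifty picks of the likeliest option give the same idea fifty times, while sampling at a higher temperature spreads choices across less likely ideas. Even then the choices stay inside the set of options the model already scores, which is one way to picture the flattening concern.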

Future Implications and Conclusion

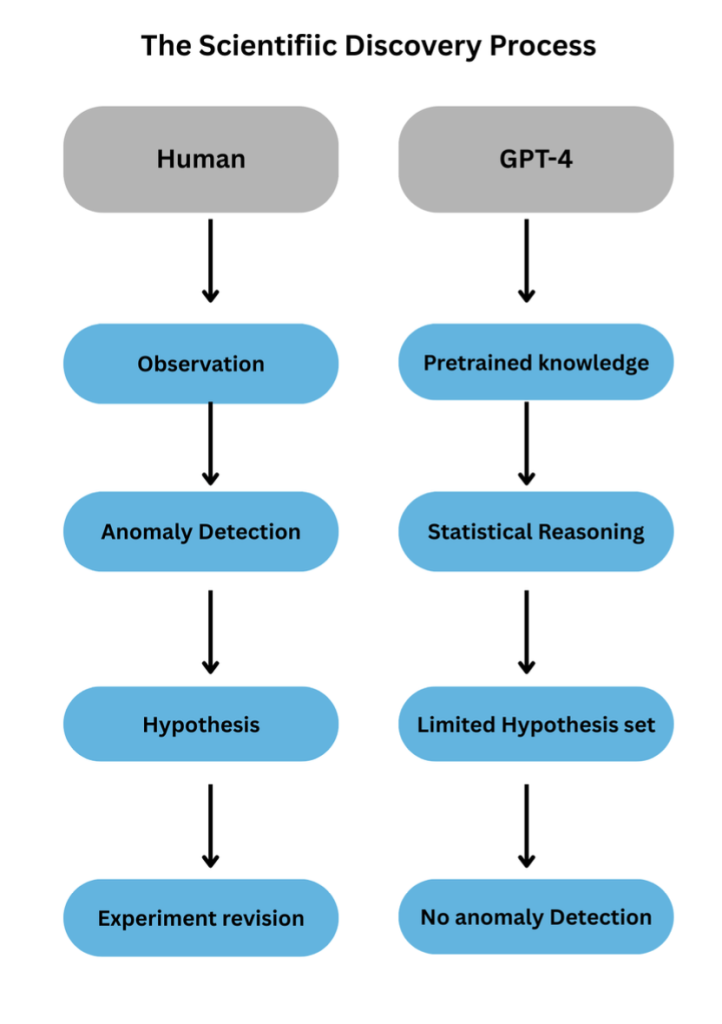

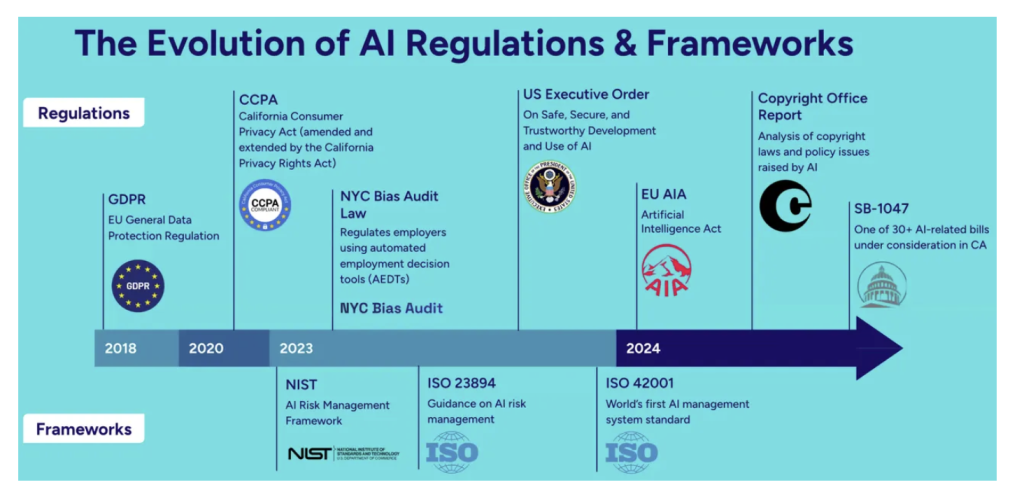

Figure 4: The Evolution of AI Regulations & Frameworks

This timeline illustrates key legal landmarks shaping AI and creative ownership. From early privacy laws like GDPR, it progresses through the U.S. Copyright Office’s formal reaffirmation that only “human-authored” works qualify for copyright, the rejection of AI-generated entries, and patent rulings such as Thaler v. Vidal, which require a human inventor. It also notes the emerging framework of the EU’s Artificial Intelligence Act, which extends obligations (such as respecting opt-out provisions under the EU Copyright Directive) to AI providers globally, affecting how GPT-style systems train their models. These milestones highlight how lawmakers are actively defining what counts as creative ownership in the age of AI (Vartabedian, 2025).

Creativity and the Law: Who Owns AI Art?

The law is still playing catch-up with AI’s creative boom. In the U.S., U.K., and E.U., copyright and patent systems are still firmly confined to human authorship. For example, the U.S. Copyright Office states plainly: “an original work of authorship must be created by a human author”, which is why attempts to register AI-only works have all been denied. Patent rulings, like Thaler v. Vidal, reinforce this idea: the inventor must be human. This is as much a philosophical tension as a legal one. Policymakers will have to decide whether we should protect creativity because of the process (the human imagination, emotional meaning, and social context) or the product (the output itself, no matter how it was made) (Mammen et al., 2024; Haase & Hanel, 2023). The stakes of these decisions are enormous. The New York Times is currently suing Microsoft and OpenAI for billions of dollars, claiming ChatGPT can reproduce its journalism “word for word.” OpenAI counters that this “regurgitation” is just a rare bug, but the case reveals a fundamental tension: if AI can perfectly reproduce copyrighted work, is it truly creating something new, or just performing sophisticated plagiarism? How lawmakers answer this will shape the creative economy for decades to come (The Economist, 2024).

Human-AI Collaboration: Designing for Co-Creation, Not Replacement

Right now, most human-AI teamwork is surprisingly shallow. Studies find that the dominant patterns are “AI-first”, where the AI makes a prediction and the human supervises, and “AI-follow”, where the human leads and the AI supports. True collaboration is rare. But more dynamic possibilities exist: “request-driven” help (asking the AI specific questions), “AI-guided dialogue” (the AI keeps a creative back-and-forth alive), and “user-guided adjustments” (the human actively reshapes AI outputs). What about the risks? They’re real, as people can fall into cognitive biases like anchoring and confirmation bias. The true danger is a loss of agency, where humans give up control of the creative process. The challenge for future AI developers and users is to design and use AI in a way that empowers humans rather than numbing them (Gomez et al., 2025). Some collaborations already show this potential. Board game designer Alan Wallat used AI to play his game ‘Sirius Smugglers’ thousands of times, discovering a rule flaw that could make games run indefinitely, something human playtesters might have missed or taken much longer to find (The Economist, 2025). Here, AI served as a powerful testing tool while Wallat retained creative control over the design and final decisions.

Scientific Discovery: Incremental vs. Transformational Innovation

Science gives us another lens on AI’s limits. We’ve covered how current generative models are excellent at incremental discoveries, recombining existing knowledge and running through known hypothesis spaces, but stumble on the kind of transformational breakthroughs that come from curiosity and anomaly detection (Ding & Li, 2025). What does this mean? AI makes for a great lab assistant: it’s fast, tireless, and precise, but it is not yet a scientific revolutionary. It can help us test thousands of variations of a drug molecule, but it won’t suddenly “guess” DNA’s double helix or imagine relativity out of the blue. For now, only humans are capable of those flashes of genius.

Acknowledgments

The author would like to acknowledge Dr. Pan for his support and guidance during the research and publishing of this paper.

References

Boden, M. A. (2014). Creativity and Artificial Intelligence: A Contradiction in Terms? In The Philosophy of Creativity: New Essays. Oxford Academic. https://academic.oup.com/book/6463/chapter/150310938

Ding, A. W., & Li, S. (2025). Generative AI lacks the human creativity to achieve scientific discovery from scratch. Scientific Reports, 15(1), 9587. https://doi.org/10.1038/s41598-025-93794-9

Kaufman, J. C., & Beghetto, R. A. (2009). Beyond Big and Little: The Four C Model of Creativity. Review of General Psychology, 13(1), 1–12. https://doi.org/10.1037/a0013688

Mammen, C., Collyer, M., Dolin, R. A., Gangjee, D. S., Melham, T., Mustaklem, M., Sundaralingam, P., & Wang, V. (2024). Creativity, Artificial Intelligence, and the Requirement of Human Authors and Inventors in Copyright and Patent Law. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.4892973

Slack, G. (2023). What DALL-E Reveals About Human Creativity | Stanford HAI. https://hai.stanford.edu/news/what-dall-e-reveals-about-human-creativity

The Economist. (2024). Artificial intelligence is helping improve climate models. The Economist. https://www.economist.com/science-and-technology/2024/11/13/artificial-intelligence-is-helping-improve-climate-models

The Economist. (2024). Does generative artificial intelligence infringe copyright? The Economist. https://www.economist.com/the-economist-explains/2024/03/02/does-generative-artificial-intelligence-infringe-copyright

The Economist. (2025). How artificial intelligence can make board games better. The Economist. https://www.economist.com/science-and-technology/2025/02/26/how-artificial-intelligence-can-make-board-games-better

Vartabedian, M. (2025). AI Governance: When In Doubt, Ask. Then Ask Again. No Jitter. https://www.nojitter.com/ai-automation/ai-governance-when-in-doubt-ask-then-ask-again-

Zhou, E., & Lee, D. (2024). Generative artificial intelligence, human creativity, and art. PNAS Nexus, 3(3), pgae052. https://doi.org/10.1093/pnasnexus/pgae0

About the author

Connor Luke Kao

Connor is a high school senior interested in cognitive science and exploring how AI can help future sports and business applications. He’s a competitive soccer player and enjoys creating spray paint murals in his free time. Through various business programs, he’s gained entrepreneurial experience reselling clothes on Depop and founding The Harvesting Sustainability Project, a community initiative that harvests overgrown produce from local neighborhoods and repurposes and redistributes it to those in need.