Author: Alexander Tian

Mentor: Dr. Hong Pan

Scarsdale High School

Abstract

Evolutionary and biological processes have been studied for ages, and have been extremely influential in the creation of machine learning approaches, including neural networks and genetic algorithms. In this paper, an approach that combines the essence of these two algorithms, named NeuroEvolution of Augmenting Topologies (NEAT) is reviewed. We explain how the approach functions, its advantages, disadvantages, and potential future directions in development from robotics to self-driving vehicles.

Keywords

- Network Topology: The structure, arrangement, and interconnection of neurons or nodes within a neural network.

- Genotype: The genetic makeup of the neural network.

- Phenotype: The observable behaviors or characteristics of a neural network.

- Loss Function: A function that quantifies the accuracy of predicted values versus actual values in a model, used for optimization purposes during the training of a model.

Introduction

Neural networks and genetic algorithms are systems that are fundamentally based on structures and processes found in biological systems. They have existed for decades, and have both had a major influence on the world and technological development.

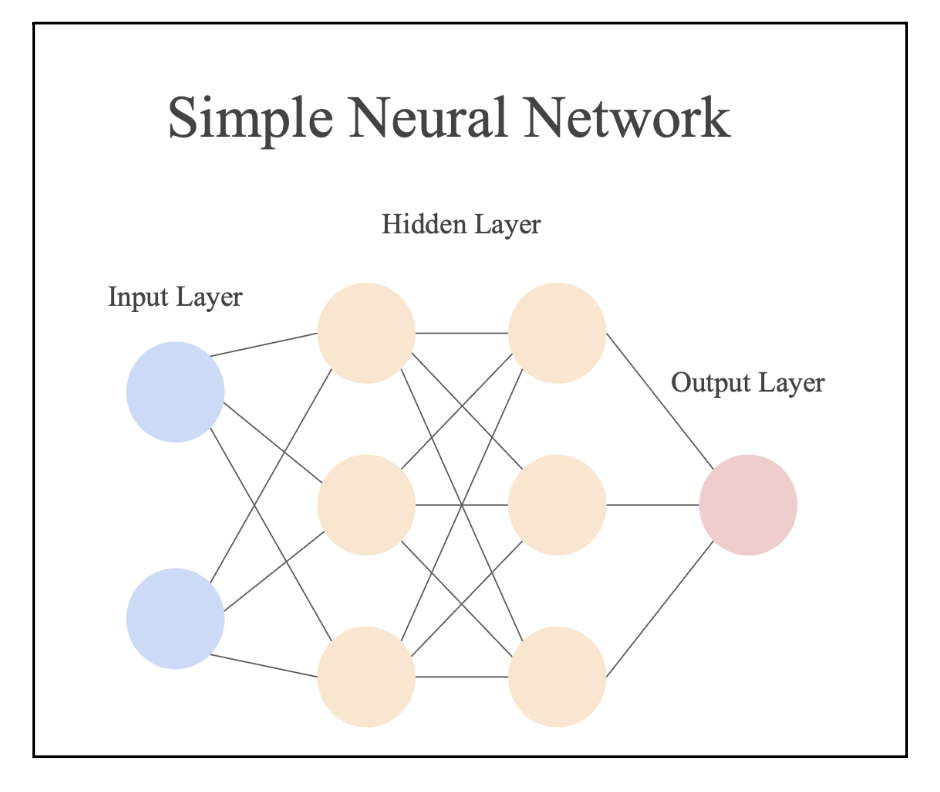

Neural networks are designed to simulate neurons in the human brain such that computers can efficiently learn and make decisions. They are normally implemented with layers of neurons and connections in between, with each neuron having its own weight and bias as inputs and outputs to and from other neurons as shown in Figure 1.

Through certain calculations, the weights and biases in the network are able to be altered and improve the network performance. By training over a plethora of data, a neural network with an adequate structure and loss function for a specific problem can become very accurate and efficient. Neural networks have many forms and variations, and have excelled in many areas such as image classification, speech recognition, natural language processing and more (IBM. (n.d.).

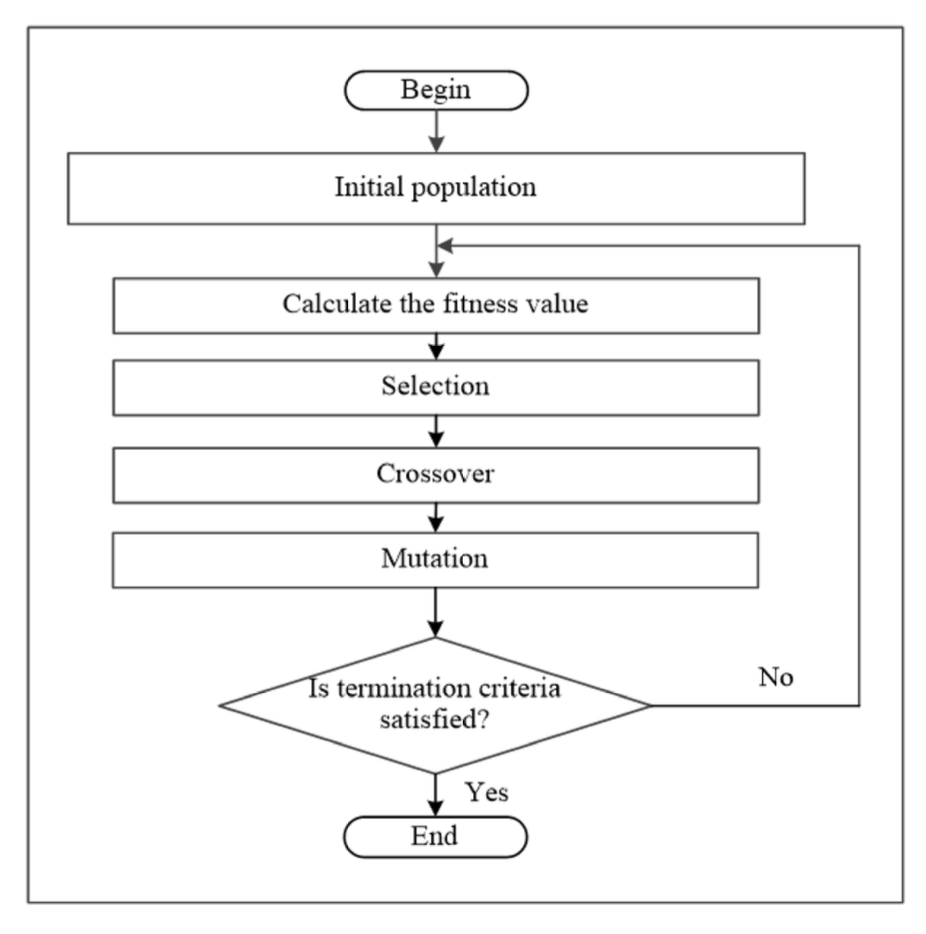

Similarly, genetic algorithms follow biological processes and tackle problems based on the principles of evolution and genetics. They were first developed by John Holland and his collaborators in the 1960s to 1970s and usually follow a process that contains the steps: creating a population, evaluating the population by a fitness function, selection of the fittest genes, reproduction to create offspring, mutation of genes, replacement with new offspring and termination (Yang, 2014).

While neural networks can be very efficient given that the derivative of a loss function can be calculated, genetic algorithms do not require one; thus, they can be able to handle more complex problems with vast search spaces and complex solutions that can be implemented as easily as a neural network.

NeuroEvolution of Augmenting Topologies (NEAT) integrates these two systems—garnering beneficial qualities of both to effectively tackle problems. NEAT was developed by Kenneth O. Stanley and Risto Miikkulainen in 2002 at the University of Texas in Austin, incorporating the idea of evolution from genetic algorithms to progressively improve neural network structures, also known as topologies (Stanley & Miikkulainen, 2002). It leverages the efficiency of genetic algorithms to evolve and optimize networks, and the decision making capability of neural networks to direct the evolution.

The Algorithm

NEAT follows a similar procedure to standard genetic algorithms with further consideration for the aspect of artificial neural networks.

1. Initialization

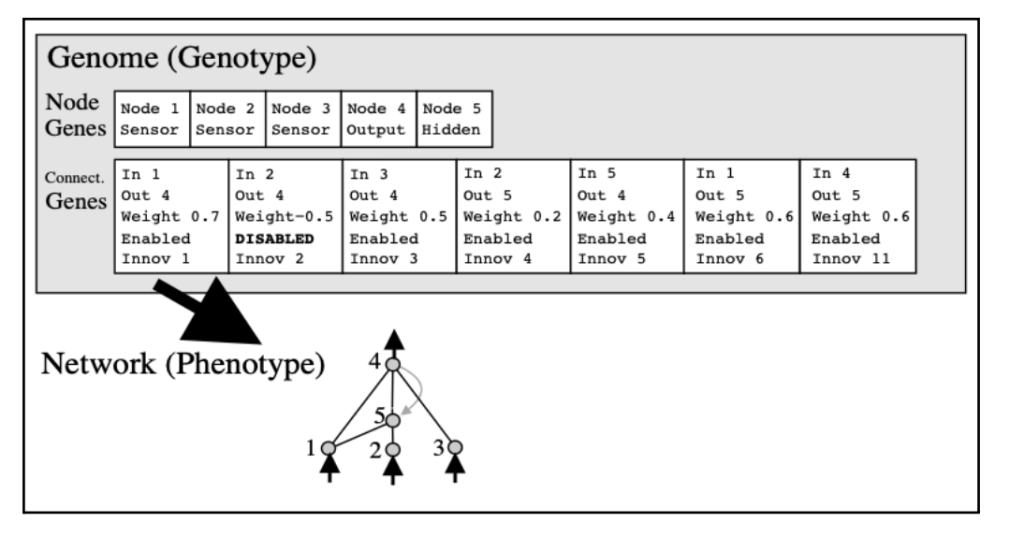

First, neural networks are designed with constant input and output layers to create the initial population. Although the topology of the neural network will evolve, the input and output layers stay constant. The neurons are represented with information such as connections, weights, and an innovation number. The innovation number is useful for identifying unique aspects of the neural network, so neural networks can be easily compared, and unique neural networks can be distinguished from others (Stanley & Miikkulainen, 2002).

Next, a fitness function must be created to evaluate the neural network and determine if one network is better than the other. While standard neural networks alter weights and biases through backpropagation, NEAT mutates the weight and biases in a later step and only requires a standard fitness function to compare networks (Stanley & Miikkulainen, 2002).

2. Speciation

This next stage of the algorithm aims to preserve unique neural network topologies. Here, similar neural networks are grouped as a species based on different metrics such as the aforementioned matching node innovation numbers.

3. Reproduction / Crossover

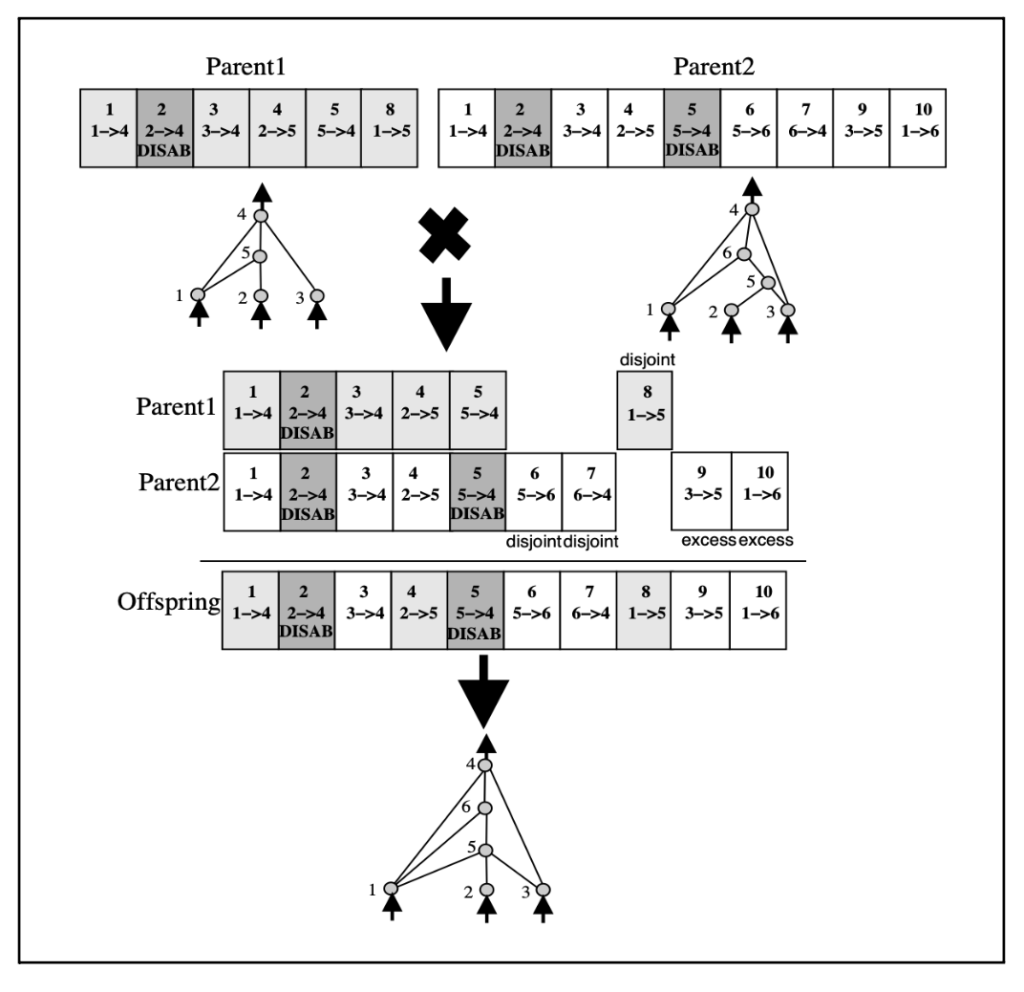

During crossover, networks in the same species have a chance to produce offspring which have mixed information and attributes from the parents to create a new neural network (Stanley & Miikkulainen, 2002). Crossover is done by:

- Selecting a dominant parent

- Find all connections shared by both neural networks by finding matching innovation numbers

- Randomly give the child some of these connections that are shared between the parents

- Inherit all unshared connections from the fittest parent

4. Mutation

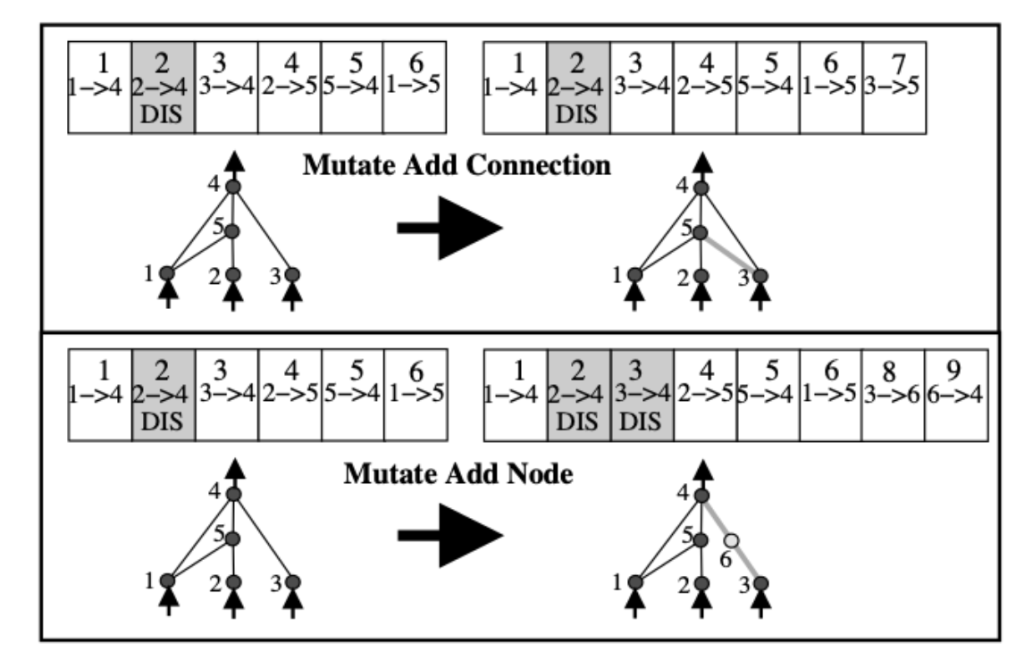

During the process of mutation, some neural networks will undergo random mutations. These mutations can add new connections between nodes or introduce new nodes, thereby increasing the complexity of the neural network (Stanley & Miikkulainen, 2002). Either:

- Add a connection between two unconnected nodes

- Add a node between two nodes that are already connected, disabling the old connection and creating two new ones to preserve the structure of the network.

- Change the weight of a connection between nodes

5. Create a New Generation with offspring

During this stage, some of the neural networks from each of the old species, determined in the speciation step, are discontinued. The new offspring that have been produced through crossover and mutation are now added to the new generation.

Advantages

1. Versatility

Similar to a standard genetic algorithm, a core advantage of NEAT over standard neural networks is that it doesn’t require a gradient to be calculated, and doesn’t require a differentiable loss function—meaning that the algorithm can function better in more complex environments, and is much more versatile (Stanley & Miikkulainen, 2002). NEAT can be applied to a wide range of optimization problems, including reinforcement learning and control, data classification, and function approximation. It doesn’t rely on the problem being differentiable, so it can be applied to problems that are hard for gradient-based methods to solve.

2. Adaptable

Compared to standard genetic algorithms, NEAT also has the advantage of being able to use neural networks to form complex outputs based on certain inputs. NEAT is able to effectively evolve the network topology without human intervention; thus, it is highly adaptable and can evolve networks that perfectly match the complexity of the problem. In the original paper describing NEAT by Kenneth O. Stanley and Risto Miikkulainen, a pole balancing test was conducted to demonstrate the performance of the algorithm. In one pole balancing test, NEAT showed performance on par with another evolutionary neural network algorithm, ESP, yet it was able to adapt to the problem and minimize its neural network much more efficiently. While ESP developed networks using 10 hidden nodes, NEAT was able to consistently create networks with 0-4 hidden nodes. Furthermore, in another pole balancing test, NEAT was 5 times faster than ESP due to its ability to evolve the network topology (Stanley & Miikkulainen, 2002).

3. Innovation Protection

Another unique advantage of NEAT is its innovation protection. Through speciation, NEAT gives innovative but potentially weaker solutions a chance to improve before they are competed out of the population, protecting innovation, maintaining diversity, and hence pushing the evolution towards better solutions (Stanley & Miikkulainen, 2002).

In essence, the NEAT’s biologically inspired mechanisms offer advantages such as versatility, adaptability to problems, and innovation protection in its solutions.

Disadvantages

1. Speed

The main disadvantage of NEAT is how slow it is compared to a traditional neural network, as it follows the procedure of a genetic algorithm and doesn’t use gradients. Genetic algorithms and algorithms such as NEAT can require many iterations and generations to evolve an effective network.

2. Hyperparameters

While NEAT automatically evolves network topology, as it is similar to a genetic algorithm, it still has a number of hyperparameters such as mutation rates, population size, and more, requiring tuning for optimal performance (Yang, 2014). Fine-tuning these parameters is often a non-trivial task raising issues of increased complexity.

3. Other Algorithms

Furthermore, while NEAT is adaptable to many problems and domains due to its flexible nature, other algorithms and neural networks may be more desirable in certain situations.

Potential Applications

NEAT has many potential applications in the real world. Below are a few demonstrations of NEAT in important fields / domains.

Robotics

NEAT can be applied to the design and optimization of neural networks that control robots and autonomous systems. By evolving network topologies that can effectively process sensory inputs and make decisions, NEAT can contribute to the development of robots that can navigate complex environments, perform tasks, and adapt to changing circumstances.

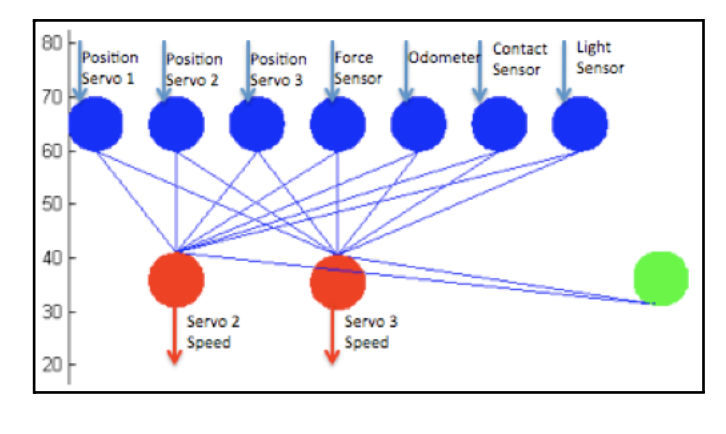

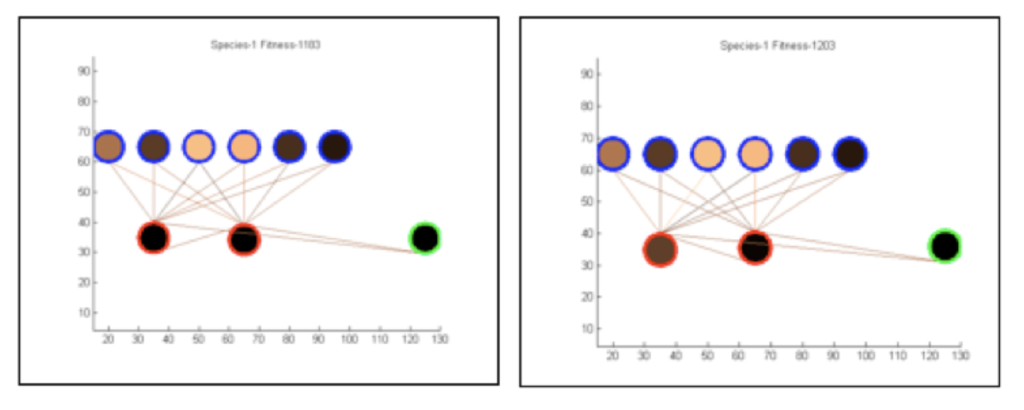

In a paper studying NEAT by Roby Velez, NEAT was applied to have a crawling robot move both forwards and backwards in order to follow a light. The network that controlled the robot had seven inputs corresponding to sensors on the robot, and two outputs corresponding to servos as shown in Figure 6 (Velez, n.d.).

Although a physical robot was created as a means of demonstration, computer simulations of the robot were used with NEAT to evolve the networks faster. Four different experiments were conducted, with different parameters and NEAT demonstrated that it could develop complex behaviors and motion, and successfully accomplish the given problem of following the light. Furthermore, with an experimental group that seeded the initial population with networks which could crawl forwards, NEAT evolved the simulated robots to a much higher fitness rating in his tests (Velez, n.d.).

Due to NEAT being able to evolve its neural network topology to tackle a specified problem, it could innovate unique and complex behaviors that do not require a human to develop. In the realm of robotics, this can be important where certain behaviors and motions need to be learnt and can be difficult to directly program into a robot.

Agents in Games

NEAT offers significant potential as a game playing agent in video games, especially in those with dynamic and complex environments that may not be tackled by traditional approaches. While conventional methods to design game playing agents typically rely on predefined rules and strategies—which may be restrictive in intricate and ever-changing game settings—NEAT provides a refreshing alternative. By harnessing evolutionary principles, NEAT evolves neural networks that empower these agents to adapt, learn from their experiences, and continually enhance their performance through successive generations.

NEAT also has the capability to discover unconventional strategies that human developers might not have envisioned. For example, NEAT was able to discover a complex and unconventional strategy of mobility in the game of Othello (Andersen et al., 2002). The predominant strategy of positional play was challenged by the mobility strategy that NEAT developed, a strategy in which giving up short term advantages for future prospects was advantageous. While the unique strategy NEAT developed was not able to defeat the more traditional approached minimax algorithm, its discovery of such a strategy demonstrates NEAT’s ability for exploring novel avenues of play (Andersen et al., 2002).

Financial Modeling

NEAT can be employed to create predictive models for financial markets and economic trends. The algorithm can evolve neural networks capable of analyzing historical data, identifying patterns, and making informed predictions about market behavior. Such models could aid in decision-making for investments and risk management.

Self Driving Vehicles

Self-driving vehicles represent an extremely important, applied field of AI and robotics, and NEAT could play a significant role in advancing their development. NEAT’s ability to evolve neural network topologies can contribute to creating robust and adaptable autonomous driving systems.

Currently, neural networks and reinforcement learning are generally used to train self-driving vehicles, and one such algorithm is Q-Learning. Q-Learning is a reinforcement learning algorithm that aims to maximize cumulative rewards by updating Q-values (Ishtiaq et al., 2022). In other words, it is an approach that iteratively trains a model based on its actions and results.

In a comparative study between Q-Learning and NEAT by Arhum Ishtiaq et al., NEAT was found to be much more effective in simple environments while Q-Learning’s effectiveness varied throughout different environments. However, NEAT faced more difficulty in complex environments, such as having to handle sharp turns, and this could be due to the fact that NEAT starts with smaller networks and must gradually evolve them. NEAT’s challenge in this example illustrates the necessity to optimize the algorithm and tune its hyperparameters for effectiveness and better performance (Ishtiaq et al., 2022).

Conclusion

In conclusion, NeuroEvolution of Augmenting Topologies (NEAT) is a powerful algorithm that bridges the worlds of neural networks and genetic algorithms, offering unique advantages and potential applications. NEAT leverages the evolutionary principles of genetic algorithms to progressively improve the topology of neural networks, making it highly adaptable and suitable for a wide range of applications. While NEAT has its limitations, its potential can still be explored in many domains, and is also being tinkered with to produce new algorithms such as HyperNEAT.

Ultimately, NEAT represents an interesting approach to problem solving and learning in AI, and has the potential to continue making significant contributions to various domains where its unique abilities could be leveraged.

References

Albadr, M. A., Tiun, S., Ayob, M., & AL-Dhief, F. (2020). Genetic Algorithm Based on Natural Selection Theory for Optimization Problems. Symmetry, 12(11), Article 11. https://doi.org/10.3390/sym12111758

Andersen, T., Stanley, K., & Miikkulainen, R. (2002). Neuro-Evolution Through Augmenting Topologies Applied To Evolving Neural Networks To Play Othello.

Ishtiaq, A., Anees, M., Mahmood, S., & Jafry, N. (2022, September 19). Comparative Study of Q-Learning and NeuroEvolution of Augmenting Topologies for Self Driving Agents. arXiv.Org. https://arxiv.org/abs/2209.09007v1

Stanley, K. O., & Miikkulainen, R. (2002). Evolving Neural Networks through Augmenting Topologies. Evolutionary Computation, 10(2), 99–127. https://doi.org/10.1162/106365602320169811

Velez, R. (n.d.). Using NEAT to teach a crawling robot to follow a light. https://www.cs.swarthmore.edu/~meeden/cs81/s09/finals/Roby.pdf

What are Neural Networks? | IBM. (n.d.). Retrieved August 4, 2023, from https://www.ibm.com/topics/neural-networks

Yang, X.-S. (2014). Chapter 5—Genetic Algorithms. In X.-S. Yang (Ed.), Nature-Inspired Optimization Algorithms (pp. 77–87). Elsevier. https://doi.org/10.1016/B978-0-12-416743-8.00005-1

About the author

Alexander Tian

Alexander is currently a high school student interested in STEM — in particular, Computer Science. In the past few years he has undertaken several programming projects during his free time—one notable project being a superhuman chess engine. Apart from STEM and programming, Alexander also plays chess competitively and enjoys playing the piano.